Upgrading the orachk Tool

Original version: 22.3, new version: 23.2

Download from:

Autonomous Health Framework (AHF) - Including TFA and ORAchk/EXAchk (Doc ID 2550798.1)

[root@rac1 soft]# unzip ahf-linux_v23.2.0.zip

archive: ahf-linux_v23.2.0.zip

inflating: readme.txt

inflating: ahf_setup

extracting: ahf_setup.dat

inflating: oracle-tfa.pub

[root@rac1 soft]# ll

total 767196

-rw-r--r-- 1 root root 382181907 mar 23 14:15 ahf-linux_v23.2.0.zip

-rwx------ 1 root root 403405022 mar 4 02:46 ahf_setup

-rw------- 1 root root 384 mar 4 02:46 ahf_setup.dat

drwxr-xr-x 2 root root 42 mar 16 10:14 db

drwxr-xr-x. 65 root root 4096 mar 15 20:35 grid

-rw-r--r-- 1 root root 625 mar 4 02:46 oracle-tfa.pub

drwxr-xr-x 3 root root 16 mar 16 13:18 patch

-rw-r--r-- 1 root root 1525 mar 4 02:46 readme.txt

[root@rac1 soft]# chmod +x ahf_setup

[root@rac1 soft]# ./ahf_setup

ahf installer for platform linux architecture x86_64

ahf installation log : /tmp/ahf_install_232000_15026_2023_03_23-14_19_29.log

starting autonomous health framework (ahf) installation

ahf version: 23.2.0 build date: 202303021115

ahf is already installed at /opt/oracle.ahf

installed ahf version: 22.3.0 build date: 202211210342

do you want to upgrade ahf [y]|n : y

ahf will also be installed/upgraded on these cluster nodes :

1. rac2

the ahf location and ahf data directory must exist on the above nodes

ahf location : /opt/oracle.ahf

ahf data directory : /oracle/app/grid/oracle.ahf/data

do you want to install/upgrade ahf on cluster nodes ? [y]|n : y

upgrading /opt/oracle.ahf

shutting down ahf services

upgrading ahf services

starting ahf services

no new directories were added to tfa

directory /oracle/app/grid/crsdata/rac1/trace/chad was already added to tfa directories.

ahf upgrade completed on rac1

upgrading ahf on remote nodes :

ahf will be installed on rac2, please wait.

ahf will prompt twice to install/upgrade per remote node. so total 2 prompts

do you want to continue y|[n] : y

ahf will continue with upgrading on remote nodes

upgrading ahf on rac2 :

[rac2] copying ahf installer

root@rac2's password:

[rac2] running ahf installer

root@rac2's password:

do you want ahf to store your my oracle support credentials for automatic upload ? y|[n] :

.------------------------------------------------------------.

| host | tfa version | tfa build id         | upgrade status |

+------+-------------+----------------------+----------------+

| rac1 | 23.2.0.0.0  | 23200020230302111526 | upgraded       |

| rac2 | 23.2.0.0.0  | 23200020230302111526 | upgraded       |

'------+-------------+----------------------+----------------'

setting up ahf cli and sdk

ahf is successfully upgraded to latest version

moving /tmp/ahf_install_232000_15026_2023_03_23-14_19_29.log to /oracle/app/grid/oracle.ahf/data/rac1/diag/ahf/

[root@rac1 soft]# orachk -v

orachk version: 23.2.0_20230302

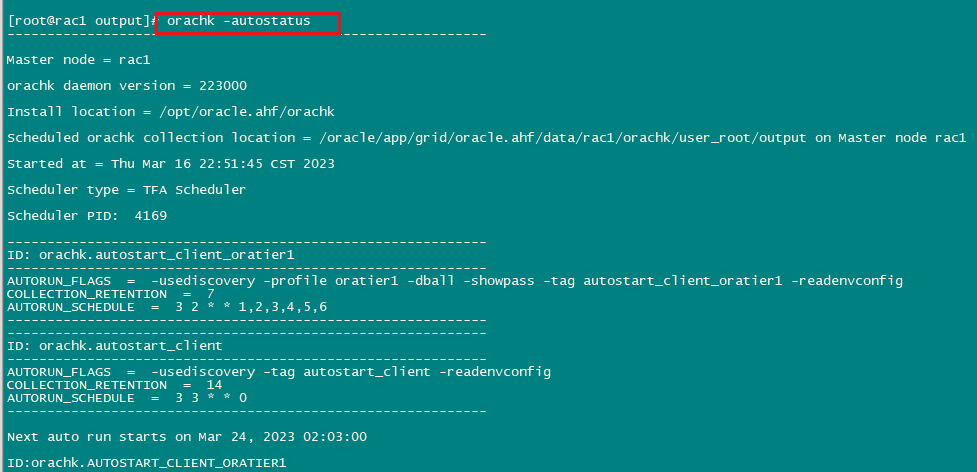

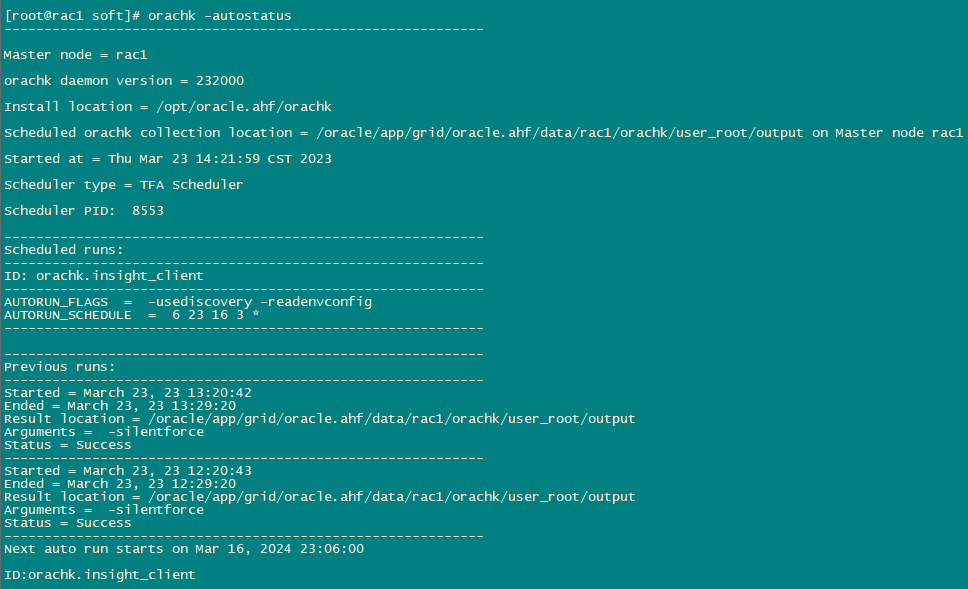
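When the same upgrade is rolled out to several clusters, this version check can be scripted. Below is a minimal Python sketch. It assumes the `orachk version: <version>_<yyyymmdd>` line format printed above and leaves out actually running `orachk -v`. `parse_orachk_version` is a hypothetical helper and is not part of AHF.

```python
import re
from datetime import date

# Matches e.g. "orachk version: 23.2.0_20230302" (case-insensitive).
VERSION_LINE = re.compile(r"orachk\s+version:\s*(\d+(?:\.\d+)*)_(\d{8})", re.IGNORECASE)


def parse_orachk_version(output: str):
    """Return ((major, minor, ...), build_date) parsed from `orachk -v` output."""
    m = VERSION_LINE.search(output)
    if m is None:
        raise ValueError("no 'orachk version:' line found")
    numbers = tuple(int(part) for part in m.group(1).split("."))
    stamp = m.group(2)  # yyyymmdd
    build = date(int(stamp[:4]), int(stamp[4:6]), int(stamp[6:]))
    return numbers, build


version, build = parse_orachk_version("orachk version: 23.2.0_20230302")
print(version, build)  # (23, 2, 0) 2023-03-02
if version < (23, 2):
    raise SystemExit("orachk is still older than 23.2")
```

Tuples compare element by element, so `(23, 2, 0) >= (23, 2)` holds and the check passes for the upgraded tool.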

[root@rac1 soft]# orachk -autostatus

------------------------------------------------------------

master node = rac1

orachk daemon version = 232000

install location = /opt/oracle.ahf/orachk

scheduled orachk collection location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output on master node rac1

started at = thu mar 23 14:21:59 cst 2023

scheduler type = tfa scheduler

scheduler pid: 8553

------------------------------------------------------------

scheduled runs:

------------------------------------------------------------

id: orachk.insight_client

------------------------------------------------------------

autorun_flags = -usediscovery -readenvconfig

autorun_schedule = 6 23 16 3 *

------------------------------------------------------------

------------------------------------------------------------

previous runs:

------------------------------------------------------------

started = march 23, 23 13:20:42

ended = march 23, 23 13:29:20

result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output

arguments = -silentforce

status = success

------------------------------------------------------------

started = march 23, 23 12:20:43

ended = march 23, 23 12:29:20

result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output

arguments = -silentforce

status = success

------------------------------------------------------------

next auto run starts on mar 16, 2024 23:06:00

id:orachk.insight_client
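The autorun_schedule value above looks like a five-field cron expression. The field order is assumed here to be minute, hour, day of month, month, day of week. That is an assumption, but it reproduces the reported next run of Mar 16, 2024 23:06:00 for `6 23 16 3 *`. A short Python sketch can compute the next run time. `parse_field` and `next_run` are hypothetical helpers, and they only handle `*`, single numbers and comma-separated lists.

```python
from datetime import datetime, timedelta


def parse_field(field: str, lo: int, hi: int) -> set:
    """Allowed values for one cron field: '*', 'N', or a comma list like 'N,M'."""
    if field == "*":
        return set(range(lo, hi + 1))
    return {int(v) for v in field.split(",")}


def next_run(schedule: str, after: datetime) -> datetime:
    """Return the first whole minute strictly after `after` matching `schedule`.

    Assumed field order: minute hour day-of-month month day-of-week (0 = Sunday).
    Day-of-month and day-of-week are ANDed here, which matches classic cron
    only when at least one of them is '*'.
    """
    minute, hour, day, month, weekday = schedule.split()
    minutes = parse_field(minute, 0, 59)
    hours = parse_field(hour, 0, 23)
    days = parse_field(day, 1, 31)
    months = parse_field(month, 1, 12)
    weekdays = parse_field(weekday, 0, 6)

    # Start at the next whole minute after `after`.
    t = after.replace(second=0, microsecond=0) + timedelta(minutes=1)
    limit = t + timedelta(days=5 * 366)  # give up after roughly five years
    while t < limit:
        day_matches = (t.month in months and t.day in days
                       and t.isoweekday() % 7 in weekdays)
        if not day_matches:
            # Skip straight to midnight of the following day.
            t = (t + timedelta(days=1)).replace(hour=0, minute=0)
        elif t.hour in hours and t.minute in minutes:
            return t
        else:
            t += timedelta(minutes=1)
    raise ValueError(f"no run found for schedule {schedule!r}")


# Schedule and daemon start time taken from the -autostatus output above.
print(next_run("6 23 16 3 *", datetime(2023, 3, 23, 14, 21, 59)))  # 2024-03-16 23:06:00
```

Under this reading, the orachk.insight_client job fires only once a year. That explains why its next run is almost twelve months after the daemon started.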

The database background log kept reporting errors such as the following:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:13.064313+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_14914.trc:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

ORA-01017: invalid username/password; logon denied

2023-03-23T13:21:13.858875+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15038.trc:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:13.863078+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15038.trc:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

ORA-01017: invalid username/password; logon denied

2023-03-23T13:21:14.219992+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15090.trc:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:14.224496+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15090.trc:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

ORA-01017: invalid username/password; logon denied

2023-03-23T13:21:14.699104+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15128.trc:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:14.703345+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15128.trc:

ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7
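The timestamps in this excerpt (13:21:13 onward) fall inside the 13:20:42 to 13:29:20 run listed under "previous runs" in the -autostatus output. On a longer log, that overlap can be checked mechanically. Below is a minimal Python sketch. It assumes alert-log timestamp header lines in the ISO-8601 form 2023-03-23T13:21:13.064313+08:00. It also assumes the autostatus times are local times in the same zone (+08:00, CST). `errors_in_window` is a hypothetical helper, and the sample is a trimmed copy of the excerpt.

```python
import re
from collections import Counter
from datetime import datetime

# Alert-log timestamp header, e.g. 2023-03-23T13:21:13.064313+08:00
TS_LINE = re.compile(r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{6}[+-]\d{2}:\d{2}$")
ORA_CODE = re.compile(r"\bORA-(\d{5})\b", re.IGNORECASE)


def errors_in_window(log_text: str, start: datetime, end: datetime) -> Counter:
    """Count ORA- codes logged under timestamp headers that fall in [start, end].

    `start` and `end` are naive local times; the UTC offset on each alert-log
    timestamp is dropped so both sides are compared as local wall-clock time.
    """
    counts = Counter()
    current = None  # timestamp of the most recent header line
    for raw in log_text.splitlines():
        line = raw.strip()
        if TS_LINE.match(line):
            current = datetime.fromisoformat(line).replace(tzinfo=None)
        elif current is not None and start <= current <= end:
            for code in ORA_CODE.findall(line):
                counts["ORA-" + code] += 1
    return counts


sample = """\
2023-03-23T13:21:13.064313+08:00
Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_14914.trc:
ORA-17503: ksfdopn:2 Failed to open file +data/cis/password/pwdcis.263.1131705075
ORA-27300: OS system dependent operation:open failed with status: 13
ORA-27301: OS failure message: Permission denied
ORA-27302: failure occurred at: sskgmsmr_7
ORA-01017: invalid username/password; logon denied
"""
# Window of the scheduled orachk run reported by `orachk -autostatus`.
run_start = datetime(2023, 3, 23, 13, 20, 42)
run_end = datetime(2023, 3, 23, 13, 29, 20)
print(sorted(errors_in_window(sample, run_start, run_end)))
# ['ORA-01017', 'ORA-17503', 'ORA-27300', 'ORA-27301', 'ORA-27302']
```

Any error logged before the first timestamp header is ignored, because the sketch cannot tell when it occurred.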

The scheduled orachk runs were therefore stopped temporarily:

orachk -autostop

Both nodes were then restarted.

First, run a collection manually as the oracle user and check whether the Oracle alert log reports any errors.

[oracle@rac1 ~]$ orachk

clusterware stack is running from /oracle/app/19c/grid. is this the correct clusterware home?[y/n][y]

searching for running databases . . . . .

. .

list of running databases registered in ocr

1. cis

2. none of above

select databases from list for checking best practices. for multiple databases, select 1 for all or comma separated number like 1,2 etc [1-2][1].

. . . . . .

some audit checks might require root privileged data collection on . is sudo configured for oracle user to execute root_orachk.sh script?[y/n][n] y

either cluster verification utility pack (cvupack) does not exist at /opt/oracle.ahf/common/cvu or it is an old or invalid cvupack

checking cluster verification utility (cvu) version at crs home - /oracle/app/19c/grid

starting to run orachk in background on rac2 using socket

.

. . . .

. .

checking status of oracle software stack - clusterware, asm, rdbms on rac1

. . . . . .

. . . . . . . . . . . . .

-------------------------------------------------------------------------------------------------------

oracle stack status

-------------------------------------------------------------------------------------------------------

host name crs installed rdbms installed crs up asm up rdbms up db instance name

-------------------------------------------------------------------------------------------------------

rac1 yes yes yes yes yes cis1

-------------------------------------------------------------------------------------------------------

.

. . . . . .

.

.

*** checking best practice recommendations ( pass / warning / fail ) ***

.

============================================================

node name - rac1

============================================================

. . . . . .

collecting - asm disk groups

collecting - asm disk i/o stats

collecting - asm diskgroup attributes

collecting - asm disk partnership imbalance

collecting - asm diskgroup attributes

collecting - asm diskgroup usable free space

collecting - asm initialization parameters

collecting - active sessions load balance for cis database

collecting - archived destination status for cis database

collecting - cluster interconnect config for cis database

collecting - database archive destinations for cis database

collecting - database files for cis database

collecting - database instance settings for cis database

collecting - database parameters for cis database

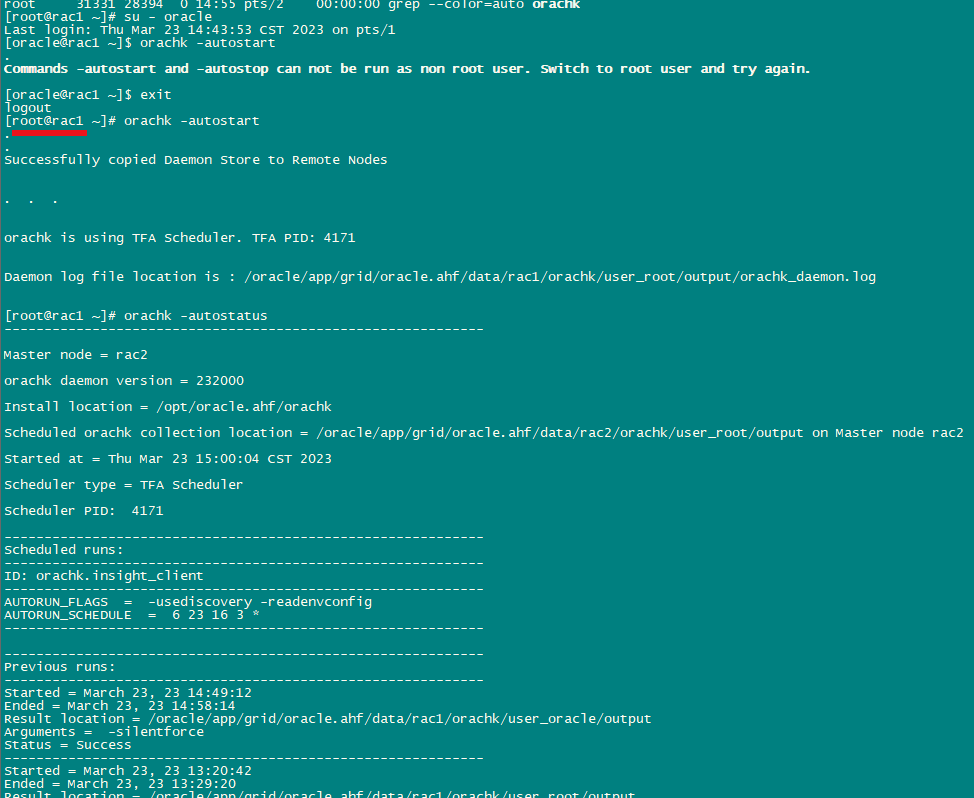

Next, enable the automatic runs as root, then run orachk manually and check whether the Oracle alert log reports any errors.

[oracle@rac1 ~]$ orachk -autostart

.

commands -autostart and -autostop can not be run as non root user. switch to root user and try again.

[oracle@rac1 ~]$ exit

logout

[root@rac1 ~]# orachk -autostart

.

.

successfully copied daemon store to remote nodes

. . .

orachk is using tfa scheduler. tfa pid: 4171

daemon log file location is : /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output/orachk_daemon.log

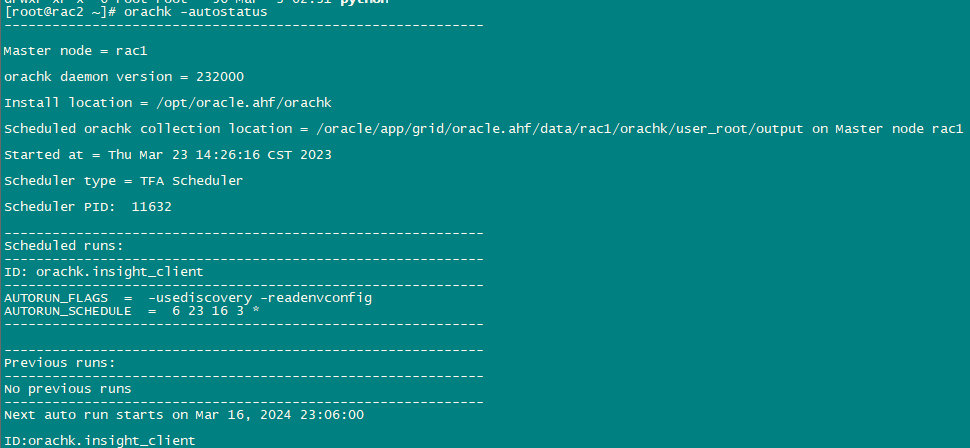

[root@rac1 ~]# orachk -autostatus

------------------------------------------------------------

master node = rac2

orachk daemon version = 232000

install location = /opt/oracle.ahf/orachk

scheduled orachk collection location = /oracle/app/grid/oracle.ahf/data/rac2/orachk/user_root/output on master node rac2

started at = thu mar 23 15:00:04 cst 2023

scheduler type = tfa scheduler

scheduler pid: 4171

------------------------------------------------------------

scheduled runs:

------------------------------------------------------------

id: orachk.insight_client

------------------------------------------------------------

autorun_flags = -usediscovery -readenvconfig

autorun_schedule = 6 23 16 3 *

------------------------------------------------------------

------------------------------------------------------------

previous runs:

------------------------------------------------------------

started = march 23, 23 14:49:12

ended = march 23, 23 14:58:14

result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_oracle/output

arguments = -silentforce

status = success

------------------------------------------------------------

started = march 23, 23 13:20:42

ended = march 23, 23 13:29:20

result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output

arguments = -silentforce

status = success

------------------------------------------------------------

next auto run starts on mar 16, 2024 23:06:00

id:orachk.insight_client

[root@rac1 ~]# orachk

clusterware stack is running from /oracle/app/19c/grid. is this the correct clusterware home?[y/n][y]

searching for running databases . . . . .

. .

list of running databases registered in ocr

1. cis

2. none of above

select databases from list for checking best practices. for multiple databases, select 1 for all or comma separated number like 1,2 etc [1-2][1].

. . . . . .

either cluster verification utility pack (cvupack) does not exist at /opt/oracle.ahf/common/cvu or it is an old or invalid cvupack

checking cluster verification utility (cvu) version at crs home - /oracle/app/19c/grid

starting to run orachk in background on rac2 using socket

.

. . . .

. .

checking status of oracle software stack - clusterware, asm, rdbms on rac1

. . . . . .

. . . . . . . . . . . . .

-------------------------------------------------------------------------------------------------------

oracle stack status

-------------------------------------------------------------------------------------------------------

host name crs installed rdbms installed crs up asm up rdbms up db instance name

-------------------------------------------------------------------------------------------------------

rac1 yes yes yes yes yes cis1

-------------------------------------------------------------------------------------------------------

.

. . . . . .

.

.

*** checking best practice recommendations ( pass / warning / fail ) ***

.

============================================================

node name - rac1

============================================================

. . . . . .

collecting - asm disk groups

collecting - asm disk i/o stats

collecting - asm diskgroup attributes

collecting - asm disk partnership imbalance

collecting - asm diskgroup attributes

collecting - asm diskgroup usable free space

collecting - asm initialization parameters

collecting - active sessions load balance for cis database

collecting - archived destination status for cis database

collecting - cluster interconnect config for cis database

collecting - database archive destinations for cis database

collecting - database files for cis database

collecting - database instance settings for cis database

collecting - database parameters for cis database

collecting - database properties for cis database

collecting - database registry for cis database

collecting - database sequences for cis database

collecting - database undocumented parameters for cis database

collecting - database undocumented parameters for cis database

collecting - database workload services for cis database

collecting - dataguard status for cis database

collecting - files not opened by asm

collecting - list of active logon and logoff triggers for cis database

collecting - log sequence numbers for cis database

collecting - percentage of asm disk imbalance

collecting - process for shipping redo to standby for cis database

collecting - redo log information for cis database

collecting - standby redo log creation status before switchover for cis database

collecting - /proc/cmdline

collecting - /proc/modules

collecting - cpu information

collecting - crs active version

collecting - crs oifcfg

collecting - crs software version

collecting - css reboot time

collecting - cluster interconnect (clusterware)

collecting - clusterware ocr healthcheck

collecting - clusterware resource status

collecting - disk i/o scheduler on linux

collecting - diskfree information

collecting - diskmount information

collecting - huge pages configuration

collecting - interconnect network card speed

collecting - kernel parameters

collecting - linux module config.

collecting - maximum number of semaphore sets on system

collecting - maximum number of semaphores on system

collecting - maximum number of semaphores per semaphore set

collecting - memory information

collecting - numa configuration

collecting - network interface configuration

collecting - network performance

collecting - network service switch

collecting - os packages

collecting - os version

collecting - operating system release information and kernel version

collecting - oracle executable attributes

collecting - patches for grid infrastructure

collecting - patches for rdbms home

collecting - rdbms and grid software owner uid across cluster

collecting - rdbms patch inventory

collecting - shared memory segments

collecting - table of file system defaults

collecting - voting disks (clusterware)

collecting - number of semaphore operations per semop system call

collecting - chmanalyzer to report potential operating system resources usage

[root@rac1 ~]# tfactl status

.-------------------------------------------------------------------------------------------.

| host | status of tfa | pid  | port | version    | build id             | inventory status |

+------+---------------+------+------+------------+----------------------+------------------+

| rac1 | running       | 4141 | 5000 | 23.2.0.0.0 | 23200020230302111526 | complete         |

| rac2 | running       | 4076 | 5000 | 23.2.0.0.0 | 23200020230302111526 | complete         |

'------+---------------+------+------+------------+----------------------+------------------'
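After an upgrade, a quick sanity check is that every node reports TFA as running, with the same version and build ID. Below is a minimal Python sketch that parses the pipe-delimited rows of the `tfactl status` table. The column names are taken from the output above and matched case-insensitively. `parse_rows` and `check_tfa_status` are hypothetical helpers and are not part of AHF.

```python
def parse_rows(table: str) -> list:
    """Return the cells of every '| a | b | ... |' row (header row included)."""
    rows = []
    for line in table.splitlines():
        line = line.strip()
        if line.startswith("|") and line.endswith("|"):
            rows.append([cell.strip() for cell in line[1:-1].split("|")])
    return rows


def check_tfa_status(table: str) -> list:
    """Return a list of problems; an empty list means every node looks consistent."""
    header, *nodes = parse_rows(table)
    col = {name.lower(): i for i, name in enumerate(header)}
    problems = []
    for row in nodes:
        host, state = row[col["host"]], row[col["status of tfa"]]
        if state.lower() != "running":
            problems.append(f"{host}: TFA is {state}")
    builds = {(row[col["version"]], row[col["build id"]]) for row in nodes}
    if len(builds) > 1:
        problems.append(f"nodes disagree on version/build ID: {sorted(builds)}")
    return problems


status = """
| host | status of tfa | pid  | port | version    | build id             | inventory status |
| rac1 | running       | 4141 | 5000 | 23.2.0.0.0 | 23200020230302111526 | complete         |
| rac2 | running       | 4076 | 5000 | 23.2.0.0.0 | 23200020230302111526 | complete         |
"""
print(check_tfa_status(status))  # []
```

Border and separator lines are skipped because they do not start with a pipe.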

[root@rac1 ~]# tfactl print config

.-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------.

| rac1 |

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ ------------

| configuration parameter | value |

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ ------------

| tfa version ( tfaversion ) | 23.2.0.0.0 |

| java version ( javaversion ) | 1.8 |

| public ip network ( publicip ) | true |

| repository current size (mb) ( currentsizemegabytes ) | 903 |

| repository maximum size (mb) ( maxsizemegabytes ) | 10240 |

| enables the execution of sqls throught sql agent process ( sqlagent ) | on |

| cluster event monitor ( clustereventmonitor ) | on |

| scanacfslog | off |

| automatic purging ( autopurge ) | on |

| internal search string ( internalsearchstring ) | on |

| trim files ( trimfiles ) | on |

| collecttrm | off |

| chmdataapi | on |

| chanotification ( chanotification ) | on |

| reloadcrsdataafterblackout | off |

| consolidate similar events (count shows number of events occurences) ( consolidate_events ) | off |

| managelogs auto purge ( managelogsautopurge ) | off |

| applin incidents automatic collections ( applin_incidents ) | off |

| alert log scan ( rtscan ) | on |

| generatezipmetadatajson | on |

| singlefileupload | off |

| auto sync certificates ( autosynccertificates ) | on |

| auto diagcollection ( autodiagcollect ) | on |

| smartprobclassifier | on |

| send cef metrics to oci monitoring ( defaultocimonitoring ) | off |

| generation of mini collections ( minicollection ) | on |

| disk usage monitor ( diskusagemon ) | on |

| send user events ( send_user_initiated_events ) | on |

| generation of auto collections from alarm definitions ( alarmautocollect ) | on |

| send audit event for collections ( cloudopslogcollection ) | off |

| analyze | off |

| generation of telemetry data ( telemetry ) | off |

| chaautocollect | off |

| queryapi | on |

| scandiskmon | off |

| file data collection ( inventory ) | on |

| isa data gathering ( collection.isa ) | on |

| skip event if it was flood controlled ( floodcontrol_events ) | off |

| automatic ahf insights report generation ( autoinsight ) | off |

| collectonsystemstate | on |

| chmretention | off |

| scanacfseventlog | off |

| debugips | off |

| collectalldirsbyfile | on |

| scanvarlog | off |

| restart_telemetry_adapter | off |

| telemetry adapter ( telemetry_adapter ) | off |

| public ip network ( publicip ) | on |

| flood control ( floodcontrol ) | on |

| odscan | on |

| start consuming data provided by sqlticker ( sqlticker ) | off |

| discovery ( discovery ) | on |

| indexinventory | on |

| send cef notifications ( customerdiagnosticsnotifications ) | off |

| granular tracing ( granulartracing ) | off |

| send cef metrics ( cloudopshealthmonitoring ) | off |

| minpossiblespaceforpurge | 1024 |

| disk.threshold | 90 |

| minimum space in mb required to run tfa. tfa will be stopped until at least this amount of space is available in the data directory (takes effect at next startup) ( minspacetoruntfa ) | 20 |

| mem.swapfree | 5120 |

| mem.util.samples | 4 |

| max number cells for diag collection ( maxcollectedcells ) | 14 |

| inventorythreadpoolsize | 1 |

| mem.swaptotal.samples | 2 |

| maxfileagetopurge | 1440 |

| mem.free | 20480 |

| actionrestartlimit | 30 |

| minimum free space to enable alert log scan (mb) ( minspaceforrtscan ) | 500 |

| cpu.io.samples | 30 |

| mem.util | 80 |

| waiting period before retry loading otto endpoint details (minutes) ( ottoendpointretrypolicy ) | 60 |

| maximum single zip file size (mb) ( maxzipsize ) | 2048 |

| time interval between consecutive disk usage snapshot(minutes) ( diskusagemoninterval ) | 60 |

| tfa isa purge thread delay (minutes) ( tfadbutlpurgethreaddelay ) | 60 |

| firstdiscovery | 1 |

| tfa ips pool size ( tfaipspoolsize ) | 5 |

| maximum file collection size (mb) ( maxfilecollectionsize ) | 1024 |

| time interval between consecutive managelogs auto purge(minutes) ( managelogsautopurgeinterval ) | 60 |

| arc.backupmissing.samples | 2 |

| cpu.util.samples | 2 |

| cpu.usr.samples | 2 |

| cpu.sys | 50 |

| flood control limit count ( fc.limit ) | 3 |

| flood control pause time (minutes) ( fc.pausetime ) | 120 |

| maximum number of tfa logs ( maxlogcount ) | 10 |

| db backup delay hours ( dbbackupdelayhours ) | 27 |

| cdb.backup.samples | 1 |

| arc.backupstatus | 1 |

| automatic purging frequency ( purgefrequency ) | 4 |

| tfa isa purge age (seconds) ( tfadbutlpurgeage ) | 2592000 |

| maximum collection size of core files (mb) ( maxcorecollectionsize ) | 500 |

| maximum compliance index size (mb) ( maxcompliancesize ) | 150 |

| cpu.util | 80 |

| maximum size in mb allowed for alert file inside ips zip ( ipsalertlogtrimsizemb ) | 20 |

| mem.swapfree.samples | 2 |

| cdb.backupstatus | 1 |

| mem.swaputl.samples | 2 |

| arc.backup.samples | 3 |

| unreachablenodetimeout | 3600 |

| flood control limit time (minutes) ( fc.limittime ) | 60 |

| mem.swaputl | 10 |

| mem.free.samples | 2 |

| maximum size of core file (mb) ( maxcorefilesize ) | 50 |

| disk.samples | 1 |

| cpu.sys.samples | 30 |

| cpu.usr | 98 |

| arc.backupmissing | 1 |

| cpu.io | 20 |

| archive backup delay minutes ( archbackupdelaymins ) | 40 |

| allowed sqlticker delay in minutes ( sqltickerdelay ) | 3 |

| inventorypurgethreadinterval | 720 |

| age of purging collections (hours) ( minfileagetopurge ) | 12 |

| cpu.idle.samples | 2 |

| unreachablenodesleeptime | 300 |

| cpu.idle | 20 |

| mem.swaptotal | 24 |

| tfa isa crs profile delay (minutes) ( tfadbutlcrsprofiledelay ) | 2 |

| frequency at which the fleet configurations will be updated ( cloudrefreshconfigrate ) | 60 |

| maximum compliance runs to be indexed ( maxcomplianceruns ) | 100 |

| cdb.backupmissing | 1 |

| cdb.backupmissing.samples | 2 |

| trim size ( trimsize ) | 488.28 kb |

| maximum size of tfa log (mb) ( maxlogsize ) | 52428800 |

| mintimeforautodiagcollection | 300 |

| skipscanthreshold | 100 |

| filecountinventoryswitch | 5000 |

| tfa isa purge mode ( tfadbutlpurgemode ) | simple |

| country | us |

| debug mask (hex) ( debugmask ) | 0x000000 |

| object store secure upload ( oss.secure.upload ) | true |

| rediscovery interval ( rediscoveryinterval ) | 30m |

| setting for acr redaction (none|sanitize|mask) ( redact ) | none |

| language | en |

| alertloglevel | all |

| baselogpath | error |

| encoding | utf-8 |

| lucene index recovery mode ( indexrecoverymode ) | recreate |

| automatic purge strategy ( purgestrategy ) | size |

| userloglevel | all |

| logs older than the time period will be auto purged(days[d]|hours[h]) ( managelogsautopurgepolicyage ) | 30d |

| isamode | enabled |

'------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ ------------'
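In the `tfactl print config` rows, the internal parameter name appears in parentheses at the end of the description, for example ( maxsizemegabytes ). Some rows show only the bare name. Below is a minimal Python sketch that turns the table into a dictionary keyed by parameter name, so that individual values can be checked, for example how full the TFA repository is. It assumes that row layout. `parse_tfa_config` is a hypothetical helper.

```python
import re

# A data row is "| label | value |"; the label may itself contain '|' characters,
# e.g. "(none|sanitize|mask)", so only the last two pipes delimit the value.
ROW = re.compile(r"^\|(?P<label>.*)\|(?P<value>[^|]*)\|$")
# The internal parameter name, when shown, is in parentheses at the end of the label.
KEY_IN_PARENS = re.compile(r"\(\s*([\w.]+)\s*\)$")


def parse_tfa_config(table: str) -> dict:
    """Map each configuration parameter name to its (string) value."""
    config = {}
    for line in table.splitlines():
        m = ROW.match(line.strip())
        if not m:
            continue  # borders, separators and the one-cell host title row
        label, value = m.group("label").strip(), m.group("value").strip()
        if label.lower() == "configuration parameter":
            continue  # column header row
        key = KEY_IN_PARENS.search(label)
        # Later duplicates (publicip appears twice above) overwrite earlier ones.
        config[key.group(1) if key else label] = value
    return config


sample = """
| rac1 |
| configuration parameter | value |
| repository current size (mb) ( currentsizemegabytes ) | 903 |
| repository maximum size (mb) ( maxsizemegabytes ) | 10240 |
| setting for acr redaction (none|sanitize|mask) ( redact ) | none |
| mem.swapfree | 5120 |
"""
cfg = parse_tfa_config(sample)
used = int(cfg["currentsizemegabytes"]) / int(cfg["maxsizemegabytes"])
print(f"TFA repository is {used:.1%} full")  # TFA repository is 8.8% full
```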

[root@rac1 ~]# tfactl toolstatus

running command tfactltoolstatus on rac2 ...

.------------------------------------------------------------------.

| tools status - host : rac2                                       |

+----------------------+--------------+--------------+-------------+

| tool type            | tool         | version      | status      |

+----------------------+--------------+--------------+-------------+

| ahf utilities        | alertsummary | 23.2.0       | deployed    |

|                      | calog        | 23.2.0       | deployed    |

|                      | dbglevel     | 23.2.0       | deployed    |

|                      | grep         | 23.2.0       | deployed    |

|                      | history      | 23.2.0       | deployed    |

|                      | ls           | 23.2.0       | deployed    |

|                      | managelogs   | 23.2.0       | deployed    |

|                      | menu         | 23.2.0       | deployed    |

|                      | orachk       | 23.2.0       | deployed    |

|                      | param        | 23.2.0       | deployed    |

|                      | ps           | 23.2.0       | deployed    |

|                      | pstack       | 23.2.0       | deployed    |

|                      | summary      | 23.2.0       | deployed    |

|                      | tail         | 23.2.0       | deployed    |

|                      | triage       | 23.2.0       | deployed    |

|                      | vi           | 23.2.0       | deployed    |

+----------------------+--------------+--------------+-------------+

| development tools    | oratop       | 14.1.2       | deployed    |

+----------------------+--------------+--------------+-------------+

| support tools bundle | darda        | 2.10.0.r6036 | deployed    |

|                      | oswbb        | 22.1.0ahf    | running     |

|                      | prw          | 12.1.13.11.4 | not running |

'----------------------+--------------+--------------+-------------'

note :-

deployed : installed and available - to be configured or run interactively.

not running : configured and available - currently turned off interactively.

running : configured and available.

.------------------------------------------------------------------.

| tools status - host : rac1                                       |

+----------------------+--------------+--------------+-------------+

| tool type            | tool         | version      | status      |

+----------------------+--------------+--------------+-------------+

| ahf utilities        | alertsummary | 23.2.0       | deployed    |

|                      | calog        | 23.2.0       | deployed    |

|                      | dbglevel     | 23.2.0       | deployed    |

|                      | grep         | 23.2.0       | deployed    |

|                      | history      | 23.2.0       | deployed    |

|                      | ls           | 23.2.0       | deployed    |

|                      | managelogs   | 23.2.0       | deployed    |

|                      | menu         | 23.2.0       | deployed    |

|                      | orachk       | 23.2.0       | deployed    |

|                      | param        | 23.2.0       | deployed    |

|                      | ps           | 23.2.0       | deployed    |

|                      | pstack       | 23.2.0       | deployed    |

|                      | summary      | 23.2.0       | deployed    |

|                      | tail         | 23.2.0       | deployed    |

|                      | triage       | 23.2.0       | deployed    |

|                      | vi           | 23.2.0       | deployed    |

+----------------------+--------------+--------------+-------------+

| development tools    | oratop       | 14.1.2       | deployed    |

+----------------------+--------------+--------------+-------------+

| support tools bundle | darda        | 2.10.0.r6036 | deployed    |

|                      | oswbb        | 22.1.0ahf    | running     |

|                      | prw          | 12.1.13.11.4 | not running |

'----------------------+--------------+--------------+-------------'

note :-

deployed : installed and available - to be configured or run interactively.

not running : configured and available - currently turned off interactively.

running : configured and available.
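In the `tfactl toolstatus` tables, the tool type appears only on the first row of each group. A script that reads them therefore has to carry that value forward. Below is a minimal Python sketch that lists the tools reported in a given status, for example the ones shown as "not running". It assumes the four-column layout above. `tools_by_status` is a hypothetical helper.

```python
def tools_by_status(table: str, wanted: str) -> list:
    """Return (tool type, tool, version) for every row whose status equals `wanted`."""
    results = []
    tool_type = ""
    for line in table.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue  # borders and separators
        cells = [c.strip() for c in line[1:-1].split("|")]
        if len(cells) != 4 or cells[1].lower() == "tool":
            continue  # one-cell "tools status - host" title row, or the header row
        if cells[0]:
            tool_type = cells[0]  # a new group starts; later rows leave this blank
        if cells[3].lower() == wanted.lower():
            results.append((tool_type, cells[1], cells[2]))
    return results


sample = """
| tools status - host : rac1 |
| tool type | tool | version | status |
| ahf utilities | alertsummary | 23.2.0 | deployed |
| | calog | 23.2.0 | deployed |
| development tools | oratop | 14.1.2 | deployed |
| support tools bundle | darda | 2.10.0.r6036 | deployed |
| | oswbb | 22.1.0ahf | running |
| | prw | 12.1.13.11.4 | not running |
"""
print(tools_by_status(sample, "not running"))
# [('support tools bundle', 'prw', '12.1.13.11.4')]
```

The status comparison is exact, so asking for "running" does not also return the "not running" rows.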

Reference for this issue:

Last modified: 2023-03-24 14:36:28


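[Copyright notice] This article is original content by a Motianlun (modb.pro) user. Any repost must credit the source (Motianlun), the article link, the author and other basic information; otherwise the author and Motianlun reserve the right to pursue liability. If you find content on Motianlun that you suspect of plagiarism or infringement, please report it by email to contact@modb.pro with supporting evidence; once verified, Motianlun will remove the content immediately.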