如今,在oracle数据库上有数t大小的分区是比较常见的。如果你还没遇到,那你也正朝着这个方向在前进。所以,你需要了解如何维护大表的统计信息。我将会通过如下几个贴子来涵盖该问题:

这里有一些额外的细节,还有一些在早前优化器博客中有所涉及的地方,所以您也可以参考一下。

概念

分区表上都有哪些统计信息?

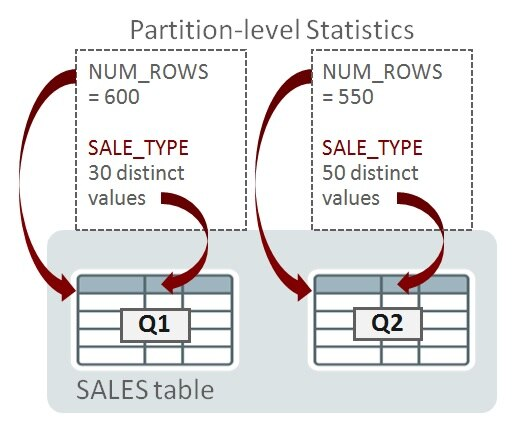

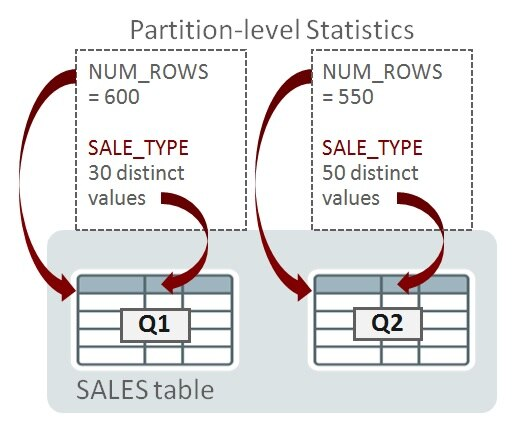

考虑一个名为sales的分区表,其上有对应财务季度1和季度2(q1和q2)的日期范围分区。分区在列quarter上。数据库为单独的分区收集了统计信息,以便可以评估被裁剪到单个分区的查询的基数。这被称之为分区级统计信息。为了用一个示例来演示,我计划只考虑两个统计信息,而忽略其它。q1和q2中分别有600和550行。sale_type列上的唯一值数量(ndvs)在q1中是30,q2中是50:

当查询被编译时,如果优化器确定它只需访问单个分区(比如,通过分区裁剪),那么分区级的统计信息就足够帮助确定执行计划。以下是一个只访问q1的查询:

select sum(amount) from sales where quarter = 'q1' and sale_type = 'direct';

如果优化器在编译时,确定查询会潜在访问一个以上的分区,那么单独的分区统计信息就不够了。在接下来的例子中,查询需要访问一个以上的分区:

select sum(amount) from sales

where sale_type = 'direct';

对于可以访问多个分区的查询,优化器必须考虑表级的统计信息。这些统计信息称为全局统计信息。

你知道,oracle数据库可以进一步划分分区为子分区;即复合分区。当下,我将只讨论分区,后面,我会谈及子分区统计信息。

oracle在分区和表级是如何管理统计信息的?

目前,我们已经创建了所需的分区和表级的统计信息。oracle是如何收集它们的?表级统计信息可以从分区级统计信息上推导出来吗?

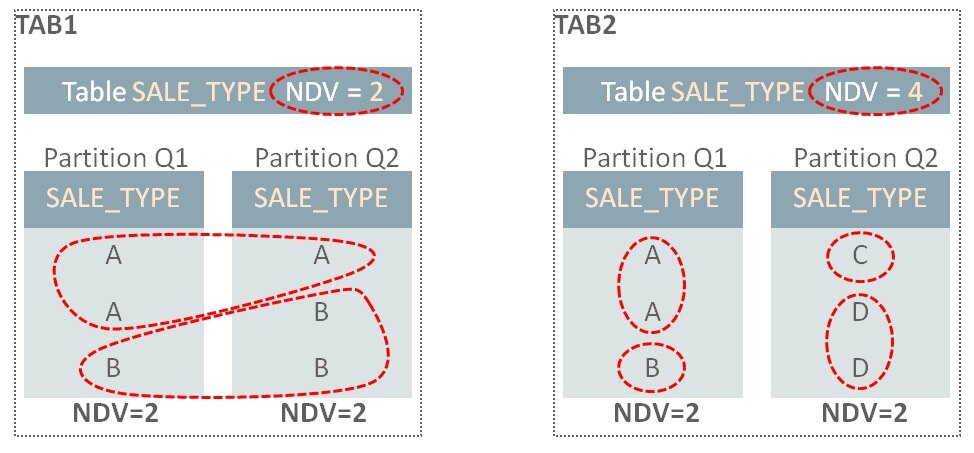

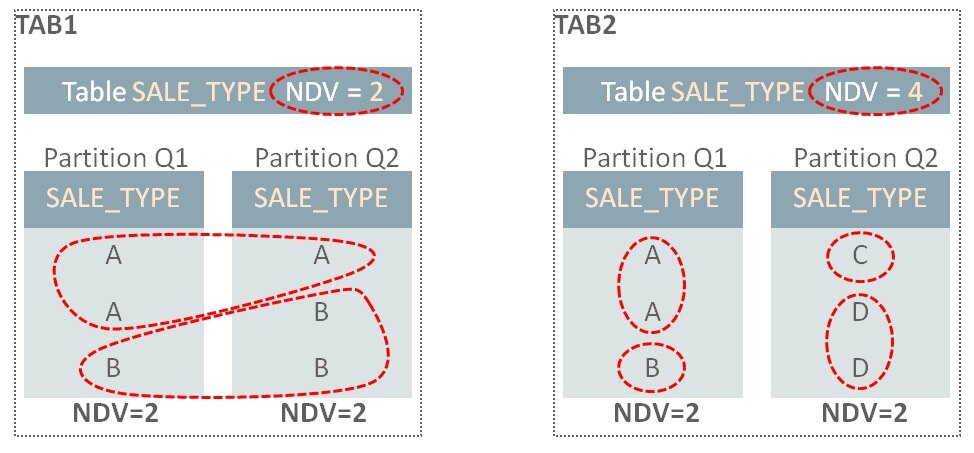

我们可以非常容易地从单独分区中推导出全局的num_rows;简单的合计各个分区的num_rows(比如示例中的:600 550=1150)即可。不幸的是,对于唯一值数量(ndv要)就不是那么简单了。在上面的示例中,在全局层级上,sale_type的ndv(55),并不能通过在分区层级上的值30和50来计算。信息是不充分的:基础值30和50并没有告诉我们sale_type在q1和q2中的重叠情况。设想两张相同的表,tab1和tab2在q1和q2分区上包含有不同的sale_type值:

在tab1中,q1分区上sale_type有值a和值b,因此nds是2.q2上有同样的值a和值b,所以整个表的ndv是2。而在tab2中,在q1和q2中并没有重叠的值,因此,尽管分区上的ndv也是2,但整个ndv是4。

为了计算全局ndv值,数据库需要检测所有表分区(假设我们并没有其它信息可用)。当表变大后,这将是非常耗时,特别是如果有成百上千个分区时。

来自synopses的拯救

oracle数据库是如何解决这个问题的呢?可以配置表在统计信息收集过程中,为每一个分区收集额外的信息。每个表分区拥有一个称之为synopsis的新的数据结构。汇集到一起,称之为synopses。

如果某个分区的数据变化了,重新计算全局ndv值时,不需要读取所有其它分区的内容。在下面的例子中,q2中的数据已经发生了变化(星标表示变化发生的位置)。当重新收集统计信息时,因为可以用q1和q2的synopses所包含的信息来替代,所以,不需要读取q1分区中的内容:

synopses允许数据库以可度量的方式,精准地维护表的统计信息:当表尺寸增长和分区数量增加时,这个功能所带来的性能收益会愈加明显。

synopses 的存储

synopses由数据库自动维护。有关每个表分区中存储的数据的附加信息,保存在sysaux表空间中。对于有较多的列和高ndv值的表,其数据量可能会变得很大,因此,应该对sysaux进行空间使用率的监控。统计信息收集过程必须维护synopsis信息,因而这些操作会带来额外的性能开销。我会在贴子3中继续讨论这个话题。

陈旧度与dml变化

如果统计信息没有被周期性的收集,并且库中的数据总是在变化中,那么统计信息会过期并且潜在地变为陈旧和失准。为了产生好的sql执行计划,统计信息需要精准,因而数据库必须检测到何时他们会陈旧。通过跟踪表、分区和子分区上发生的insert、update和delete这些dml操作的行数来做到这一点。一旦dml操作的行数超过了特定的阈值,则表,分区和子分区的统计信息状态就变为陈旧。

默认情况下,增量维护不会使用陈旧状态来决定何时更新统计信息。该场景在早前有关oracle database 11g的中有所介绍。哪怕分区或子分区只面临单行的dml操作,synopsis也会给予适当的更新,并从synopses中重新计算全局统计信息。该行为在oracle database 12c可以被改变,允许你使用陈旧度阈值来决定何时重新计算增量统计信息。这一点,在下面的“陈旧度与dml阈值”部分介绍

实现

启用synopses

为了启用synopses的创建,表必须被配置为使用增量维护。该功能通过使用dbms_stats中的,称之为’incremental’的偏好参数来切换。比如:

exec dbms_stats.set_table_prefs(null,'sales','incremental','true')

增量维护已启用的检查

可以使用下面的代码来检查 dbms_stats的偏好参数值:

select dbms_stats.get_prefs(pname=>'incremental',

tabname=>'sales')

from dual;

陈旧度和dml阈值

如上所述,当数据上产生的变化量超过一定的值时,优化器统计信息被认为是陈旧的。这个值被表示为表、分区或子分区中行的变化百分比,并通过dbms_stats中称为stale_percent的偏好参数来设置。陈旧百分比的默认值是10。例如,一个包括100行的分区,如果被更新、增加或删除超过10行,就会被认为陈旧。

以下是设置和观察该偏好值的示例:

exec dbms_stats.set_table_prefs(null, 'sales', 'stale_percent','5')

select dbms_stats.get_prefs('stale_percent',null,'sales') from dual;

检查表或分区是否已被标注记为陈旧也很简单:

select partition_name,

subpartition_name,

stale_stats /* yes or no */

from dba_tab_statistics

where table_name = 'sales';

数据库跟踪dml操作,来度量何时数据的变化已经超出了表上的陈旧度阈值。如果你想观察这个信息,请记住统计信息是概略的,是周期性的被刷了到磁盘上。如果你想在测试期间看到即时的变化,你需要手动刷新它们(需要拥有’analyze any’的系统权限), 类似这样:

exec dbms_stats.flush_database_monitoring_info

select *

from dba_tab_modifications

where table_name = 'sales';

请注意,如果你在oracle database 11g中使用增量统计信息,在分区或子分区上的单行的dml操作,也会导致统计信息的刷新–即便这并不会使其陈旧。换句话说,我们可能在包含1百万行的分区上更新了1行。分区并不会被标记为陈旧(如果我们假设陈旧度阈值为10%),但是最新的统计信息还是会被收集。oracle database 12c默认情况下是同样的行为,但是该版本给了你一个选项,允许在分区或子分区上发生多行变化时,才会增量刷新。你可以通过改变dbms_stats的偏好参数incremental_staleness从默认值(null)为’use_stale_percent’来启用。 例如:

exec dbms_stats.set_global_prefs('incremental_staleness', 'use_stale_percent')

一旦设置了这一个偏好参数,stale_percent值将被用来定义增量维护中的dml变化阈值。换句话说,如果分区的dml变化量低于stale_percent阈值,则不会收集该分区的统计信息。

锁定统计信息

只要被锁定的分区上没有dml发生,增量统计信息就可以与分区统计信息锁定一同工作。然而,如果在锁定的分区上会发生dml,那么我们将不再能保证来自于锁定统计信息的全局统计信息是准确的。故而,收集全局统计信息时,数据库会退回到非增量的方法。但是,如果出于某种原因,你必须锁定分区级的统计信息,还仍要使用增量统计信息收集的优点,你可以设置偏好参数’incremental_staleness’包含‘use_locked_stats’。一旦设置了,只要锁定的分区/子分区统计信息有synopses,将无视dml变化,也不会认为是陈旧的

注:‘incremental_staleness’可以接受多个值,比如:

begin

dbms_stats.set_table_prefs(

ownname=>null,

tabname=>'sales',

pname =>'incremental_staleness',

pvalue=>'use_stale_percent, use_locked_stats');

end;

/

陈旧度的检查

你可以非常简单地使用统计信息视图来检查表/分区/子分区的陈旧度,例如:

exec dbms_stats.flush_database_monitoring_info

select partition_name,subpartition_name,stale_stats

from dba_tab_statistics

where table_name = 'sales'

order by partition_position, subpartition_position;

数据库监控数据被用于识别陈旧的统计信息,故而,如果要进行测试,并且希望立即了解数据变化对陈旧状态的影响,你需要调用flush_database_monitoring_info。

synopses的检查

oracle支持网站维护的文档 中,包含有列出带有synopses的对象的查询语句。

收集统计信息

如何在使用增量维护的表上收集其统计信息呢?简单地说,使用下面的存储过程,让oracle database确定如何做最好:

exec dbms_stats.gather_table_stats(null,'sales')

或者

exec dbms_stats.gather_schema_stats(…)

或者更好的是

exec dbms_stats.gather_database_stats()

对于dbms_stats.gather…类存储过程,你必须设置estimate_percent为auto_sample_size。由于这是默认值,所以,这也是上面的示例中使用的值,除非你修改了它。如果你为estimate_percent使用了一个百分比值,增量维护将不会生效。

在数据无变化时重新收集统计信息

你可能时不常发现没有任何dml变化的分区的统计信息被收集了。为什么呢?有这样一些原因:

1、 统计信息被解锁了。

2、 表列的使用发生了改变 (下面会解释)。

3、 添加了新列。这包括因创建诸如列组,列表达式的扩展统计信息而创建的隐藏列。

4、 synopses未和列统计信息同步。这可能是因为你在t1时刻,以增量模式收集了统计信息,然后你关闭了增量并重新收集统计信息在t2时刻。synopses的时间戳t1就与基本列统计信息中的时间戳2失去了同步。

5、 不太常见的情况是使用delete_column_statistics删除列统计信息。

原因2有一些隐晦。数据库会跟踪查询谓词中出现列是如何使用,并存储这一信息到数据字典 (). 数据库使用该信息帮助找出会从直方图 受益,改进查询基数的评估等。其结果会影响sql执行计划。如果列的使用改变了,而且你设置method_opt 带有 'size auto’值,那么数据库可能选择重新收集统计信息并创建一个新的直方图。这种情况很少见,但我知道有些客户,会通过指定收集全部(列)的直方图来防止这种情况发生的可能:

dbms_stats.set_table_prefs

(null, 'sales',

'method_opt','for all columns size 1 for columns size 254 cust_id');

dbms_stats.set_table_prefs

(null, 'sales',

'method_opt','for all columns size 1');

如果你是这么做的,那么你应该请求并应用补丁 。 当你通过dbms_stats的偏好参数来设置method_opt时,你需要确保直方图的检查操作是可靠的 (否则分区统计可能会非常频繁的重新收集)。如果你对相关表并不是使用偏好参数来设置的,而是使用全局dbms_stats 的method_opt设置来关闭直方图的话 ,则不需要该补丁。

本地分区索引统计信息

对于本地分区索引统计信息,我们首先检查对应表分区或子分区。如果表分区(子分区)有新的统计信息并且索引的统计信息已经在表分区(子分区)统计信息收集之后收集了,那么应该认为他们是新的,是不需要重新收集的。

复合分区表

子分区级上的统计信息被数据库收集并存储,但要注意,synopses仅在分区级创建。这意味着如果子分区的统计信息因数据变化而陈旧,那么父分区统计信息(和synopses)将会通过检查其下所有子分区的方式来刷新。数据库只会在子分区级别,重新收集那些陈旧的子分区。

更多信息

在 中有关此主题的更多信息。

原文链接:

原文标题:

efficient statistics maintenance for partitioned tables using incremental statistics – part 1

原文作者:nigel bayliss

原文内容:

efficient statistics maintenance for partitioned tables using incremental statistics – part 1

nigel bayliss

product manager

introduction

it’s common to have multi-terabyte partitioned tables in an oracle database these days. if you are not there yet but you’re heading that way, then you need to know about how to maintain statistics on large tables. i will cover this in a series of posts as follows:

- part 1 (this post) – concepts and implementation

- – incremental statistics and partition exchange loading

- – new to oracle database 12c release 2

there are some additional details and some overlap with an earlier optimizer blog post, so you should check that out too.

consider a partitioned table called sales that has date-range partitions for financial quarters 1 and 2 (q1 and q2). the partition key is on the column quarter. the database gathers statistics for individual partitions so that cardinality can be estimated for queries that are pruned to a single partition. these are called partition-level statistics. to illustrate this with an example, i’m going to consider just a couple of statistics and ignore the others. the number of rows in q1 and q2 are 600 and 550 respectively. the number of distinct values (ndvs) for sale_type in q1 is 30 and q2 it’s 50:

partition-level statistics

when a query is compiled, if the oracle optimizer determines that it will need to access a single partition (using partition pruning, for example) then the statistics at the partition-level will be enough to help determine the execution plan. here’s a query that reads q1 only:

select sum(amount) from sales where quarter = 'q1' and sale_type = 'direct';

if the optimizer determines at compile-time that a query has the potential to access more than one partition, then individual partition statistics are not enough. in the next example, the query needs to access more than one partition:

select sum(amount) from sales

where sale_type = 'direct';

for queries that might access multiple partitions, the optimizer must consider statistics at the table level. these statistics are known as global-level statistics:

global-level statistics

you will know that the oracle database can further subdivide partitions into subpartitions; a feature known as composite partitioning. for now i’m only going to talk about partitions, and later on i’ll say something about subpartition statistics.

now that we have established the need for both partition and table level statistics, how does oracle collect them? can the table-level statistics be derived from partition-level statistics?

it is very easy to derive num_rows at the global level from individual partitions; simply sum num_rows for each partition (e.g. 600 550=1150 in the example). unfortunately, it isn’t that simple for the number of distinct values (denoted as ndvs). in the example above, the ndv for sale_type at the global level (55) can’t be calculated using the values 30 and 50 at the partition-level. there’s insufficient information: the basic values 30 and 50 don’t tell us anything about the overlap of sale_type values in q1 and q2. consider two identical tables, tab1 and tab2 that contain difference sale_type values in q1 and q2 partitions:

ndv

in tab1, the q1 partition has sale_type values a and b, so the ndv is 2. q2 has the same values, a and b, so the overall table ndv is 2. in the tab2 case, there is no overlap in values between q1 and q1, so even though the partition ndv values are also 2, the overall ndv is 4.

to calculate the global-level ndv value, the database must examine all table partitions (assuming that we don’t have some additional information at our disposal). this can become very time-consuming as tables grow large, especially if there are hundreds or thousands of partitions.

how does the oracle database resolve this problem? tables can be configured to instruct the statistics gathering procedures to store additional information about each individual partition. each table partition has a new data structure called a synopsis. collectively, these structures are called synopses.

if data changes in one partition, there is no need to read to contents of all other partitions when recalculating the global-level ndv values. in the following example, change has been made to the data in q2 (the star symbols indicate where change is occurring). when statistics are re-gathered, there is no need to read the contents of the q1 partition because the information contained in the q1 and q2 synopses can be used instead:

gathering statistics

synopses allow the database to maintain accurate table statistics in a scalable manner: as tables grow in size and the number of partitions increases, the performance benefit of this feature will become more apparent.

synopses are maintained automatically by the database. they store additional information in the sysaux tablespace about the data stored in every table partition. for tables with large numbers of columns and high ndvs, the amount of data can become large so space usage in sysaux should be monitored. statistics gathering procedures must maintain the synopsis information so this can add a performance overhead for some operations. i will return to this topic in part 3 of this series.

if statistics are not gathered periodically and if the data in the database changes over time, then statistics will be out of date and potentially stale and inaccurate. statistics need to be accurate to generate good sql execution plans so the database must detect when they are stale. it does this by tracking the number of dml row insert, update and delete operations for tables, partitions and sub-partitions. once the number of dml operations exceeds a certain threshold the statistics status for the table, partition or sub-partition is changed to stale.

by default, incremental maintenance does not use the staleness status to decide when to update statistics. this scenario is covered in an for oracle database 11g. if a partition or sub-partition is subject to even a single dml operation, statistics will be re-gathered, the appropriate synopsis will be updated and the global-level statistics will be re-calculated from the synopses. this behavior can be changed in oracle database 12c, allowing you to use the staleness threshold to define when incremental statistics will be re-calculated. this is covered in staleness and dml thresholds, below.

to enable the creation of synopses, a table must be configured to use incremental maintenance. this feature is switched on using a dbms_stats preference called ‘incremental’. for example:

exec dbms_stats.set_table_prefs(null,'sales','incremental','true')

the value of the dbms_stats preference can be checked as follows:

select dbms_stats.get_prefs(pname=>'incremental',

tabname=>'sales')

from dual;

as mentioned above, optimizer statistics are considered stale when the number of changes made to data exceeds a certain threshold. this threshold is expressed as a percentage of row changes for a table, partition or subpartition and is set using a dbms_stats preference called stale_percent. the default value for stale percent is 10 so, for example, a partition containing 100 rows would be marked stale if more than 10 rows are updated, added or deleted. here is an example of setting and inspecting the preference:

exec dbms_stats.set_table_prefs(null, 'sales', 'stale_percent','5')

select dbms_stats.get_prefs('stale_percent',null,'sales') from dual;

it is easy to check if a table or partition has been marked as stale:

select partition_name,

subpartition_name,

stale_stats /* yes or no */

from dba_tab_statistics

where table_name = 'sales';

the database tracks dml operations to measure when data change has caused a table to exceed its staleness threshold. if you want to take a look at this information, bear in mind that the statistics are approximate and they are autmatically flushed to disk periodically. if you want to see the figures change immediately during your tests then you will need to flush them manually (you must have ‘analyze any’ system privilege), like this:

exec dbms_stats.flush_database_monitoring_info

select *

from dba_tab_modifications

where table_name = 'sales';

remember that if you are using incremental statistics in oracle database 11g, a single dml operation on a partition or sub-partition will make it a target for a statistics refresh - even if it is not marked stale. in other words, we might update one row in a partition containing 1 million rows. the partition won’t be marked state (if we assume a 10% staleness threshold) but fresh statistics will be gathered. oracle database 12c exhibits the same behavior by default, but this release gives you the option to allow multiple dml changes to occur against a partition or sub-partition before it is a target for incremental refresh. you can enable this behavior by changing the dbms_stats preference incremental_staleness from its default value (null) to ‘use_stale_percent’. for example:

exec dbms_stats.set_global_prefs('incremental_staleness', 'use_stale_percent')

once this preference is set, a table’s stale_percent value will be used to define the threshold of dml change in the context of incremental maintenance. in other words, statistics will not be re-gathered for a partition if the number of dml changes is below the stale_percent threshold.

incremental statistics does work with locked partitions statistics as long as no dml occurs on the locked partitions. however, if dml does occurs on the locked partitions then we can no longer guarantee that the global statistics built from the locked statistics will be accurate so the database will fall back to using the non-incremental approach when gathering global statistics. however, if for some reason you must lock the partition level statistics and still want to take advantage of incremental statistics gathering, you can set the ‘incremental_staleness’ preference to include ‘use_locked_stats’. once set, the locked partitions/subpartitions stats are not considered as stale as long as they have synopses, regardless of dml changes.

note that ‘incremental_staleness’ accepts multiple values, such as:

begin

dbms_stats.set_table_prefs(

ownname=>null,

tabname=>'sales',

pname =>'incremental_staleness',

pvalue=>'use_stale_percent, use_locked_stats');

end;

/

you can check for table/partition/subpartition staleness very easily using the statistics views. for example:

exec dbms_stats.flush_database_monitoring_info

select partition_name,subpartition_name,stale_stats

from dba_tab_statistics

where table_name = 'sales'

order by partition_position, subpartition_position;

database monitoring information is used identify stale statistics, so you’ll need to call flush_database_monitoring_info if you’re testing this out and you want to see immediately how the staleness status is affected by data change.

oracle support maintains which includes a query that lists objects with synopses.

how do you gather statistics on a table using incremental maintenance? keep things simple! let the oracle database work out how best to do it. use these procedures:

exec dbms_stats.gather_table_stats(null,'sales')

or exec dbms_stats.gather_schema_stats(…)

or, even better exec dbms_stats.gather_database_stats()

for the dbms_stats.gather… procedures you must use estimate_percent set to auto_sample_size. since this is the default, then that is what will be used in the examples above unless you have overriden it. if you use a percentage value for estimate_percent, incremental maintenance will not kick in.

from time-to-time you might notice that statistics are gathered on partitions that have not been subject to any dml changes. why is this? there are a number of reasons:

1. statistics have been unlocked.

2. table column usage has changed (this is explained below).

3. new columns are added. this includes hidden columns created from statistics extensions such as column groups, column expressions.

4. synopses are not in sync with the column statistics. it is possible that you have gathered statistics in incremental mode at time t1. then you disable incremental and regather statistics at time t2. then the synopses’ timestamp t1 is out of sync with the basic column statistics’ timestamp t2.

5. unusual cases such as column statistics have been deleted using delete_column_statistics.

bullet point “2” has some implications. the database tracks how columns are used in query predicates and stores this information in the data dictionary (). it uses this information to help it figure out which columns will benefit from a to improve query cardinality estimates and, as a result, improve sql execution plans. if column usage changes and you are using method_opt with ‘size auto’, then the database might choose to re-gather statistics and create a new histogram. it will be rare event and will eventually cease, but i know that some customers like to prevent this possibility by specifying histograms fully:

dbms_stats.set_table_prefs

(null, 'sales',

'method_opt','for all columns size 1 for columns size 254 cust_id');

dbms_stats.set_table_prefs

(null, 'sales',

'method_opt','for all columns size 1');

if you do this, then you should request and apply patch for your platform. this is necessary to ensure that the histogram check operates reliably when method_opt is set via a dbms_stats table preference (otherwise partition statistics may be regathered too frequently).the patch is not required if you are not using a table preference for the relevant table, but have instead disabled histograms using a global dbms_stats method_opt setting.

for locally partitioned index statistics, we first check their corresponding table partitions (or subpartitions). if the table (sub)partitions have fresh statistics and the index statistics have been gathered after the table (sub)partition-level statistics, then they are considered fresh and their statistics are not regathered.

statistics at the subpartition level are gathered and stored by the database, but note that synopses are created at the partition level only. this means that if the statistics for a subpartition become stale due to data changes, then the statistics (and synopsis) for the parent partition will be refreshed by examining all of its subpartitions. the database only regathers subpartition-level statistics on subpartitions that are stale.

there is more on this topic in the .