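The two hosts keep the Oracle database on a shared disk, and pcs provides automatic active/standby failover (Oracle HA). If either host goes down, the other one takes over automatically and quickly, so the business keeps running with minimal interruption.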

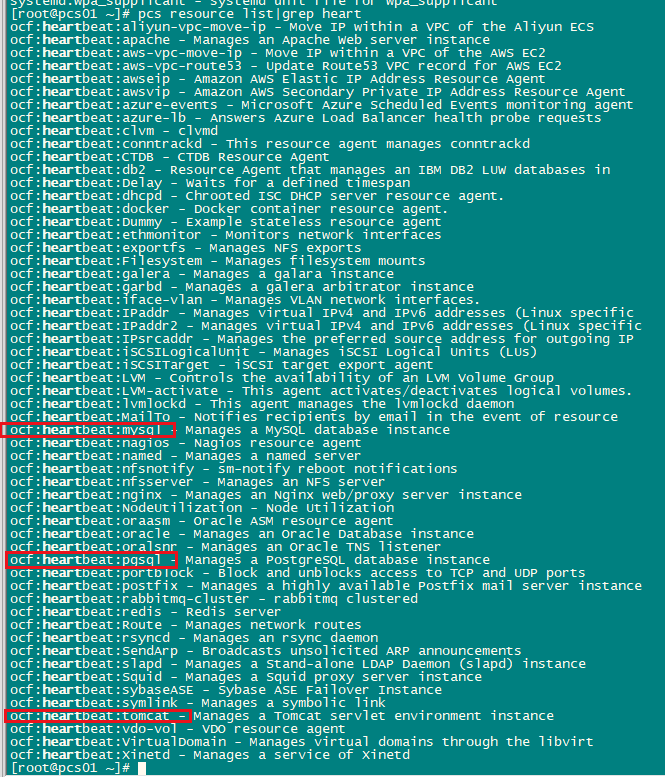

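(You no longer need complex HA software such as Linux Cluster or RoseHA; pcs is sufficient for typical HA requirements. Although this article covers Oracle HA, the same approach works for other databases such as MySQL or PostgreSQL and for other applications. The key points are that both hosts have identical users and environment variables, and that the database or application is installed on shared storage.)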

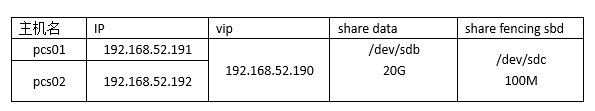

Operating system: Oracle Linux 7.9

root password: secure_password

hacluster password: secure_password

Database version: 11.2.0.4

Database name: orcl

system/sys password: oracle

The shared disk /dev/sdb is set up with LVM as logical volume /dev/vg01/lvol01. This volume carries the XFS file system /u01, where the Oracle database is installed.

[root@pcs01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.52.191 pcs01

192.168.52.192 pcs02

systemctl disable firewalld

systemctl stop firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0

Time synchronization:

Install and enable chrony:

yum install -y chrony

systemctl enable chronyd

systemctl start chronyd

systemctl status chronyd

Add the time server (replace time_server_ip with its address):

vi /etc/chrony.conf

server time_server_ip iburst

Restart chronyd:

systemctl restart chronyd.service

Check that synchronization works:

chronyc sources -v

timedatectl
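To check the output of chronyc sources in a script, the sketch below looks for a source line whose second character is *. chronyc uses that marker for the source chronyd is currently synchronised to. The helper name chrony_synced is hypothetical, and the sketch assumes the default chronyc sources layout (mode character followed by state character).

```bash
#!/usr/bin/env bash
# chrony_synced (hypothetical helper): reads `chronyc sources` output on
# stdin and succeeds if any line starts with a mode character (^ server,
# = peer, # local reference clock) followed by '*', i.e. the source
# chronyd is currently synchronised to.
chrony_synced() {
  grep -Eq '^[#=^][*]'
}

# Usage on a node:
#   chronyc sources | chrony_synced && echo "time is synchronised"
```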

3.1 Install the pcs software (all nodes):

pcs is the management tool for the CRM, pacemaker is the cluster resource manager (CRM), and corosync is the cluster messaging layer.

yum -y install pcs

For an offline installation, configure a local yum repository that points at the installation media mounted on /mnt:

[redhat7.9]

name = redhat 7.9

baseurl=file:///mnt

gpgcheck=0

enabled=1

[highavailability]

name=highavailability

baseurl=file:///mnt/addons/HighAvailability

gpgcheck=0

enabled=1

[resilientstorage]

name=resilientstorage

baseurl=file:///mnt/addons/ResilientStorage

gpgcheck=0

enabled=1

systemctl start pcsd.service

systemctl enable pcsd.service

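3.2 Configure the cluster user and node authentication:

Set the hacluster password (all nodes):

echo secure_password | passwd --stdin hacluster

Authenticate the nodes (node 1 only):

pcs cluster auth pcs01 pcs02 -u hacluster -p secure_password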

3.3 Create the cluster (node 1):

pcs cluster setup --start --name cluster01 pcs01 pcs02

Check the status. The STONITH warning can be ignored for now.

pcs status

Enable the cluster to start at boot:

pcs cluster enable --all

pcs cluster status

pcs property set stonith-enabled=false

pcs status

3.4 Create the virtual service IP (node 1):

pcs resource create virtualip IPaddr2 ip=192.168.52.190 cidr_netmask=24 nic=eth0 op monitor interval=10s

pcs status

Confirm that the IP is configured and reachable. Also check the actual NIC name used in nic= (for example eth0 or ens32):

ip a

ping -c 2 192.168.52.190

ip addr show dev ens32

Test IP failover:
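To check on which node the virtual IP is currently configured, you can match the one-line output of ip. This is a minimal sketch; the helper name has_ipv4 is hypothetical. It relies on ip -o -4 addr show printing the interface address as field 4 in ADDR/PREFIX form.

```bash
#!/usr/bin/env bash
# has_ipv4 (hypothetical helper): reads `ip -o -4 addr show` output on
# stdin and succeeds if the given address is configured on any interface.
# In one-line (-o) mode the 3rd field is "inet" and the 4th is ADDR/PREFIX.
has_ipv4() {
  awk -v ip="$1" '
    $3 == "inet" { split($4, a, "/"); if (a[1] == ip) found = 1 }
    END { exit !found }
  '
}

# Usage on either node:
#   ip -o -4 addr show | has_ipv4 192.168.52.190 && echo "VIP is up on this node"
```

The function compares the address exactly, so 192.168.52.19 does not match 192.168.52.190.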

pcs resource move virtualip pcs02

3.5创建共享磁盘卷组(1节点)

vgcreate vg01 /dev/sdb

vgdisplay|grep free

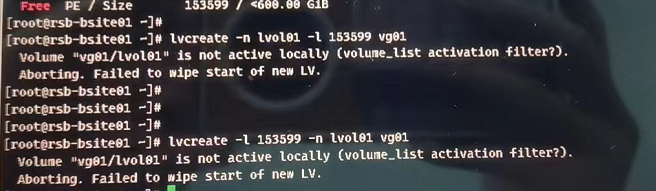

lvcreate -n lvol01 -l 2598 vg01(根据柱面数划逻辑卷大小)

lvcreate -n lvol01 -l 9g vg01(直接分配大小,存在浪费空间问题)
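You can extract the Free PE number passed to -l from the vgdisplay output in a script. This is a minimal sketch; the helper name free_pe is hypothetical, and it assumes the English "Free  PE / Size" line of vgdisplay. LVM can also allocate all free extents directly with lvcreate -n lvol01 -l 100%FREE vg01.

```bash
#!/usr/bin/env bash
# free_pe (hypothetical helper): prints the number of free physical
# extents from `vgdisplay <vg>` output read on stdin. The line looks like
#   "  Free  PE / Size       2598 / <10.15 GiB"
# so the extent count is the 5th whitespace-separated field.
free_pe() {
  awk '/Free +PE/ { print $5 }'
}

# Usage:
#   lvcreate -n lvol01 -l "$(vgdisplay vg01 | free_pe)" vg01
```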

mkfs -t xfs /dev/vg01/lvol01

mkdir /u01

systemctl daemon-reload

mount -t xfs /dev/vg01/lvol01 /u01

df -Th /u01

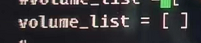

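Edit /etc/lvm/lvm.conf and find volume_list =. Set it to volume_list = []. In the end you may need volume_list = [ "ol" ], where ol is the local VG: use vgs to list the volume groups and leave the shared one out.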

egrep -v "#|^$" /etc/lvm/lvm.conf

lvmconf --enable-halvm --services --startstopservices
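The volume_list line above should list every local VG and omit the shared one. A minimal sketch that builds the line from the vgs output; the helper name build_volume_list is hypothetical, and vg01 is passed as the shared VG.

```bash
#!/usr/bin/env bash
# build_volume_list (hypothetical helper): reads VG names (one per line,
# e.g. from `vgs --noheadings -o vg_name`) on stdin and prints an lvm.conf
# volume_list line that activates every VG except the shared ones given
# as arguments.
build_volume_list() {
  local shared=" $* " vg out=""
  while read -r vg || [ -n "$vg" ]; do
    [ -z "$vg" ] && continue
    case "$shared" in
      *" $vg "*) continue ;;   # shared VG: leave its activation to the cluster
    esac
    out="${out:+$out, }\"$vg\""
  done
  if [ -z "$out" ]; then
    echo 'volume_list = []'    # no spaces inside empty brackets
  else
    echo "volume_list = [ $out ]"
  fi
}

# Usage on each node:
#   vgs --noheadings -o vg_name | build_volume_list vg01
```

On RHEL 7, Red Hat's HA-LVM procedure also rebuilds the initramfs after volume_list is changed, so that the boot image does not activate the shared VG. The command is dracut -H -f /boot/initramfs-$(uname -r).img $(uname -r), followed by a reboot.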

3.5.1 Create the volume group resource:

pcs resource create vg01 LVM volgrpname=vg01 exclusive=true

pcs resource show

pcs status

pcs resource move vg01 pcs02

pcs status

3.6 Create the file system resource:

pcs resource create u01 Filesystem device="/dev/vg01/lvol01" directory="/u01" fstype="xfs"

pcs status

Add the resources to the oracle group:

pcs resource group add oracle virtualip vg01 u01

pcs status

Test resource failover:

pcs cluster standby pcs01

pcs cluster unstandby pcs01

3.6.1 Install the Oracle database on the /u01 file system:

Kernel parameters (all nodes):

vi /etc/sysctl.conf

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

# kernel.shmmax must be smaller than physical memory

kernel.shmmax = 64424509440

kernel.panic_on_oops = 1

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

Apply the settings: sysctl -p
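Since kernel.shmmax has to stay below physical memory, you can derive it from the installed RAM. A common rule of thumb sets kernel.shmall to at least shmmax divided by the page size, rounded up. This is a minimal sketch of that arithmetic; the helper name calc_shm and the percentage you pass are assumptions, not values from this article.

```bash
#!/usr/bin/env bash
# calc_shm (hypothetical helper): given physical memory in bytes and the
# percentage of it allowed for one shared memory segment, print
# kernel.shmmax (bytes) and kernel.shmall (pages, rounded up).
calc_shm() {
  local mem=$1 pct=$2 page=${3:-4096}
  # shmmax must stay below physical memory
  if [ "$pct" -ge 100 ]; then
    echo "percentage must be below 100" >&2
    return 1
  fi
  local shmmax=$(( mem * pct / 100 ))
  printf 'kernel.shmmax = %d\n' "$shmmax"
  printf 'kernel.shmall = %d\n' $(( (shmmax + page - 1) / page ))
}

# Usage on a node (MemTotal in /proc/meminfo is in kB):
#   calc_shm $(( $(awk '/MemTotal/ {print $2}' /proc/meminfo) * 1024 )) 50 "$(getconf PAGE_SIZE)"
```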

vi /etc/profile (all nodes)

if [ "$USER" = "oracle" ]; then

if [ "$SHELL" = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

fi

Apply the settings: source /etc/profile

Add the following to /etc/security/limits.conf (all nodes):

oracle soft nofile 10240

oracle hard nofile 65536

oracle soft nproc 16384

oracle hard nproc 16384

oracle soft stack 10240

oracle hard stack 32768

oracle hard memlock unlimited

oracle soft memlock unlimited

Packages (all nodes):

yum -y install binutils compat-libstdc++-33 gcc gcc-c++ glibc glibc-common glibc-devel ksh libaio libaio-devel libgcc libstdc++ libstdc++-devel make sysstat openssh-clients compat-libcap1 xorg-x11-utils xorg-x11-xauth elfutils unixODBC unixODBC-devel libXp elfutils-libelf elfutils-libelf-devel smartmontools unzip

Create the groups and the oracle user with fixed IDs, so that they are identical on both nodes (all nodes):

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

useradd -u 54321 -g oinstall -G dba,oper oracle

Directories and permissions (node 1 only, because /u01 is on the shared disk):

mkdir -p /u01/db

mkdir -p /u01/soft

chown -R oracle:oinstall /u01

chmod -R 755 /u01

Environment variables (all nodes):

su - oracle

vi .bash_profile

export ORACLE_BASE=/u01/db/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/dbhome_1

export ORACLE_SID=orcl

export LANG=en_US.UTF-8

export NLS_LANG=AMERICAN_AMERICA.ZHS16GBK

export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"

export PATH=.:${PATH}:$HOME/bin:$ORACLE_HOME/bin:$ORACLE_HOME/OPatch

export PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

export PATH=${PATH}:$ORACLE_BASE/common/oracle/bin:/u01/oracle/run

export ORACLE_TERM=xterm

export LD_LIBRARY_PATH=$ORACLE_HOME/lib

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib

export CLASSPATH=$ORACLE_HOME/jre

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export THREADS_FLAG=native

export TEMP=/tmp

export TMPDIR=/tmp

umask 022

export TMOUT=0

Install the software (node 1):

vi /etc/oraInst.loc

inventory_loc=/u01/db/oraInventory

inst_group=oinstall

Run the installer as the oracle user from /u01/soft/database:

./runInstaller -silent -debug -force -noconfig -ignoreSysPrereqs \
FROM_LOCATION=/u01/soft/database/stage/products.xml \
oracle.install.option=INSTALL_DB_SWONLY \
UNIX_GROUP_NAME=oinstall \
INVENTORY_LOCATION=/u01/db/oraInventory \
ORACLE_HOME=/u01/db/oracle/product/11.2.0/dbhome_1 \
ORACLE_HOME_NAME="oracle11g" \
ORACLE_BASE=/u01/db/oracle \
oracle.install.db.InstallEdition=EE \
oracle.install.db.isCustomInstall=false \
oracle.install.db.DBA_GROUP=dba \
oracle.install.db.OPER_GROUP=dba \
DECLINE_SECURITY_UPDATES=true

Create the database (node 1):

cd /u01/db/oracle/product/11.2.0/dbhome_1/assistants/dbca/templates

dbca -silent -createDatabase -templateName General_Purpose.dbc -gdbName orcl -sid orcl -sysPassword oracle -systemPassword oracle -responseFile NO_VALUE -datafileDestination /u01/db/oracle/oradata -redoLogFileSize 200 -recoveryAreaDestination NO_VALUE -storageType FS -characterSet ZHS16GBK -nationalCharacterSet AL16UTF16 -sampleSchema false -memoryPercentage 60 -databaseType OLTP -emConfiguration NONE

Create the listener (node 1):

netca -silent -responsefile /u01/db/oracle/product/11.2.0/dbhome_1/assistants/netca/netca.rsp

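Adjust the service name and the static listener registration (node 1). Both listener.ora and tnsnames.ora point at the virtual IP 192.168.52.190, and SID_LIST_LISTENER registers orcl statically:

[oracle@pcs02 ~]$ cd $ORACLE_HOME/network/admin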

[oracle@pcs02 admin]$ more listener.ora

# listener.ora network configuration file: /u01/db/oracle/product/11.2.0/dbhome_1/network/admin/listener.ora

# generated by oracle configuration tools.

listener =

(description_list =

(description =

(address = (protocol = ipc)(key = extproc1521))

(address = (protocol = tcp)(host = 192.168.52.190)(port = 1521))

)

)

sid_list_listener =

(sid_list =

(sid_desc =

(global_dbname = orcl)

(oracle_home = /u01/db/oracle/product/11.2.0/dbhome_1)

(sid_name = orcl)

)

)

adr_base_listener = /u01/db/oracle

[oracle@pcs02 admin]$ more tnsnames.ora

# tnsnames.ora network configuration file: /u01/db/oracle/product/11.2.0/dbhome_1/network/admin/tnsnames.ora

# generated by oracle configuration tools.

orcl =

(description =

(address = (protocol = tcp)(host = 192.168.52.190)(port = 1521))

(connect_data =

(server = dedicated)

(service_name = orcl)

)

)

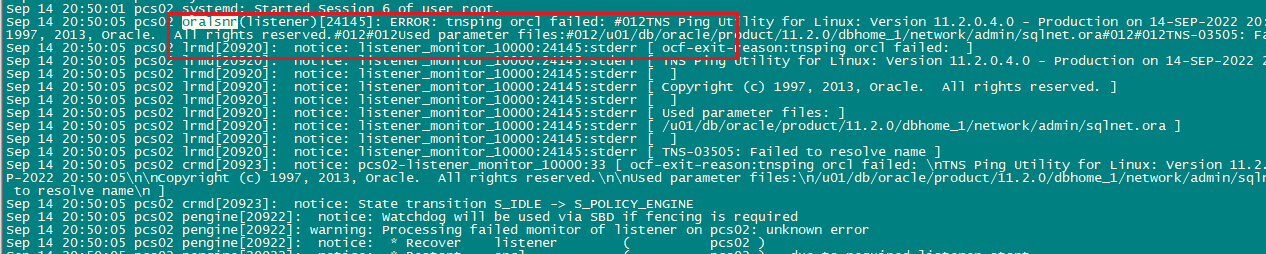

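Note: the service name orcl in tnsnames.ora must match the SID. Otherwise the pcs resource on the current node fails and the cluster fails over to the other node, where the listener and database resources end up stopped, as shown in the figure.

After correcting it, run systemctl restart pacemaker on both nodes to return to normal.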
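Because a mismatch between SERVICE_NAME and the SID triggers the failure described above, you can check for it before handing the listener to the cluster. This is a minimal sketch; the helper name tns_has_service is hypothetical. It assumes each "(SERVICE_NAME = x)" clause sits on one line, as in the file shown above, and that the SID contains no regex metacharacters.

```bash
#!/usr/bin/env bash
# tns_has_service (hypothetical helper): reads tnsnames.ora on stdin and
# succeeds if some entry has SERVICE_NAME equal to the given SID.
# Matching is case-insensitive and line-based.
tns_has_service() {
  local sid=$1
  grep -Eiq "service_name[[:space:]]*=[[:space:]]*${sid}[[:space:]]*\)"
}

# Usage as oracle:
#   tns_has_service "$ORACLE_SID" < "$ORACLE_HOME/network/admin/tnsnames.ora" \
#     || echo "SERVICE_NAME does not match ORACLE_SID"
```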

Tune basic parameters (node 1):

alter profile default limit failed_login_attempts unlimited;

alter profile default limit password_life_time unlimited;

alter system set audit_trail=none scope=spfile sid='*';

alter system set recyclebin=off scope=spfile sid='*';

alter system set sga_target=2000m scope=spfile sid='*';

alter system set pga_aggregate_target=500m sid='*';

Copy the following files from node 1 to node 2. They are on local disks, not on /u01:

scp -p /etc/oraInst.loc pcs02:/etc/

scp -p /etc/oratab pcs02:/etc/

scp -p /usr/local/bin/coraenv pcs02:/usr/local/bin/

scp -p /usr/local/bin/dbhome pcs02:/usr/local/bin/

scp -p /usr/local/bin/oraenv pcs02:/usr/local/bin/
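The five scp commands above can be wrapped in a loop that offers a dry run. This is a minimal sketch; the helper name copy_oracle_files and the SCP override variable are hypothetical.

```bash
#!/usr/bin/env bash
# copy_oracle_files (hypothetical helper): copies the node-local Oracle
# files to the target node, preserving times and modes (scp -p).
# Set SCP=echo for a dry run that only prints the scp arguments.
copy_oracle_files() {
  local target=$1 f
  for f in /etc/oraInst.loc /etc/oratab \
           /usr/local/bin/coraenv /usr/local/bin/dbhome /usr/local/bin/oraenv; do
    ${SCP:-scp} -p "$f" "${target}:$(dirname "$f")/" || return 1
  done
}

# Usage (as root on node 1):
#   SCP=echo copy_oracle_files pcs02   # dry run
#   copy_oracle_files pcs02
```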

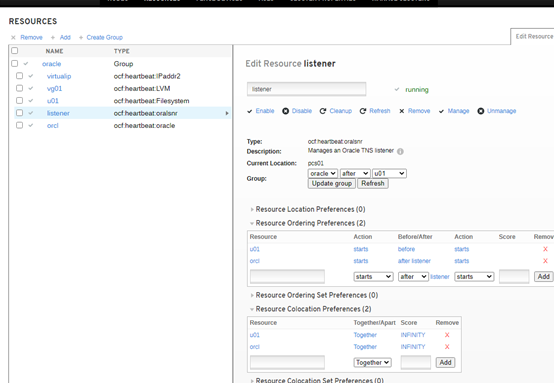

3.7 Create the listener resource (node 1):

pcs resource create listener oralsnr sid="orcl" listener="listener" --group=oracle

pcs status

3.8 Create the Oracle DB resource (node 1):

pcs resource create orcl oracle sid="orcl" --group=oracle

pcs status

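3.9 Define resource colocation (node 1). The resources already belong to the oracle group, which implies both colocation and start order, so the constraints below only make these dependencies explicit.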

pcs constraint colocation add vg01 with virtualip

pcs constraint colocation add u01 with vg01

pcs constraint colocation add listener with u01

pcs constraint colocation add orcl with listener

3.10 Define the resource start order (node 1)

pcs constraint order start virtualip then start vg01

pcs constraint order start vg01 then start u01

pcs constraint order start u01 then start listener

pcs constraint order start listener then start orcl

View all constraints:

[root@pcs01 ~]# pcs constraint show --full

location constraints:

resource: vg01

enabled on: pcs02 (score:infinity) (role: started) (id:cli-prefer-vg01)

resource: virtualip

enabled on: pcs01 (score:infinity) (role: started) (id:cli-prefer-virtualip)

ordering constraints:

start virtualip then start vg01 (kind:mandatory) (id:order-virtualip-vg01-mandatory)

start vg01 then start u01 (kind:mandatory) (id:order-vg01-u01-mandatory)

start u01 then start listener (kind:mandatory) (id:order-u01-listener-mandatory)

start listener then start orcl (kind:mandatory) (id:order-listener-orcl-mandatory)

colocation constraints:

vg01 with virtualip (score:infinity) (id:colocation-vg01-virtualip-infinity)

u01 with vg01 (score:infinity) (id:colocation-u01-vg01-infinity)

listener with u01 (score:infinity) (id:colocation-listener-u01-infinity)

orcl with listener (score:infinity) (id:colocation-orcl-listener-infinity)

ticket constraints:
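The two location constraints with ids cli-prefer-vg01 and cli-prefer-virtualip in the output above were created by the earlier pcs resource move tests, and they keep pinning those resources to one node. Running pcs resource clear with the resource name removes such a constraint. This is a minimal sketch that lists the affected resources. The helper name is hypothetical, and it only recognises cli-prefer ids, because cli-ban ids also embed a node name.

```bash
#!/usr/bin/env bash
# leftover_move_constraints (hypothetical helper): reads
# `pcs constraint show --full` output on stdin and prints the resources
# that still carry a cli-prefer-<resource> location constraint created
# by `pcs resource move`.
leftover_move_constraints() {
  grep -oE 'id:cli-prefer-[^)]+' | sed 's/^id:cli-prefer-//' | sort -u
}

# Usage: remove the leftover constraints once the failover test is done
#   pcs constraint show --full | leftover_move_constraints | xargs -r -n 1 pcs resource clear
```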

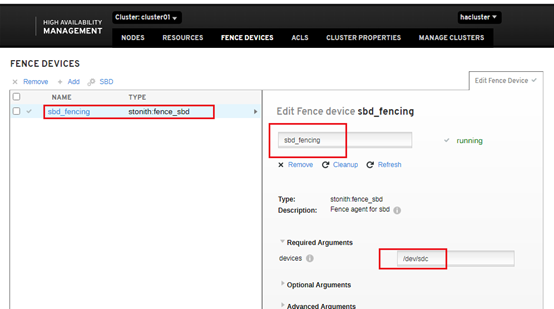

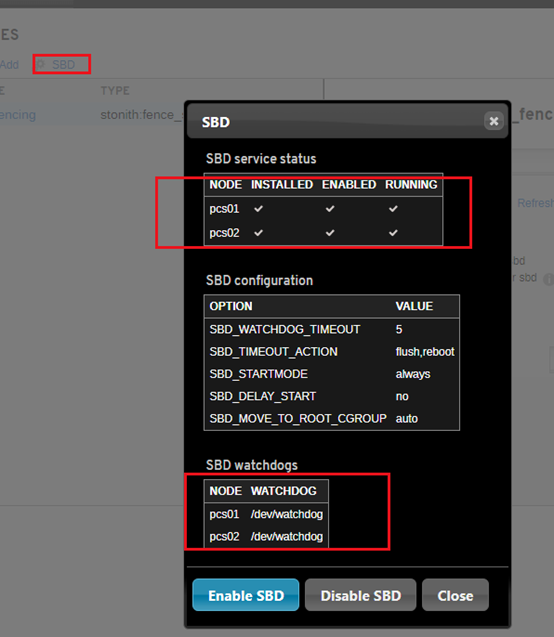

3.11 Install fence devices

3.11.1 SBD method

A shared-disk SBD is used here. A 100 MB disk is more than enough; in theory anything over 4 MB works.

Check the current STONITH setting:

pcs property | grep stonith-enabled

Install the fence agents (all nodes):

yum install fence-agents-ipmilan fence-agents-sbd fence-agents-drac5

Install the watchdog and sbd packages (all nodes):

yum install -y watchdog sbd

Configure softdog as the watchdog device and load it automatically at boot (all nodes):

echo softdog > /etc/modules-load.d/softdog.conf

/sbin/modprobe softdog

Point SBD_DEVICE in the SBD configuration at the shared disk (all nodes):

vi /etc/sysconfig/sbd

Change (/dev/sdc is the shared disk):

SBD_DEVICE="/dev/sdc"

SBD_OPTS="-n node1"

SBD_OPTS="-n ..." is required only if the cluster node name differs from the hostname.

Reference configuration:

[root@pcs01 ~]# cat /etc/sysconfig/sbd | egrep -v "#|^$"

SBD_DEVICE="/dev/sdc"

SBD_PACEMAKER=yes

SBD_STARTMODE=always

SBD_DELAY_START=no

SBD_WATCHDOG_DEV=/dev/watchdog

SBD_WATCHDOG_TIMEOUT=5

SBD_TIMEOUT_ACTION=flush,reboot

SBD_MOVE_TO_ROOT_CGROUP=auto

SBD_OPTS=

Create (initialize) the SBD device (one node only):

pcs stonith sbd device setup --device=/dev/sdc

Enable the SBD service (all nodes):

systemctl enable --now sbd

SBD is now configured. Create the Pacemaker STONITH fence device (one node only):

pcs stonith create sbd_fencing fence_sbd devices=/dev/sdc

Then enable STONITH:

pcs property set stonith-enabled=true

To test whether SBD is working:

pcs stonith fence pcs02

Node pcs02 should be rebooted.

3.11.2 iDRAC method

This part explains how to configure fencing on a Dell physical server, the most commonly used server in NetEye 4 installations. A fencing configuration is not required for voting-only cluster nodes or for Elastic-only nodes, as they are not part of the PCS cluster.

Configuring iDRAC

The integrated Dell Remote Access Controller (iDRAC) is a hardware component on the motherboard. It provides both a web interface and a command-line interface for remote management tasks.

Before beginning, configure the IPMI (Intelligent Platform Management Interface) settings and create a new account.

In the iDRAC web interface, enable IPMI over LAN under iDRAC Settings > Connectivity > Network > IPMI Settings.

Then create a new user with a username and password of your choice, read-only privileges for the console, and administrative privileges on IPMI.

Note that you must replicate this configuration on each physical server.

Install fence devices

Next, install the IPMI LAN fence agents on each server in order to use fencing on Dell servers:

yum install fence-agents-ipmilan

Several new fence devices are now available, including fence_idrac. List them and show the properties of fence_idrac:

pcs stonith list

pcs stonith describe fence_idrac

Test that the iDRAC interface is reachable on the default IPMI port 623 (UDP); idrac_ip is a placeholder for the iDRAC address:

nmap -sU -p623 idrac_ip

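Finally, safely test the configuration by querying the chassis status of each node remotely. idrac_ip, ipmi_username, ipmi_password and kg_key are placeholders:

ipmitool -I lanplus -H idrac_ip -U ipmi_username -P ipmi_password -y kg_key -v chassis status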

Configuring PCS

Fencing is controlled by the stonith-enabled property. STONITH is an acronym for "Shoot The Other Node In The Head". Disable STONITH until fencing is correctly configured, to avoid issues during the procedure:

pcs property set stonith-enabled=false

pcs stonith cleanup

At this point you can create a STONITH resource for each node. In a 2-node cluster, both nodes may lose contact with each other, and each then tries to fence the other. Rebooting both nodes at the same time causes downtime and may harm cluster integrity. To avoid this, configure a different delay for each node (e.g., no delay on one and at least 5 seconds on the other). For the safety of the cluster, also set the reboot method to "cycle" instead of "onoff". idrac_ip_node1 and idrac_ip_node2 are placeholders for the iDRAC addresses:

pcs stonith create fence_node1 fence_idrac ipaddr="idrac_ip_node1" delay=0 lanplus=1 login="ipmi_username" passwd_script="ipmi_password" method="cycle" pcmk_host_list="node1.neteyelocal"

pcs stonith create fence_node2 fence_idrac ipaddr="idrac_ip_node2" delay=5 lanplus=1 login="ipmi_username" passwd_script="ipmi_password" method="cycle" pcmk_host_list="node2.neteyelocal"

Use a password script instead of passing the password directly, for instance a very simple bash script like the one below. The script should be readable only by root, which prevents the iDRAC password from being extracted from the pcs resource. Place it in /usr/local/bin/ so that it can be invoked as a regular command (here, ipmi_password):

#!/bin/bash

echo "my_secret_psw"
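To follow the advice above, you can create the password script with owner-only permissions from the start. This is a minimal sketch; the helper name make_pw_script is hypothetical, and the target path /usr/local/bin/ipmi_password is an assumption that matches passwd_script="ipmi_password" above.

```bash
#!/usr/bin/env bash
# make_pw_script (hypothetical helper): writes a script at PATH that prints
# SECRET, readable and executable by its owner (root) only.
make_pw_script() {
  local path=$1 secret=$2
  (
    umask 077   # a newly created file gets mode 0600, never world-readable
    # %q shell-quotes the secret so special characters survive
    printf '#!/bin/bash\necho %q\n' "$secret" > "$path"
  )
  chmod 700 "$path"
}

# Usage (as root on every node):
#   make_pw_script /usr/local/bin/ipmi_password 'my_secret_psw'
```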

If everything has been configured properly, pcs status should show the fence devices as Started.

To prevent unwanted fencing during minor network outages, increase the totem token timeout to at least 5 seconds by editing /etc/corosync/corosync.conf as follows:

totem {

version: 2

cluster_name: neteye

secauth: off

transport: udpu

token: 5000

}

Then sync this config file to all other cluster nodes and reload corosync:

pcs cluster sync

pcs cluster reload corosync
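Before or after syncing, you can read the token value back from the file to confirm the change. This is a minimal sketch; the helper name totem_token_ms is hypothetical. It assumes the totem section contains no nested { } blocks, as in the example above.

```bash
#!/usr/bin/env bash
# totem_token_ms (hypothetical helper): reads corosync.conf on stdin and
# prints the "token:" value (milliseconds) from the totem { } section;
# fails if there is none. Assumes totem has no nested { } blocks.
totem_token_ms() {
  awk '
    /^[[:space:]]*totem[[:space:]]*[{]/ { in_totem = 1; next }
    in_totem && /^[[:space:]]*[}]/      { in_totem = 0 }
    in_totem && $1 == "token:"          { print $2; found = 1 }
    END { exit (found ? 0 : 1) }
  '
}

# Usage: warn if the token timeout is missing or below 5000 ms
#   t=$(totem_token_ms < /etc/corosync/corosync.conf) && [ "$t" -ge 5000 ] \
#     || echo "totem token is missing or below 5000 ms"
```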

Unwanted fencing may also happen when a node "commits suicide", i.e. shuts itself down because it cannot contact the other node of the cluster. This is undesirable because all nodes of the cluster might then be fenced at the same time. To avoid it, add location constraints that prevent each node's STONITH resource from running on that node itself:

pcs constraint location fence_node1 avoids node1.neteyelocal

pcs constraint location fence_node2 avoids node2.neteyelocal

Now that fencing is configured, set the stonith-enabled property to true to enable it:

pcs property set stonith-enabled=true

pcs stonith cleanup

Always remember to disable fencing temporarily during updates and upgrades.

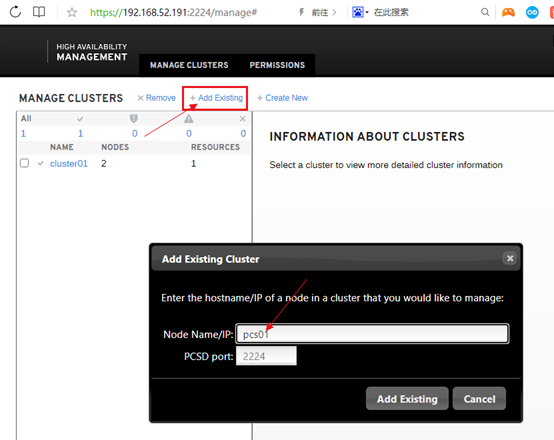

3.12 Web management console:

netstat -tunlp | grep LISTEN | grep 2224

https://192.168.52.191:2224 (Google Chrome recommended)

Login: hacluster/secure_password

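3.13 Host failure test

Monitor the cluster with crm_mon or pcs status.

Restart one of the hosts with reboot or shutdown -h now.

Check the result with pcs status, then verify the file system and the database on the surviving host:

df -h

su - oracle

sqlplus system/oracle@orcl (test the connection)

Whichever host is restarted, the pcs resources fail over normally.

However, suppose both hosts are shut down and only one of them is started again, while the other stays down to simulate a host that cannot be repaired. In that case the resources on the running host stay Stopped. The log below shows why: the node reports "partition without quorum" and marks the peer as UNCLEAN (offline), and without quorum Pacemaker does not start resources.

In this case the resources have to be started manually, as follows:

pcs resource

Start the resources one at a time in the dependency order shown by the output above. debug-start runs the resource agent outside Pacemaker's control. As a result, pcs status still reports the resources as Stopped (see the log below), even though /u01 is mounted and the database accepts connections.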

pcs resource debug-start xxx
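The manual recovery above can be scripted. This is a minimal sketch that calls pcs resource debug-start for each resource in the given order and stops at the first failure. The helper name start_in_order and the RUNNER override (used for a dry run with RUNNER=echo) are hypothetical. The order virtualip, vg01, u01, listener, orcl is the one defined in 3.10.

```bash
#!/usr/bin/env bash
# start_in_order (hypothetical helper): runs `pcs resource debug-start` for
# each resource argument in order and stops at the first failure.
# RUNNER=echo prints the pcs arguments instead of running pcs.
start_in_order() {
  local runner=${RUNNER:-pcs} r
  for r in "$@"; do
    if ! "$runner" resource debug-start "$r"; then
      echo "debug-start failed for $r; not starting the remaining resources" >&2
      return 1
    fi
  done
}

# Usage (as root on the surviving node):
#   start_in_order virtualip vg01 u01 listener orcl
```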

Reference log:

[root@pcs01 ~]# pcs status

cluster name: cluster01

stack: corosync

current dc: none

last updated: wed aug 17 10:03:39 2022

last change: wed aug 17 09:49:18 2022 by root via cibadmin on pcs01

2 nodes configured

6 resource instances configured

node pcs01: unclean (offline)

node pcs02: unclean (offline)

full list of resources:

resource group: oracle

virtualip (ocf::heartbeat:ipaddr2): stopped

vg01 (ocf::heartbeat:lvm): stopped

u01 (ocf::heartbeat:filesystem): stopped

listener (ocf::heartbeat:oralsnr): stopped

orcl (ocf::heartbeat:oracle): stopped

sbd_fencing (stonith:fence_sbd): stopped

daemon status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

sbd: active/enabled

[root@pcs01 ~]# pcs status

cluster name: cluster01

stack: corosync

current dc: pcs01 (version 1.1.23-1.0.1.el7_9.1-9acf116022) - partition without quorum

last updated: thu aug 18 09:14:13 2022

last change: wed aug 17 10:05:26 2022 by root via cibadmin on pcs01

2 nodes configured

6 resource instances configured

node pcs02: unclean (offline)

online: [ pcs01 ]

full list of resources:

resource group: oracle

virtualip (ocf::heartbeat:ipaddr2): stopped

vg01 (ocf::heartbeat:lvm): stopped

u01 (ocf::heartbeat:filesystem): stopped

listener (ocf::heartbeat:oralsnr): stopped

orcl (ocf::heartbeat:oracle): stopped

sbd_fencing (stonith:fence_sbd): stopped

daemon status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

sbd: active/enabled

[root@pcs01 ~]# journalctl |grep -i error

aug 18 09:06:08 localhost.localdomain kernel: ras: correctable errors collector initialized.

aug 18 09:06:13 pcs01 corosync[1267]: [qb ] error in connection setup (/dev/shm/qb-1267-1574-30-pr9ltr/qb): broken pipe (32)

[root@pcs01 ~]# corosync-cmapctl |grep members

runtime.totem.pg.mrp.srp.members.1.config_version (u64) = 0

runtime.totem.pg.mrp.srp.members.1.ip (str) = r(0) ip(192.168.52.191)

runtime.totem.pg.mrp.srp.members.1.join_count (u32) = 1

runtime.totem.pg.mrp.srp.members.1.status (str) = joined

[root@pcs01 ~]# pcs status corosync

membership information

----------------------

nodeid votes name

1 1 pcs01 (local)

[root@pcs01 ~]# pcs status pcsd

pcs01: online

pcs02: offline

[root@pcs01 ~]# pcs resource debug-start virtualip

operation start for virtualip (ocf:heartbeat:ipaddr2) returned: 'ok' (0)

> stderr: aug 17 10:03:56 info: adding inet address 192.168.52.190/24 with broadcast address 192.168.52.255 to device ens32

> stderr: aug 17 10:03:56 info: bringing device ens32 up

> stderr: aug 17 10:03:56 info: /usr/libexec/heartbeat/send_arp -i 200 -r 5 -p /var/run/resource-agents/send_arp-192.168.52.190 ens32 192.168.52.190 auto not_used not_used

[root@pcs01 ~]# pcs resource debug-start vg01

operation start for vg01 (ocf:heartbeat:lvm) returned: 'ok' (0)

> stdout: volume_list=[]

> stdout: volume group "vg01" successfully changed

> stdout: volume_list=[]

> stderr: aug 17 10:04:05 warning: disable lvmetad in lvm.conf. lvmetad should never be enabled in a clustered environment. set use_lvmetad=0 and kill the lvmetad process

> stderr: aug 17 10:04:05 info: activating volume group vg01

> stderr: aug 17 10:04:06 info: reading volume groups from cache. found volume group "ol" using metadata type lvm2 found volume group "vg01" using metadata type lvm2

> stderr: aug 17 10:04:06 info: new tag "pacemaker" added to vg01

> stderr: aug 17 10:04:06 info: 1 logical volume(s) in volume group "vg01" now active

[root@pcs01 ~]# pcs resource debug-start u01

operation start for u01 (ocf:heartbeat:filesystem) returned: 'ok' (0)

> stderr: aug 17 10:04:13 info: running start for /dev/vg01/lvol01 on /u01

[root@pcs01 ~]# pcs resource debug-start listener

operation start for listener (ocf:heartbeat:oralsnr) returned: 'ok' (0)

> stderr: aug 17 10:04:20 info: listener listener running:

> stderr: lsnrctl for linux: version 11.2.0.4.0 - production on 17-aug-2022 10:04:18

> stderr:

> stderr: copyright (c) 1991, 2013, oracle. all rights reserved.

> stderr:

> stderr: starting /u01/db/oracle/product/11.2.0/dbhome_1/bin/tnslsnr: please wait...

> stderr:

> stderr: tnslsnr for linux: version 11.2.0.4.0 - production

> stderr: system parameter file is /u01/db/oracle/product/11.2.0/dbhome_1/network/admin/listener.ora

> stderr: log messages written to /u01/db/oracle/diag/tnslsnr/pcs01/listener/alert/log.xml

> stderr: listening on: (description=(address=(protocol=ipc)(key=extproc1521)))

> stderr: listening on: (description=(address=(protocol=tcp)(host=192.168.52.190)(port=1521)))

> stderr:

> stderr: connecting to (description=(address=(protocol=ipc)(key=extproc1521)))

> stderr: status of the listener

> stderr: ------------------------

> stderr: alias listener

> stderr: version tnslsnr for linux: version 11.2.0.4.0 - production

> stderr: start date 17-aug-2022 10:04:19

> stderr: uptime 0 days 0 hr. 0 min. 0 sec

> stderr: trace level off

> stderr: security on: local os authentication

> stderr: snmp off

> stderr: listener parameter file /u01/db/oracle/product/11.2.0/dbhome_1/network/admin/listener.ora

> stderr: listener log file /u01/db/oracle/diag/tnslsnr/pcs01/listener/alert/log.xml

> stderr: listening endpoints summary...

> stderr: (description=(address=(protocol=ipc)(key=extproc1521)))

> stderr: (description=(address=(protocol=tcp)(host=192.168.52.190)(port=1521)))

> stderr: services summary...

> stderr: service "orcl" has 1 instance(s).

> stderr: instance "orcl", status unknown, has 1 handler(s) for this service...

> stderr: the command completed successfully

> stderr: last login: wed aug 17 09:58:46 cst 2022

[root@pcs01 ~]# pcs resource debug-start orcl

operation start for orcl (ocf:heartbeat:oracle) returned: 'ok' (0)

> stderr: aug 17 10:04:31 info: oracle instance orcl started:

[root@pcs01 ~]# pcs status

cluster name: cluster01

stack: corosync

current dc: pcs01 (version 1.1.23-1.0.1.el7_9.1-9acf116022) - partition without quorum

last updated: wed aug 17 10:04:37 2022

last change: wed aug 17 09:49:18 2022 by root via cibadmin on pcs01

2 nodes configured

6 resource instances configured

node pcs02: unclean (offline)

online: [ pcs01 ]

full list of resources:

resource group: oracle

virtualip (ocf::heartbeat:ipaddr2): stopped

vg01 (ocf::heartbeat:lvm): stopped

u01 (ocf::heartbeat:filesystem): stopped

listener (ocf::heartbeat:oralsnr): stopped

orcl (ocf::heartbeat:oracle): stopped

sbd_fencing (stonith:fence_sbd): stopped

daemon status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

sbd: active/enabled

[root@pcs01 ~]# df -h

filesystem size used avail use% mounted on

devtmpfs 3.8g 0 3.8g 0% /dev

tmpfs 3.8g 65m 3.7g 2% /dev/shm

tmpfs 3.8g 8.7m 3.8g 1% /run

tmpfs 3.8g 0 3.8g 0% /sys/fs/cgroup

/dev/mapper/ol-root 26g 7.1g 19g 28% /

/dev/sda1 1014m 184m 831m 19% /boot

tmpfs 768m 0 768m 0% /run/user/0

/dev/mapper/vg01-lvol01 10g 6.2g 3.9g 62% /u01

[root@pcs01 ~]# su - oracle

last login: wed aug 17 10:04:31 cst 2022

[oracle@pcs01 ~]$ sqlplus system/oracle@orcl

sql*plus: release 11.2.0.4.0 production on wed aug 17 10:04:55 2022

copyright (c) 1982, 2013, oracle. all rights reserved.

connected to:

oracle database 11g enterprise edition release 11.2.0.4.0 - 64bit production

with the partitioning, olap, data mining and real application testing options

sql> exit

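Fix: install multipath software to aggregate the disk paths.

For reference:

Fix:

Write volume_list = [] without any space between the brackets.

Problem:

Fix: all statuses were normal, but old error messages remained in the output and needed to be cleared. pcs stonith cleanup did not help. In the end, stopping pacemaker on both nodes (systemctl stop pacemaker) and then starting it on both (systemctl start pacemaker) cleared the messages. References: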

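Pacemaker configuration for an Oracle database and its listener

Configuring fencing on Dell servers

Building Oracle HA with Pacemaker

Time synchronization

Exclusive activation of a volume group in a cluster

Oracle 11g quick installation reference

Building a highly available database cluster with CentOS 7.6 and pcs

Common pcs commands

Dell DRAC 5

UnionTech HA setup and partial command manual (not original)

Active-passive cluster for near HA using Pacemaker, DRBD, Corosync and MySQL

[Command] Pacemaker command pcs resource (managing resources)

Implementing Oracle 12c high availability with pcs on RHEL 7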

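SSH user-equivalence script, for reference (taken from the Oracle 12c software package). SSH trust is not actually required here; it only makes copying files between the two hosts password-free.

Example: ./sshusersetup.sh -user root -hosts "pcs01 pcs02" -advanced -nopromptpassphrase

Enter the password and answer yes when prompted, then verify: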

ssh pcs01 date

ssh pcs02 date

#!/bin/sh

# nitin jerath - aug 2005

#usage sshusersetup.sh -user [ -hosts \"\" | -hostfile ] [ -advanced ] [ -verify] [ -exverify ] [ -logfile ] [-confirm] [-shared] [-help] [-usepassphrase] [-nopromptpassphrase]

#eg. sshusersetup.sh -hosts "host1 host2" -user njerath -advanced

#this script is used to setup ssh connectivity from the host on which it is

# run to the specified remote hosts. after this script is run, the user can use

# ssh to run commands on the remote hosts or copy files between the local host

# and the remote hosts without being prompted for passwords or confirmations.

# the list of remote hosts and the user name on the remote host is specified as

# a command line parameter to the script. note that in case the user on the

# remote host has its home directory nfs mounted or shared across the remote

# hosts, this script should be used with -shared option.

#specifying the -advanced option on the command line would result in ssh

# connectivity being setup among the remote hosts which means that ssh can be

# used to run commands on one remote host from the other remote host or copy

# files between the remote hosts without being prompted for passwords or

# confirmations.

#please note that the script would remove write permissions on the remote hosts

#for the user home directory and ~/.ssh directory for "group" and "others". this

# is an ssh requirement. the user would be explicitly informed about this by the script and prompted to continue. in case the user presses no, the script would exit. in case the user does not want to be prompted, he can use -confirm option.

# as a part of the setup, the script would use ssh to create files within ~/.ssh

# directory of the remote node and to setup the requisite permissions. the

#script also uses scp to copy the local host public key to the remote hosts so

# that the remote hosts trust the local host for ssh. at the time, the script

#performs these steps, ssh connectivity has not been completely setup hence

# the script would prompt the user for the remote host password.

#for each remote host, for remote users with non-shared homes this would be

# done once for ssh and once for scp. if the number of remote hosts are x, the

# user would be prompted 2x times for passwords. for remote users with shared

# homes, the user would be prompted only twice, once each for scp and ssh.

#for security reasons, the script does not save passwords and reuse it. also,

# for security reasons, the script does not accept passwords redirected from a

#file. the user has to key in the confirmations and passwords at the prompts.

#the -verify option means that the user just wants to verify whether ssh has

#been set up. in this case, the script would not setup ssh but would only check

# whether ssh connectivity has been setup from the local host to the remote

# hosts. the script would run the date command on each remote host using ssh. in

# case the user is prompted for a password or sees a warning message for a

#particular host, it means ssh connectivity has not been setup correctly for

# that host.

#in case the -verify option is not specified, the script would setup ssh and

#then do the verification as well.

#in case the user specifies the -exverify option, an exhaustive verification would be done. in that case, the following would be checked:

# 1. ssh connectivity from local host to all remote hosts.

# 2. ssh connectivity from each remote host to itself and other remote hosts.

#echo parsing command line arguments

numargs=$#

advanced=false

hostname=`hostname`

confirm=no

shared=false

i=1

usr=$USER

if test -z "$temp"

then

temp=/tmp

fi

identity=id_rsa

logfile=$temp/sshusersetup_`date +%F-%H-%M-%S`.log

verify=false

exhaustive_verify=false

help=false

passphrase=no

rerun_sshkeygen=no

no_prompt_passphrase=no

while [ $i -le $numargs ]

do

j=$1

if [ $j = "-hosts" ]

then

hosts=$2

shift 1

i=`expr $i + 1`

fi

if [ $j = "-user" ]

then

usr=$2

shift 1

i=`expr $i + 1`

fi

if [ $j = "-logfile" ]

then

logfile=$2

shift 1

i=`expr $i + 1`

fi

if [ $j = "-confirm" ]

then

confirm=yes

fi

if [ $j = "-hostfile" ]

then

cluster_configuration_file=$2

shift 1

i=`expr $i + 1`

fi

if [ $j = "-usepassphrase" ]

then

passphrase=yes

fi

if [ $j = "-nopromptpassphrase" ]

then

no_prompt_passphrase=yes

fi

if [ $j = "-shared" ]

then

shared=true

fi

if [ $j = "-exverify" ]

then

exhaustive_verify=true

fi

if [ $j = "-verify" ]

then

verify=true

fi

if [ $j = "-advanced" ]

then

advanced=true

fi

if [ $j = "-help" ]

then

help=true

fi

i=`expr $i + 1`

shift 1

done

if [ $help = "true" ]

then

echo "usage $0 -user [ -hosts \"\" | -hostfile ] [ -advanced ] [ -verify] [ -exverify ] [ -logfile ] [-confirm] [-shared] [-help] [-usepassphrase] [-nopromptpassphrase]"

echo "this script is used to setup ssh connectivity from the host on which it is run to the specified remote hosts. after this script is run, the user can use ssh to run commands on the remote hosts or copy files between the local host and the remote hosts without being prompted for passwords or confirmations. the list of remote hosts and the user name on the remote host is specified as a command line parameter to the script. "

echo "-user : user on remote hosts. "

echo "-hosts : space separated remote hosts list. "

echo "-hostfile : the user can specify the host names either through the -hosts option or by specifying the absolute path of a cluster configuration file. a sample host file contents are below: "

echo

echo " stacg30 stacg30int 10.1.0.0 stacg30v -"

echo " stacg34 stacg34int 10.1.0.1 stacg34v -"

echo

echo " the first column in each row of the host file will be used as the host name."

echo

echo "-usepassphrase : the user wants to set up passphrase to encrypt the private key on the local host. "

echo "-nopromptpassphrase : the user does not want to be prompted for passphrase related questions. this is for users who want the default behavior to be followed."

echo "-shared : in case the user on the remote host has its home directory nfs mounted or shared across the remote hosts, this script should be used with -shared option. "

echo " it is possible for the user to determine whether a user's home directory is shared or non-shared. let us say we want to determine that user user1's home directory is shared across hosts a, b and c."

echo " follow the following steps:"

echo " 1. on host a, touch ~user1/checksharedhome.tmp"

echo " 2. on hosts b and c, ls -al ~user1/checksharedhome.tmp"

echo " 3. if the file is present on hosts b and c in ~user1 directory and"

echo " is identical on all hosts a, b, c, it means that the user's home "

echo " directory is shared."

echo " 4. on host a, rm -f ~user1/checksharedhome.tmp"

echo " in case the user accidentally passes -shared option for non-shared homes or vice versa, ssh connectivity would only be set up for a subset of the hosts. the user would have to re-run the setup script with the correct option to rectify this problem."

echo "-advanced : specifying the -advanced option on the command line would result in ssh connectivity being setup among the remote hosts which means that ssh can be used to run commands on one remote host from the other remote host or copy files between the remote hosts without being prompted for passwords or confirmations."

echo "-confirm: the script would remove write permissions on the remote hosts for the user home directory and ~/.ssh directory for "group" and "others". this is an ssh requirement. the user would be explicitly informed about this by the script and prompted to continue. in case the user presses no, the script would exit. in case the user does not want to be prompted, he can use -confirm option."

echo "as a part of the setup, the script would use ssh to create files within ~/.ssh directory of the remote node and to setup the requisite permissions. the script also uses scp to copy the local host public key to the remote hosts so that the remote hosts trust the local host for ssh. at the time, the script performs these steps, ssh connectivity has not been completely setup hence the script would prompt the user for the remote host password. "

echo "for each remote host, for remote users with non-shared homes this would be done once for ssh and once for scp. if the number of remote hosts are x, the user would be prompted 2x times for passwords. for remote users with shared homes, the user would be prompted only twice, once each for scp and ssh. for security reasons, the script does not save passwords and reuse it. also, for security reasons, the script does not accept passwords redirected from a file. the user has to key in the confirmations and passwords at the prompts. "

echo "-verify : -verify option means that the user just wants to verify whether ssh has been set up. in this case, the script would not setup ssh but would only check whether ssh connectivity has been setup from the local host to the remote hosts. the script would run the date command on each remote host using ssh. in case the user is prompted for a password or sees a warning message for a particular host, it means ssh connectivity has not been setup correctly for that host. in case the -verify option is not specified, the script would setup ssh and then do the verification as well. "

echo "-exverify : in case the user speciies the -exverify option, an exhaustive verification for all hosts would be done. in that case, the following would be checked: "

echo " 1. ssh connectivity from local host to all remote hosts. "

echo " 2. ssh connectivity from each remote host to itself and other remote hosts. "

echo the -exverify option can be used in conjunction with the -verify option as well to do an exhaustive verification once the setup has been done.

echo "taking some examples: let us say local host is z, remote hosts are a,b and c. local user is njerath. remote users are racqa(non-shared), aime(shared)."

echo "$0 -user racqa -hosts \"a b c\" -advanced -exverify -confirm"

echo "script would set up connectivity from z -> a, z -> b, z -> c, a -> a, a -> b, a -> c, b -> a, b -> b, b -> c, c -> a, c -> b, c -> c."

echo "since the user has given the -exverify option, all these scenarios would be verified too."

echo

echo "now the user runs : $0 -user racqa -hosts \"a b c\" -verify"

echo "since the -verify option is given, no ssh setup would be done, only verification of the existing setup. also, since neither -exverify nor -advanced is given, the script would only verify connectivity from z -> a, z -> b, z -> c"

echo "now the user runs : $0 -user racqa -hosts \"a b c\" -verify -advanced"

echo "since the -verify option is given, no ssh setup would be done, only verification of the existing setup. also, since the -advanced option is given, the script would verify connectivity from z -> a, z -> b, z -> c, a -> a, a -> b, a -> c"

echo "now the user runs:"

echo "$0 -user aime -hosts \"a b c\" -confirm -shared"

echo "script would set up connectivity between z->a, z->b, z->c only, since the -advanced option is not given."

echo "all these scenarios would be verified too."

exit

fi

if test -z "$hosts"

then

if test -n "$cluster_configuration_file" && test -f "$cluster_configuration_file"

then

hosts=`awk '$1 !~ /^#/ { str = str " " $1 } END { print str }' "$cluster_configuration_file"`

elif ! test -f "$cluster_configuration_file"

then

echo "please specify a valid and existing cluster configuration file."

fi

fi

if test -z "$hosts" || test -z "$usr"

then

echo "either user name or host information is missing"

echo "usage $0 -user <user name> [ -hosts \"<space separated hostlist>\" | -hostfile <absolute path of cluster configuration file> ] [ -advanced ] [ -verify] [ -exverify ] [ -logfile <desired absolute path of logfile> ] [-confirm] [-shared] [-help] [-usepassphrase] [-nopromptpassphrase]"

exit 1

fi

if [ -d $logfile ]; then

echo $logfile is a directory, setting logfile to $logfile/ssh.log

logfile=$logfile/ssh.log

fi

echo the output of this script is also logged into $logfile | tee -a $logfile

if [ `echo $?` != 0 ]; then

echo error writing to the logfile $logfile, exiting

exit 1

fi

echo hosts are $hosts | tee -a $logfile

echo user is $usr | tee -a $logfile

ssh="/usr/bin/ssh"

scp="/usr/bin/scp"

ssh_keygen="/usr/bin/ssh-keygen"

calculateos()

{

platform=`uname -s`

case "$platform"

in

"SunOS") os=solaris;;

"Linux") os=linux;;

"HP-UX") os=hpunix;;

"AIX") os=aix;;

*) echo "sorry, $platform is not currently supported." | tee -a $logfile

exit 1;;

esac

echo "platform:- $platform " | tee -a $logfile

}

calculateos

bits=1024

encr="rsa"

deadhosts=""

alivehosts=""

if [ "$platform" = "Linux" ]

then

ping="/bin/ping"

else

ping="/usr/sbin/ping"

fi

#bug 9044791

if [ -n "$ssh_path" ]; then

ssh=$ssh_path

fi

if [ -n "$scp_path" ]; then

scp=$scp_path

fi

if [ -n "$ssh_keygen_path" ]; then

ssh_keygen=$ssh_keygen_path

fi

if [ -n "$ping_path" ]; then

ping=$ping_path

fi

path_error=0

if test ! -x $ssh ; then

echo "ssh not found at $ssh. please set the variable ssh_path to the correct location of ssh and retry."

path_error=1

fi

if test ! -x $scp ; then

echo "scp not found at $scp. please set the variable scp_path to the correct location of scp and retry."

path_error=1

fi

if test ! -x $ssh_keygen ; then

echo "ssh-keygen not found at $ssh_keygen. please set the variable ssh_keygen_path to the correct location of ssh-keygen and retry."

path_error=1

fi

if test ! -x $ping ; then

echo "ping not found at $ping. please set the variable ping_path to the correct location of ping and retry."

path_error=1

fi

if [ $path_error = 1 ]; then

echo "error: one or more of the required binaries not found, exiting"

exit 1

fi

#9044791 end

echo checking if the remote hosts are reachable | tee -a $logfile

for host in $hosts

do

if [ "$platform" = "SunOS" ]; then

$ping -s $host 5 5

elif [ "$platform" = "HP-UX" ]; then

$ping $host -n 5 -m 5

else

$ping -c 5 -w 5 $host

fi

exitcode=$?

if [ $exitcode = 0 ]

then

alivehosts="$alivehosts $host"

else

deadhosts="$deadhosts $host"

fi

done

if test -z "$deadhosts"

then

echo remote host reachability check succeeded. | tee -a $logfile

echo the following hosts are reachable: $alivehosts. | tee -a $logfile

echo the following hosts are not reachable: $deadhosts. | tee -a $logfile

echo all hosts are reachable. proceeding further... | tee -a $logfile

else

echo remote host reachability check failed. | tee -a $logfile

echo the following hosts are reachable: $alivehosts. | tee -a $logfile

echo the following hosts are not reachable: $deadhosts. | tee -a $logfile

echo please ensure that all the hosts are up and re-run the script. | tee -a $logfile

echo exiting now... | tee -a $logfile

exit 1

fi

firsthost=`echo $hosts | awk '{print $1}'`

echo firsthost $firsthost

numhosts=`echo $hosts | awk 'END {print NF}'`

echo numhosts $numhosts

if [ $verify = "true" ]

then

echo since user has specified -verify option, ssh setup would not be done. only, existing ssh setup would be verified. | tee -a $logfile

: # no-op; with -verify the script skips setup and falls through to the verification section

else

echo the script will setup ssh connectivity from the host ''`hostname`'' to all | tee -a $logfile

echo the remote hosts. after the script is executed, the user can use ssh to run | tee -a $logfile

echo commands on the remote hosts or copy files between this host ''`hostname`'' | tee -a $logfile

echo and the remote hosts without being prompted for passwords or confirmations. | tee -a $logfile

echo | tee -a $logfile

echo note 1: | tee -a $logfile

echo as part of the setup procedure, this script will use 'ssh' and 'scp' to copy | tee -a $logfile

echo files between the local host and the remote hosts. since the script does not | tee -a $logfile

echo store passwords, you may be prompted for the passwords during the execution of | tee -a $logfile

echo the script whenever 'ssh' or 'scp' is invoked. | tee -a $logfile

echo | tee -a $logfile

echo note 2: | tee -a $logfile

echo "as per ssh requirements, this script will secure the user home directory" | tee -a $logfile

echo and the .ssh directory by revoking group and world write privileges to these | tee -a $logfile

echo "directories." | tee -a $logfile

echo | tee -a $logfile

echo "do you want to continue and let the script make the above mentioned changes (yes/no)?" | tee -a $logfile

if [ "$confirm" = "no" ]

then

read confirm

else

echo "confirmation provided on the command line" | tee -a $logfile

fi

echo | tee -a $logfile

echo the user chose ''$confirm'' | tee -a $logfile

if [ -z "$confirm" -o "$confirm" != "yes" -a "$confirm" != "no" ]

then

echo "you haven't specified proper input. please enter 'yes' or 'no'. exiting...."

exit 0

fi

if [ "$confirm" = "no" ]

then

echo "ssh setup is not done." | tee -a $logfile

exit 1

else

if [ $no_prompt_passphrase = "yes" ]

then

echo "user chose to skip passphrase related questions." | tee -a $logfile

else

if [ $shared = "true" ]

then

hostcount=`expr ${numhosts} + 1`

passphrase_prompt=`expr 2 \* $hostcount`

else

passphrase_prompt=`expr 2 \* ${numhosts}`

fi

echo "please specify whether you want to use a passphrase for the private key this script will create for the local host. a passphrase is used to encrypt the private key and makes ssh much more secure. type 'yes' or 'no' and then press enter. if you type 'yes', you would need to enter the passphrase whenever the script executes ssh or scp. $passphrase " | tee -a $logfile

echo "the estimated number of times the user would be prompted for a passphrase is $passphrase_prompt. in addition, if the private-public files are also newly created, the user would have to specify the passphrase on one additional occasion. " | tee -a $logfile

echo "enter 'yes' or 'no'." | tee -a $logfile

if [ "$passphrase" = "no" ]

then

read passphrase

else

echo "confirmation provided on the command line" | tee -a $logfile

fi

echo | tee -a $logfile

echo the user chose ''$passphrase'' | tee -a $logfile

if [ -z "$passphrase" -o "$passphrase" != "yes" -a "$passphrase" != "no" ]

then

echo "you haven't specified whether to use passphrase or not. please specify 'yes' or 'no'. exiting..."

exit 0

fi

if [ "$passphrase" = "yes" ]

then

rerun_sshkeygen="yes"

#checking for existence of ${identity} file

if test -f $HOME/.ssh/${identity}.pub && test -f $HOME/.ssh/${identity}

then

echo "the files containing the client public and private keys already exist on the local host. the current private key may or may not have a passphrase associated with it. if you remember the passphrase and do not want to re-run ssh-keygen, type 'no' and press enter. if you type 'no', the script will not attempt to create any new public/private key pairs. if you type 'yes', the script will remove the existing private/public key files and create new ones, prompting the user to enter the passphrase; any previous ssh user setups would be reset. if you type 'change', the script will associate a new passphrase with the old keys." | tee -a $logfile

echo "press 'yes', 'no' or 'change'" | tee -a $logfile

read rerun_sshkeygen

echo the user chose ''$rerun_sshkeygen'' | tee -a $logfile

if [ -z "$rerun_sshkeygen" -o "$rerun_sshkeygen" != "yes" -a "$rerun_sshkeygen" != "no" -a "$rerun_sshkeygen" != "change" ]

then

echo "you haven't specified whether to re-run 'ssh-keygen' or not. please enter 'yes' , 'no' or 'change'. exiting..."

exit 0;

fi

fi

else

if test -f $HOME/.ssh/${identity}.pub && test -f $HOME/.ssh/${identity}

then

echo "the files containing the client public and private keys already exist on the local host. the current private key may have a passphrase associated with it. if you find using a passphrase inconvenient (although it is more secure), you can change it to empty through this script. type 'change' if you want the script to change the passphrase for you. type 'no' if you want to keep your old passphrase, if you had one." | tee -a $logfile

read rerun_sshkeygen

echo the user chose ''$rerun_sshkeygen'' | tee -a $logfile

if [ -z "$rerun_sshkeygen" -o "$rerun_sshkeygen" != "yes" -a "$rerun_sshkeygen" != "no" -a "$rerun_sshkeygen" != "change" ]

then

echo "you haven't specified whether to re-run 'ssh-keygen' or not. please enter 'yes' , 'no' or 'change'. exiting..."

exit 0

fi

fi

fi

fi

echo creating .ssh directory on local host, if not present already | tee -a $logfile

mkdir -p $HOME/.ssh | tee -a $logfile

echo creating authorized_keys file on local host | tee -a $logfile

touch $HOME/.ssh/authorized_keys | tee -a $logfile

echo changing permissions on authorized_keys to 644 on local host | tee -a $logfile

chmod 644 $HOME/.ssh/authorized_keys | tee -a $logfile

mv -f $HOME/.ssh/authorized_keys $HOME/.ssh/authorized_keys.tmp | tee -a $logfile

echo creating known_hosts file on local host | tee -a $logfile

touch $HOME/.ssh/known_hosts | tee -a $logfile

echo changing permissions on known_hosts to 644 on local host | tee -a $logfile

chmod 644 $HOME/.ssh/known_hosts | tee -a $logfile

mv -f $HOME/.ssh/known_hosts $HOME/.ssh/known_hosts.tmp | tee -a $logfile

echo creating config file on local host | tee -a $logfile

echo if a config file exists already at $HOME/.ssh/config, it would be backed up to $HOME/.ssh/config.backup. | tee -a $logfile

echo "host *" > $HOME/.ssh/config.tmp

echo "forwardx11 no" >> $HOME/.ssh/config.tmp

if test -f $HOME/.ssh/config

then

cp -f $HOME/.ssh/config $HOME/.ssh/config.backup

fi

mv -f $HOME/.ssh/config.tmp $HOME/.ssh/config | tee -a $logfile

chmod 644 $HOME/.ssh/config

if [ "$rerun_sshkeygen" = "yes" ]

then

echo removing old private/public keys on local host | tee -a $logfile

rm -f $HOME/.ssh/${identity} | tee -a $logfile

rm -f $HOME/.ssh/${identity}.pub | tee -a $logfile

echo running ssh keygen on local host | tee -a $logfile

$ssh_keygen -t $encr -b $bits -f $HOME/.ssh/${identity} | tee -a $logfile

elif [ "$rerun_sshkeygen" = "change" ]

then

echo running ssh keygen on local host to change the passphrase associated with the existing private key | tee -a $logfile

$ssh_keygen -p -t $encr -b $bits -f $HOME/.ssh/${identity} | tee -a $logfile

elif test -f $HOME/.ssh/${identity}.pub && test -f $HOME/.ssh/${identity}

then

: # keys already exist and the user chose to keep them

else

echo removing old private/public keys on local host | tee -a $logfile

rm -f $HOME/.ssh/${identity} | tee -a $logfile

rm -f $HOME/.ssh/${identity}.pub | tee -a $logfile

echo running ssh keygen on local host with empty passphrase | tee -a $logfile

$ssh_keygen -t $encr -b $bits -f $HOME/.ssh/${identity} -N '' | tee -a $logfile

fi

if [ $shared = "true" ]

then

if [ "$USER" = "$usr" ]

then

#no remote operations required

echo remote user is same as local user | tee -a $logfile

remotehosts=""

chmod og-w $HOME $HOME/.ssh | tee -a $logfile

else

remotehosts="${firsthost}"

fi

else

remotehosts="$hosts"

fi

for host in $remotehosts

do

echo creating .ssh directory and setting permissions on remote host $host | tee -a $logfile

echo "the script would also be revoking write permissions for 'group' and 'others' on the home directory for $usr. this is an ssh requirement." | tee -a $logfile

echo the script would create ~$usr/.ssh/config file on remote host $host. if a config file exists already at ~$usr/.ssh/config, it would be backed up to ~$usr/.ssh/config.backup. | tee -a $logfile

echo the user may be prompted for a password here since the script would be running ssh on host $host. | tee -a $logfile

$ssh -o stricthostkeychecking=no -x -l $usr $host "/bin/sh -c \" mkdir -p .ssh ; chmod og-w . .ssh; touch .ssh/authorized_keys .ssh/known_hosts; chmod 644 .ssh/authorized_keys .ssh/known_hosts; cp .ssh/authorized_keys .ssh/authorized_keys.tmp ; cp .ssh/known_hosts .ssh/known_hosts.tmp; echo \\\"host *\\\" > .ssh/config.tmp; echo \\\"forwardx11 no\\\" >> .ssh/config.tmp; if test -f .ssh/config ; then cp -f .ssh/config .ssh/config.backup; fi ; mv -f .ssh/config.tmp .ssh/config\"" | tee -a $logfile

echo done with creating .ssh directory and setting permissions on remote host $host. | tee -a $logfile

done

for host in $remotehosts

do

echo copying local host public key to the remote host $host | tee -a $logfile

echo the user may be prompted for a password or passphrase here since the script would be using scp for host $host. | tee -a $logfile

$scp $HOME/.ssh/${identity}.pub $usr@$host:.ssh/authorized_keys | tee -a $logfile

echo done copying local host public key to the remote host $host | tee -a $logfile

done

cat $HOME/.ssh/${identity}.pub >> $HOME/.ssh/authorized_keys | tee -a $logfile

for host in $hosts

do

if [ "$advanced" = "true" ]

then

echo creating keys on remote host $host if they do not exist already. this is required to setup ssh on host $host. | tee -a $logfile

if [ "$shared" = "true" ]

then

identity_file_name=${identity}_$host

coalesce_identity_files_command="cat .ssh/${identity_file_name}.pub >> .ssh/authorized_keys"

else

identity_file_name=${identity}

fi

$ssh -o stricthostkeychecking=no -x -l $usr $host " /bin/sh -c \"if test -f .ssh/${identity_file_name}.pub && test -f .ssh/${identity_file_name}; then echo; else rm -f .ssh/${identity_file_name} ; rm -f .ssh/${identity_file_name}.pub ; $ssh_keygen -t $encr -b $bits -f .ssh/${identity_file_name} -N '' ; fi; ${coalesce_identity_files_command} \"" | tee -a $logfile

else

#at least get the host keys from all hosts for shared case - advanced option not set

if test $shared = "true" && test $advanced = "false"

then

if [ "$passphrase" = "yes" ]

then

echo "the script will fetch the host keys from all hosts. the user may be prompted for a passphrase here in case the private key has been encrypted with a passphrase." | tee -a $logfile

fi

$ssh -o stricthostkeychecking=no -x -l $usr $host "/bin/sh -c true"

fi

fi

done

for host in $remotehosts

do

if test $advanced = "true" && test $shared = "false"

then

$scp $usr@$host:.ssh/${identity}.pub $HOME/.ssh/${identity}.pub.$host | tee -a $logfile

cat $HOME/.ssh/${identity}.pub.$host >> $HOME/.ssh/authorized_keys | tee -a $logfile

rm -f $HOME/.ssh/${identity}.pub.$host | tee -a $logfile

fi

done

for host in $remotehosts

do

if [ "$advanced" = "true" ]

then

if [ "$shared" != "true" ]

then

echo updating authorized_keys file on remote host $host | tee -a $logfile

$scp $HOME/.ssh/authorized_keys $usr@$host:.ssh/authorized_keys | tee -a $logfile

fi

echo updating known_hosts file on remote host $host | tee -a $logfile

$scp $HOME/.ssh/known_hosts $usr@$host:.ssh/known_hosts | tee -a $logfile

fi

if [ "$passphrase" = "yes" ]

then

echo "the script will run ssh on the remote machine $host. the user may be prompted for a passphrase here in case the private key has been encrypted with a passphrase." | tee -a $logfile

fi

$ssh -x -l $usr $host "/bin/sh -c \"cat .ssh/authorized_keys.tmp >> .ssh/authorized_keys; cat .ssh/known_hosts.tmp >> .ssh/known_hosts; rm -f .ssh/known_hosts.tmp .ssh/authorized_keys.tmp\"" | tee -a $logfile

done

cat $HOME/.ssh/known_hosts.tmp >> $HOME/.ssh/known_hosts | tee -a $logfile

cat $HOME/.ssh/authorized_keys.tmp >> $HOME/.ssh/authorized_keys | tee -a $logfile

#added chmod to fix bug no 5238814

chmod 644 $HOME/.ssh/authorized_keys

#fix for bug no 5157782

chmod 644 $HOME/.ssh/config

rm -f $HOME/.ssh/known_hosts.tmp $HOME/.ssh/authorized_keys.tmp | tee -a $logfile

echo ssh setup is complete. | tee -a $logfile

fi

fi

echo | tee -a $logfile

echo ------------------------------------------------------------------------ | tee -a $logfile

echo verifying ssh setup | tee -a $logfile

echo =================== | tee -a $logfile

echo the script will now run the 'date' command on the remote nodes using ssh | tee -a $logfile

echo to verify if ssh is set up correctly. if ssh is set up correctly, | tee -a $logfile

echo there should be no output other than the date and ssh should not ask for | tee -a $logfile

echo passwords. if you see any output other than date or are prompted for the | tee -a $logfile

echo password, ssh is not setup correctly and you will need to resolve the | tee -a $logfile

echo issue and set up ssh again. | tee -a $logfile

echo the possible causes for failure could be: | tee -a $logfile

echo 1. the server settings in /etc/ssh/sshd_config file do not allow ssh | tee -a $logfile

echo for user $usr. | tee -a $logfile

echo 2. the server may have disabled public key based authentication. | tee -a $logfile

echo 3. the client public key on the server may be outdated. | tee -a $logfile

echo 4. ~$usr or ~$usr/.ssh on the remote host may not be owned by $usr. | tee -a $logfile

echo 5. user may not have passed -shared option for shared remote users or | tee -a $logfile

echo may be passing the -shared option for non-shared remote users. | tee -a $logfile

echo 6. if there is output in addition to the date, but no password is asked, | tee -a $logfile

echo it may be a security alert shown as part of company policy. append the | tee -a $logfile

echo "additional text to the /sysman/prov/resources/ignoremessages.txt file." | tee -a $logfile

echo ------------------------------------------------------------------------ | tee -a $logfile

#read -t 30 dummy

for host in $hosts

do

echo --$host:-- | tee -a $logfile

echo running $ssh -x -l $usr $host date to verify ssh connectivity has been setup from local host to $host. | tee -a $logfile

echo "if you see any other output besides the output of the date command or if you are prompted for a password here, it means ssh setup has not been successful. please note that being prompted for a passphrase may be ok but being prompted for a password is an error." | tee -a $logfile

if [ "$passphrase" = "yes" ]

then

echo "the script will run ssh on the remote machine $host. the user may be prompted for a passphrase here in case the private key has been encrypted with a passphrase." | tee -a $logfile

fi

$ssh -l $usr $host "/bin/sh -c date" | tee -a $logfile

echo ------------------------------------------------------------------------ | tee -a $logfile

done

if [ "$exhaustive_verify" = "true" ]

then

for clienthost in $hosts

do

if [ "$shared" = "true" ]

then

remotessh="$ssh -i .ssh/${identity}_${clienthost}"

else

remotessh=$ssh

fi

for serverhost in $hosts

do

echo ------------------------------------------------------------------------ | tee -a $logfile

echo verifying ssh connectivity has been setup from $clienthost to $serverhost | tee -a $logfile

echo ------------------------------------------------------------------------ | tee -a $logfile

echo "if you see any other output besides the output of the date command or if you are prompted for a password here, it means ssh setup has not been successful." | tee -a $logfile

$ssh -l $usr $clienthost "$remotessh $serverhost \"/bin/sh -c date\"" | tee -a $logfile

echo ------------------------------------------------------------------------ | tee -a $logfile

done

echo -verification from $clienthost complete- | tee -a $logfile

done

else

if [ "$advanced" = "true" ]

then

if [ "$shared" = "true" ]

then

remotessh="$ssh -i .ssh/${identity}_${firsthost}"

else

remotessh=$ssh

fi

for host in $hosts

do

echo ------------------------------------------------------------------------ | tee -a $logfile

echo verifying ssh connectivity has been setup from $firsthost to $host | tee -a $logfile

echo "if you see any other output besides the output of the date command or if you are prompted for a password here, it means ssh setup has not been successful." | tee -a $logfile

$ssh -l $usr $firsthost "$remotessh $host \"/bin/sh -c date\"" | tee -a $logfile

echo ------------------------------------------------------------------------ | tee -a $logfile

done

echo -verification from $firsthost complete- | tee -a $logfile

fi

fi

echo "ssh verification complete." | tee -a $logfile
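The -help text above describes a manual, four-step test for whether a user's home directory is shared. The sketch below automates those steps under some assumptions. The caller supplies a command runner that is invoked as `RUNNER host 'command'`; the script itself would use ssh for this. The caller also passes a home-directory argument such as `'~oracle'`, quoted so the local shell does not expand it. `home_is_shared` and `run_ssh` are hypothetical names. The sketch only checks that the marker file is visible on every host; unlike the manual procedure, it does not compare the copies.

```shell
#!/bin/sh
# home_is_shared RUNNER DIR HOST1 [HOST2 ...]
#   RUNNER: command invoked as "RUNNER host 'shell command'"
#   DIR:    home directory as the remote shell should see it, e.g. '~oracle'
# Returns 0 when a marker created on HOST1 is visible on every other host.
home_is_shared() {
    run=$1
    dir=$2
    shift 2
    first=$1
    shift
    marker="$dir/checksharedhome.$$.tmp"

    # step 1: create the marker on the first host
    $run "$first" "touch $marker" || return 1

    # steps 2-3: the marker must be visible on every other host
    all_seen=true
    for host in "$@"; do
        $run "$host" "ls $marker" >/dev/null 2>&1 || all_seen=false
    done

    # step 4: clean up on the first host
    $run "$first" "rm -f $marker"
    [ "$all_seen" = true ]
}

# hypothetical runner over ssh (assumes login to each host already works):
run_ssh() { ssh -x "$1" "$2"; }
# home_is_shared run_ssh '~oracle' pcs01 pcs02 && echo "use the -shared option"
```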
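With -hostfile, the script keeps the first column of every line whose first field does not start with `#`. Below is a minimal sketch of that awk step, run against the sample lines from the -help text. `hosts_from_file` and the temporary file are illustrative only. awk keywords are case-sensitive: `END` must be upper case. A lower-case `end` is parsed as an empty variable used as a pattern, so nothing would be printed.

```shell
#!/bin/sh
# hosts_from_file FILE: hypothetical helper mirroring the -hostfile branch;
# skips lines whose first field starts with '#', keeps column 1 of the rest
hosts_from_file() {
    awk '$1 !~ /^#/ { str = str " " $1 } END { print str }' "$1"
}

# sample cluster configuration file based on the -help text
sample=$(mktemp)
cat > "$sample" <<'EOF'
# host  private-name  private-ip  vip  -
stacg30 stacg30int 10.1.0.0 stacg30v -
stacg34 stacg34int 10.1.0.1 stacg34v -
EOF

hosts=$(hosts_from_file "$sample")
echo "hosts are:$hosts"   # the awk program emits a leading space
rm -f "$sample"
```

This prints `hosts are: stacg30 stacg34`. The leading space is harmless because the script later iterates over `$hosts` unquoted.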
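The script chooses platform names and ping arguments from the output of `uname -s`, which is mixed case (for example `Linux`, `SunOS`, `HP-UX`, `AIX`). The sketch below shows the same two decisions as standalone functions, so the matching can be checked without running ping. `os_for_platform` and `ping_args` are hypothetical names. The ping argument lists are copied from the script; the meaning of each number differs between ping implementations, so check the local ping(1) manual page.

```shell
#!/bin/sh
# os_for_platform NAME: map `uname -s` output to the short names the script uses.
# The case patterns must match uname's exact capitalisation.
os_for_platform() {
    case "$1" in
        SunOS) echo solaris ;;
        Linux) echo linux ;;
        HP-UX) echo hpunix ;;
        AIX)   echo aix ;;
        *)     echo unsupported; return 1 ;;
    esac
}

# ping_args PLATFORM HOST: the ping arguments the script uses on each platform
ping_args() {
    case "$1" in
        SunOS) echo "-s $2 5 5" ;;
        HP-UX) echo "$2 -n 5 -m 5" ;;
        *)     echo "-c 5 -w 5 $2" ;;
    esac
}

platform=$(uname -s)
echo "platform $platform -> $(os_for_platform "$platform")"
echo "ping $(ping_args "$platform" pcs02)"
```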
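The reachability check in the script pings each host and splits the list into `alivehosts` and `deadhosts`. It exits if any host is unreachable. Below is a minimal sketch of that loop with the probe command passed in as a parameter, so the logic can be exercised without network access. `partition_hosts` and `linux_ping` are hypothetical names; the script itself calls `$ping` directly.

```shell
#!/bin/sh
# partition_hosts PROBE HOST... : sketch of the script's reachability loop.
# PROBE is any command that succeeds when a host answers (the script uses ping).
# Sets alivehosts/deadhosts like the script; fails if any host is unreachable.
partition_hosts() {
    probe=$1
    shift
    alivehosts=""
    deadhosts=""
    for host in "$@"; do
        if $probe "$host" >/dev/null 2>&1; then
            alivehosts="$alivehosts $host"
        else
            deadhosts="$deadhosts $host"
        fi
    done
    [ -z "$deadhosts" ]
}

# Linux probe with the script's flags: 5 packets, 5-second deadline
linux_ping() { ping -c 5 -w 5 "$1"; }

# usage (commented out so that sourcing the sketch sends no packets):
# partition_hosts linux_ping pcs01 pcs02 || echo "not reachable:$deadhosts"
```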
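The script derives the first host and the host count from the space-separated host list. It then uses `expr` to estimate how many passphrase prompts to expect: 2 × (hosts + 1) for shared homes and 2 × hosts otherwise. The sketch below reproduces the same values, using POSIX `$(( ))` arithmetic in place of `expr`. `estimate_prompts` is a hypothetical name.

```shell
#!/bin/sh
hosts="pcs01 pcs02 pcs03"
firsthost=$(echo $hosts | awk '{ print $1 }')
# the list is a single line, so NF of that line is the host count
numhosts=$(echo $hosts | awk '{ print NF }')

# estimate_prompts N SHARED: the script's estimate of passphrase prompts
estimate_prompts() {
    if [ "$2" = "true" ]; then
        echo $((2 * ($1 + 1)))
    else
        echo $((2 * $1))
    fi
}

echo "firsthost=$firsthost numhosts=$numhosts"
echo "prompts (non-shared): $(estimate_prompts "$numhosts" false)"
echo "prompts (shared): $(estimate_prompts "$numhosts" true)"
```

With three hosts, this prints `firsthost=pcs01 numhosts=3`, followed by 6 prompts for non-shared homes and 8 for shared homes.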
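The script validates answers with tests such as `[ -z "$confirm" -o "$confirm" != "yes" -a "$confirm" != "no" ]`. This works because `-a` binds tighter than `-o`, but POSIX marks both operators obsolescent. Below is a sketch of the same check written as a small helper that avoids the precedence question. `is_one_of` is a hypothetical name, not part of the script.

```shell
#!/bin/sh
# is_one_of ANSWER ALLOWED... : succeed when ANSWER equals one of ALLOWED
# (an empty ANSWER never matches, so it is rejected as the script does)
is_one_of() {
    answer=$1
    shift
    for allowed in "$@"; do
        [ "$answer" = "$allowed" ] && return 0
    done
    return 1
}

# same decision as the script's check on $confirm
confirm=maybe
if ! is_one_of "$confirm" yes no; then
    echo "you haven't specified proper input. please enter 'yes' or 'no'."
fi

# the ssh-keygen re-run prompt accepts a third answer
is_one_of change yes no change && echo "'change' accepted"
```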
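The script appends public keys to authorized_keys with `cat ... >>`, so every re-run adds duplicate lines. sshd tolerates duplicates, but the file keeps growing. The sketch below is an idempotent variation, not what the script does. `append_key_once` is a hypothetical helper that appends each key line only when an identical line is not already present, and keeps the 644 mode the script sets.

```shell
#!/bin/sh
# append_key_once PUBKEY_FILE AUTH_FILE
# Appends every non-empty line of PUBKEY_FILE to AUTH_FILE unless AUTH_FILE
# already contains an identical line; creates AUTH_FILE if needed.
append_key_once() {
    touch "$2" || return 1
    chmod 644 "$2"
    while IFS= read -r key; do
        [ -n "$key" ] || continue
        # -x: whole-line match, -F: fixed string, -e: key may start with '-'
        grep -qxF -e "$key" "$2" || printf '%s\n' "$key" >> "$2"
    done < "$1"
}

# usage, in place of `cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys`:
# append_key_once "$HOME/.ssh/id_rsa.pub" "$HOME/.ssh/authorized_keys"
```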
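The verification section checks a different set of host pairs depending on the options, as the -help examples describe. It always tests the local host against every remote host. With -exverify it adds every remote pair; with -advanced alone it adds the first remote host against every host. The sketch below only prints those pairs and runs no ssh. `verify_pairs` is a hypothetical name.

```shell
#!/bin/sh
# verify_pairs LOCAL ADVANCED EXVERIFY HOST...
# Prints "client -> server" for each ssh path the script would test.
verify_pairs() {
    localhost=$1
    advanced=$2
    exverify=$3
    shift 3
    first=$1
    for h in "$@"; do
        echo "$localhost -> $h"
    done
    if [ "$exverify" = "true" ]; then
        for c in "$@"; do
            for s in "$@"; do
                echo "$c -> $s"
            done
        done
    elif [ "$advanced" = "true" ]; then
        for h in "$@"; do
            echo "$first -> $h"
        done
    fi
}

# help-text example: local host z, remote hosts a, b and c, -verify -advanced
verify_pairs z true false a b c
```

This prints six pairs: z -> a, z -> b, z -> c, a -> a, a -> b and a -> c. With -exverify instead, the output grows to 3 + 9 = 12 pairs.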