Preface

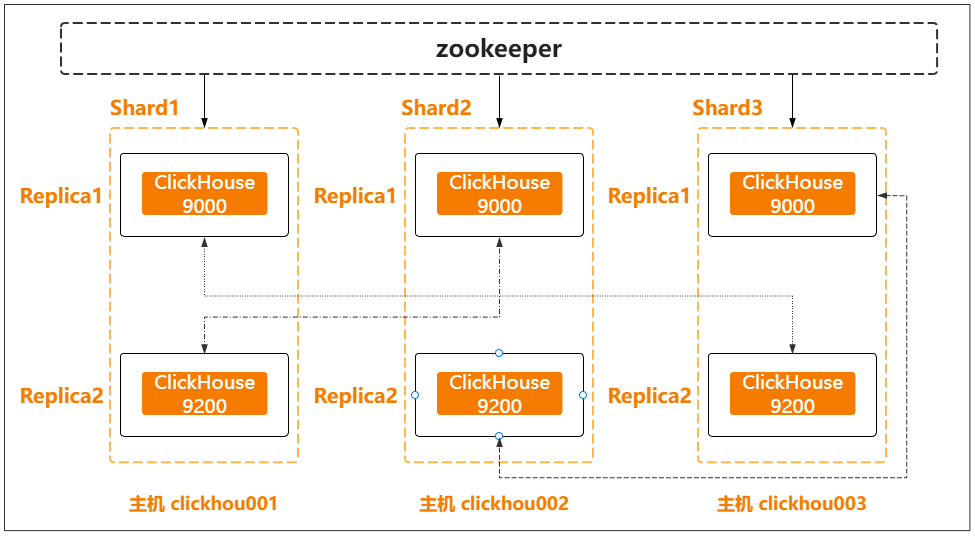

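This article walks through deploying a ClickHouse cluster with 3 shards and 2 replicas per shard on CentOS 7.

1 Environment

1.1 Topology diagram

1.2 Environment information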

| Hostname | IP | Port | Service | Configuration files |
|---|---|---|---|---|
| clickhouse001 | 192.168.6.8 | 2181 | zookeeper | /etc/zookeeper/zoo.cfg |
| clickhouse002 | 192.168.6.6 | 2181 | zookeeper | /etc/zookeeper/zoo.cfg |
| clickhouse003 | 192.168.6.13 | 2181 | zookeeper | /etc/zookeeper/zoo.cfg |
| clickhouse001 | 192.168.6.8 | 9000/8123 | clickhouse client and server | /etc/clickhouse-server/config_9000.xml /etc/clickhouse-server/users01.xml /etc/clickhouse-server/metrika01.xml |
| clickhouse001 | 192.168.6.8 | 9200/8223 | clickhouse client and server | /etc/clickhouse-server/config_9200.xml /etc/clickhouse-server/users02.xml /etc/clickhouse-server/metrika02.xml |
| clickhouse002 | 192.168.6.6 | 9000/8123 | clickhouse client and server | /etc/clickhouse-server/config_9000.xml /etc/clickhouse-server/users01.xml /etc/clickhouse-server/metrika01.xml |
| clickhouse002 | 192.168.6.6 | 9200/8223 | clickhouse client and server | /etc/clickhouse-server/config_9200.xml /etc/clickhouse-server/users02.xml /etc/clickhouse-server/metrika02.xml |
| clickhouse003 | 192.168.6.13 | 9000/8123 | clickhouse client and server | /etc/clickhouse-server/config_9000.xml /etc/clickhouse-server/users01.xml /etc/clickhouse-server/metrika01.xml |
| clickhouse003 | 192.168.6.13 | 9200/8223 | clickhouse client and server | /etc/clickhouse-server/config_9200.xml /etc/clickhouse-server/users02.xml /etc/clickhouse-server/metrika02.xml |

The steps below use clickhou001 as the example; steps that must be run on all three machines are marked as such.

2 ZooKeeper installation

2.1 zoo.cfg configuration

Run on all three machines.

[ root@clickhou001:~ ]# cat /etc/zookeeper/zoo.cfg
dataDir=/data/zookeeper
# the port at which the clients will connect
clientPort=2181
server.1=192.168.6.8:2888:3888
server.2=192.168.6.6:2888:3888
server.3=192.168.6.13:2888:3888

[ root@clickhou001:~ ]# echo stat|nc localhost 2181 | grep version
Zookeeper version: 3.4.13

Official documentation: https://zookeeper.apache.org/doc/r3.4.14/zookeeperStarted.html
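In a ZooKeeper ensemble, each server also needs a myid file in its dataDir whose content is the N of its own server.N line. The article does not show that step, so the following is only a minimal sketch under that assumption; myid_for_ip is a hypothetical helper name, not a ZooKeeper tool.

```shell
# Hypothetical helper: print N for the "server.N=<ip>:..." line that matches an IP.
myid_for_ip() {
    local cfg="$1" ip="$2" ip_re
    # Escape the dots so they match literally in the sed pattern.
    ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
    sed -n "s/^server\.\([0-9][0-9]*\)=${ip_re}:.*/\1/p" "$cfg"
}

# Usage on a real host (paths taken from the zoo.cfg above), for example on 192.168.6.8:
#   myid_for_ip /etc/zookeeper/zoo.cfg 192.168.6.8 > /data/zookeeper/myid
```

The function prints nothing when the IP is not listed, so an empty myid file is a sign that the wrong address was passed.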

2.2 Start on boot

[ root@clickhou001:~ ]# cat /etc/systemd/system/zookeeper.service
[Unit]
Description=zookeeper service
Requires=network.target
After=syslog.target

[Service]
Type=forking
User=zookeeper
Group=zookeeper
ExecStart=/usr/local/zookeeper/bin/zkServer.sh start /etc/zookeeper/zoo.cfg
ExecStop=/usr/local/zookeeper/bin/zkServer.sh stop /etc/zookeeper/zoo.cfg
ExecReload=/usr/local/zookeeper/bin/zkServer.sh restart /etc/zookeeper/zoo.cfg

[Install]
WantedBy=default.target

systemctl enable zookeeper.service

3 ClickHouse installation

3.1 rpm installation

Run on all three machines.

yum -y install yum-utils
rpm --import https://repo.clickhouse.com/CLICKHOUSE-KEY.GPG
yum-config-manager --add-repo https://repo.clickhouse.com/rpm/stable/x86_64
yum install clickhouse-server clickhouse-client

Official documentation: https://clickhouse.com/docs/en/install

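3.2 config.xml configuration

Run on all three machines. The display_name values on the other two hosts are as follows (分片 = shard, 副本 = replica):

In config_9000.xml on clickhou002: [clickhou002] {分片2-副本1} {9000} >
In config_9200.xml on clickhou002: [clickhou002] {分片1-副本2} {9200} >
In config_9000.xml on clickhou003: [clickhou003] {分片3-副本1} {9000} >
In config_9200.xml on clickhou003: [clickhou003] {分片2-副本2} {9200} >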

Default content is omitted; only the settings that need to change are shown, mainly the ports and the related directories.

[ root@clickhou001:~ ]# cat /etc/clickhouse-server/config_9000.xml
<yandex>
  <logger>
    <level>trace</level>
    <log>/data/clickhouse/clickhouse_9000/log/clickhouse-server.log</log>
    <errorlog>/data/clickhouse/clickhouse_9000/log/clickhouse-server.err.log</errorlog>
  </logger>
  <display_name>[clickhou001] {分片1-副本1} {9000} > </display_name>
  <http_port>8123</http_port>
  <tcp_port>9000</tcp_port>
  <mysql_port>9004</mysql_port>
  <postgresql_port>9005</postgresql_port>
  <interserver_http_port>9009</interserver_http_port>
  <interserver_http_host>192.168.6.8</interserver_http_host>
  <listen_host>0.0.0.0</listen_host>
  <openssl>
    <server>
      <certificateFile>/etc/clickhouse-server/server.crt</certificateFile>
      <privateKeyFile>/etc/clickhouse-server/server.key</privateKeyFile>
    </server>
  </openssl>
  <path>/data/clickhouse/clickhouse_9000/</path>
  <tmp_path>/data/clickhouse/clickhouse_9000/tmp/</tmp_path>
  <user_files_path>/data/clickhouse/clickhouse_9000/user_files/</user_files_path>
  <user_directories>
    <users_xml>
      <path>users01.xml</path>
    </users_xml>
    <local_directory>
      <path>/data/clickhouse/clickhouse_9000/access/</path>
    </local_directory>
  </user_directories>
  <format_schema_path>/data/clickhouse/clickhouse_9000/format_schemas/</format_schema_path>
</yandex>

[ root@clickhou001:~ ]# cat /etc/clickhouse-server/config_9200.xml

<yandex>
  <logger>
    <level>trace</level>
    <log>/data/clickhouse/clickhouse_9200/log/clickhouse-server.log</log>
    <errorlog>/data/clickhouse/clickhouse_9200/log/clickhouse-server.err.log</errorlog>
  </logger>
  <display_name>[clickhou001] {分片3-副本2} {9200} > </display_name>
  <http_port>8223</http_port>
  <tcp_port>9200</tcp_port>
  <mysql_port>9204</mysql_port>
  <postgresql_port>9205</postgresql_port>
  <interserver_http_port>9209</interserver_http_port>
  <interserver_http_host>192.168.6.8</interserver_http_host>
  <listen_host>0.0.0.0</listen_host>
  <openssl>
    <server>
      <certificateFile>/etc/clickhouse-server/server.crt</certificateFile>
      <privateKeyFile>/etc/clickhouse-server/server.key</privateKeyFile>
    </server>
  </openssl>
  <path>/data/clickhouse/clickhouse_9200/</path>
  <tmp_path>/data/clickhouse/clickhouse_9200/tmp/</tmp_path>
  <user_files_path>/data/clickhouse/clickhouse_9200/user_files/</user_files_path>
  <user_directories>
    <users_xml>
      <path>users02.xml</path>
    </users_xml>
    <local_directory>
      <path>/data/clickhouse/clickhouse_9200/access/</path>
    </local_directory>
  </user_directories>
  <format_schema_path>/data/clickhouse/clickhouse_9200/format_schemas/</format_schema_path>
</yandex>
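Both instance configs point at their own directory tree under /data/clickhouse. A minimal sketch for creating those directories before the first start, assuming they do not exist yet and that the server runs as the clickhouse user; make_instance_dirs is a hypothetical helper name, not part of ClickHouse.

```shell
# Hypothetical helper: create the per-instance directories referenced by the configs.
# $1 = base directory (the article uses /data/clickhouse), remaining args = TCP ports.
make_instance_dirs() {
    local base="$1" port sub
    shift
    for port in "$@"; do
        for sub in log tmp user_files access format_schemas; do
            mkdir -p "${base}/clickhouse_${port}/${sub}"
        done
    done
}

# Usage on a real host (as root):
#   make_instance_dirs /data/clickhouse 9000 9200
#   chown -R clickhouse:clickhouse /data/clickhouse
```

Taking the base directory as a parameter keeps the helper safe to try against a scratch directory first.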

3.3 metrika.xml configuration

[ root@clickhou001:~ ]# cat /etc/clickhouse-server/metrika01.xml
<yandex>
  <zookeeper-servers>
    <node index="1">
      <host>192.168.6.8</host>
      <port>2181</port>
    </node>
    <node index="2">
      <host>192.168.6.6</host>
      <port>2181</port>
    </node>
    <node index="3">
      <host>192.168.6.13</host>
      <port>2181</port>
    </node>
  </zookeeper-servers>
  <clickhouse_remote_servers>
    <cluster_slowlog>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.8</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.13</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.6</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.8</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.13</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.6</host>
          <port>9200</port>
        </replica>
      </shard>
    </cluster_slowlog>
  </clickhouse_remote_servers>
  <macros>
    <cluster>cluster_slowlog</cluster>
    <shard>01</shard>
    <replica>click1-shard01-replica01</replica>
  </macros>
  <networks>
    <ip>::/0</ip>
  </networks>
  <clickhouse_compression>
    <case>
      <min_part_size>10000000000</min_part_size>
      <min_part_size_ratio>0.01</min_part_size_ratio>
      <method>lz4</method>
    </case>
  </clickhouse_compression>
</yandex>
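The metrika files in this section share the same ZooKeeper and cluster definitions and differ only in the macros block, so a copy-and-paste slip there is easy to miss. A minimal sketch for printing the macros of each file side by side; macro_value is a hypothetical helper name, and the sed-based parsing assumes each macro sits on a single line, as in the listings here.

```shell
# Hypothetical helper: print the value of one macro (cluster, shard or replica)
# from the <macros> section of a metrika file.
macro_value() {
    local file="$1" name="$2"
    # Restrict to the <macros> block so the <shard>/<replica> container elements
    # inside the cluster definition are ignored.
    sed -n '/<macros>/,/<\/macros>/p' "$file" |
        sed -n "s:.*<${name}>\(.*\)</${name}>.*:\1:p"
}

# Usage on a real host (file names as used in this article):
#   for f in /etc/clickhouse-server/metrika01.xml /etc/clickhouse-server/metrika02.xml; do
#       echo "$f shard=$(macro_value "$f" shard) replica=$(macro_value "$f" replica)"
#   done
```

The shard value and the shard number embedded in the replica name should agree for every instance; a mismatch usually means a macros block was copied from another file.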

[ root@clickhou001:~ ]# cat /etc/clickhouse-server/metrika02.xml
<yandex>
  <zookeeper-servers>
    <node index="1">
      <host>192.168.6.8</host>
      <port>2181</port>
    </node>
    <node index="2">
      <host>192.168.6.6</host>
      <port>2181</port>
    </node>
    <node index="3">
      <host>192.168.6.13</host>
      <port>2181</port>
    </node>
  </zookeeper-servers>
  <clickhouse_remote_servers>
    <cluster_slowlog>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.8</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.13</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.6</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.8</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.13</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.6</host>
          <port>9200</port>
        </replica>
      </shard>
    </cluster_slowlog>
  </clickhouse_remote_servers>
  <macros>
    <cluster>cluster_slowlog</cluster>
    <shard>03</shard>
    <replica>click3-shard03-replica02</replica>
  </macros>
  <networks>
    <ip>::/0</ip>
  </networks>
  <clickhouse_compression>
    <case>
      <min_part_size>10000000000</min_part_size>
      <min_part_size_ratio>0.01</min_part_size_ratio>
      <method>lz4</method>
    </case>
  </clickhouse_compression>
</yandex>

[ root@clickhou002:~ ]# cat /etc/clickhouse-server/metrika01.xml
<yandex>
  <zookeeper-servers>
    <node index="1">
      <host>192.168.6.8</host>
      <port>2181</port>
    </node>
    <node index="2">
      <host>192.168.6.6</host>
      <port>2181</port>
    </node>
    <node index="3">
      <host>192.168.6.13</host>
      <port>2181</port>
    </node>
  </zookeeper-servers>
  <clickhouse_remote_servers>
    <cluster_slowlog>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.8</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.13</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.6</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.8</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.13</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.6</host>
          <port>9200</port>
        </replica>
      </shard>
    </cluster_slowlog>
  </clickhouse_remote_servers>
  <macros>
    <cluster>cluster_slowlog</cluster>
    <shard>02</shard>
    <replica>click2-shard02-replica01</replica>
  </macros>
  <networks>
    <ip>::/0</ip>
  </networks>
  <clickhouse_compression>
    <case>
      <min_part_size>10000000000</min_part_size>
      <min_part_size_ratio>0.01</min_part_size_ratio>
      <method>lz4</method>
    </case>
  </clickhouse_compression>
</yandex>

[ root@clickhou002:~ ]# cat /etc/clickhouse-server/metrika02.xml
<yandex>
  <zookeeper-servers>
    <node index="1">
      <host>192.168.6.8</host>
      <port>2181</port>
    </node>
    <node index="2">
      <host>192.168.6.6</host>
      <port>2181</port>
    </node>
    <node index="3">
      <host>192.168.6.13</host>
      <port>2181</port>
    </node>
  </zookeeper-servers>
  <clickhouse_remote_servers>
    <cluster_slowlog>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.8</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.13</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.6</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.8</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.13</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.6</host>
          <port>9200</port>
        </replica>
      </shard>
    </cluster_slowlog>
  </clickhouse_remote_servers>
  <macros>
    <cluster>cluster_slowlog</cluster>
    <shard>01</shard>
    <replica>click1-shard01-replica02</replica>
  </macros>
  <networks>
    <ip>::/0</ip>
  </networks>
  <clickhouse_compression>
    <case>
      <min_part_size>10000000000</min_part_size>
      <min_part_size_ratio>0.01</min_part_size_ratio>
      <method>lz4</method>
    </case>
  </clickhouse_compression>
</yandex>

[ root@clickhou003:~ ]# cat /etc/clickhouse-server/metrika01.xml
<yandex>
  <zookeeper-servers>
    <node index="1">
      <host>192.168.6.8</host>
      <port>2181</port>
    </node>
    <node index="2">
      <host>192.168.6.6</host>
      <port>2181</port>
    </node>
    <node index="3">
      <host>192.168.6.13</host>
      <port>2181</port>
    </node>
  </zookeeper-servers>
  <clickhouse_remote_servers>
    <cluster_slowlog>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.8</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.13</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.6</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.8</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.13</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.6</host>
          <port>9200</port>
        </replica>
      </shard>
    </cluster_slowlog>
  </clickhouse_remote_servers>
  <macros>
    <cluster>cluster_slowlog</cluster>
    <shard>03</shard>
    <replica>click3-shard03-replica01</replica>
  </macros>
  <networks>
    <ip>::/0</ip>
  </networks>
  <clickhouse_compression>
    <case>
      <min_part_size>10000000000</min_part_size>
      <min_part_size_ratio>0.01</min_part_size_ratio>
      <method>lz4</method>
    </case>
  </clickhouse_compression>
</yandex>

[ root@clickhou003:~ ]# cat /etc/clickhouse-server/metrika02.xml
<yandex>
  <zookeeper-servers>
    <node index="1">
      <host>192.168.6.8</host>
      <port>2181</port>
    </node>
    <node index="2">
      <host>192.168.6.6</host>
      <port>2181</port>
    </node>
    <node index="3">
      <host>192.168.6.13</host>
      <port>2181</port>
    </node>
  </zookeeper-servers>
  <clickhouse_remote_servers>
    <cluster_slowlog>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.8</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.13</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.6</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.8</host>
          <port>9200</port>
        </replica>
      </shard>
      <shard>
        <weight>1</weight>
        <internal_replication>true</internal_replication>
        <replica>
          <host>192.168.6.13</host>
          <port>9000</port>
        </replica>
        <replica>
          <host>192.168.6.6</host>
          <port>9200</port>
        </replica>
      </shard>
    </cluster_slowlog>
  </clickhouse_remote_servers>
  <macros>
    <cluster>cluster_slowlog</cluster>
    <shard>02</shard>
    <replica>click2-shard02-replica02</replica>
  </macros>
  <networks>
    <ip>::/0</ip>
  </networks>
  <clickhouse_compression>
    <case>
      <min_part_size>10000000000</min_part_size>
      <min_part_size_ratio>0.01</min_part_size_ratio>
      <method>lz4</method>
    </case>
  </clickhouse_compression>
</yandex>

3.4 Start on boot

Run on all three machines.

[ root@clickhou001:~ ]# cat /etc/systemd/system/clickhouse-server9000.service
[Unit]
Description=ClickHouse Server (analytic DBMS for big data)
Requires=network-online.target
After=network-online.target

[Service]
Type=simple
User=clickhouse
Group=clickhouse
Restart=always
RestartSec=30
RuntimeDirectory=clickhouse-server
ExecStart=/usr/bin/clickhouse-server --config=/etc/clickhouse-server/config_9000.xml --pid-file=/data/clickhouse/clickhouse_9000/clickhouse-server9000.pid
LimitCORE=infinity
LimitNOFILE=500000
CapabilityBoundingSet=CAP_NET_ADMIN CAP_IPC_LOCK CAP_SYS_NICE

[Install]
WantedBy=multi-user.target

[ root@clickhou001:~ ]# cat /etc/systemd/system/clickhouse-server9200.service
[Unit]
Description=ClickHouse Server (analytic DBMS for big data)
Requires=network-online.target
After=network-online.target

[Service]
Type=simple
User=clickhouse
Group=clickhouse
Restart=always
RestartSec=30
RuntimeDirectory=clickhouse-server
ExecStart=/usr/bin/clickhouse-server --config=/etc/clickhouse-server/config_9200.xml --pid-file=/data/clickhouse/clickhouse_9200/clickhouse-server9200.pid
LimitCORE=infinity
LimitNOFILE=500000
CapabilityBoundingSet=CAP_NET_ADMIN CAP_IPC_LOCK CAP_SYS_NICE

[Install]
WantedBy=multi-user.target
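The two unit files differ only in the port number that appears in the config and pid-file paths, so they can be generated from one template instead of being edited by hand. A minimal sketch under that assumption; render_unit is a hypothetical helper name, and the template simply repeats the settings shown in this section.

```shell
# Hypothetical helper: print a clickhouse-server unit file for the instance on TCP port $1.
render_unit() {
    local port="$1"
    cat <<EOF
[Unit]
Description=ClickHouse Server (analytic DBMS for big data)
Requires=network-online.target
After=network-online.target

[Service]
Type=simple
User=clickhouse
Group=clickhouse
Restart=always
RestartSec=30
RuntimeDirectory=clickhouse-server
ExecStart=/usr/bin/clickhouse-server --config=/etc/clickhouse-server/config_${port}.xml --pid-file=/data/clickhouse/clickhouse_${port}/clickhouse-server${port}.pid
LimitCORE=infinity
LimitNOFILE=500000
CapabilityBoundingSet=CAP_NET_ADMIN CAP_IPC_LOCK CAP_SYS_NICE

[Install]
WantedBy=multi-user.target
EOF
}

# Usage on a real host (as root):
#   for port in 9000 9200; do
#       render_unit "$port" > "/etc/systemd/system/clickhouse-server${port}.service"
#   done
#   systemctl daemon-reload
```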

systemctl restart clickhouse-server9000.service
systemctl enable clickhouse-server9000.service
systemctl status clickhouse-server9000.service
systemctl restart clickhouse-server9200.service
systemctl enable clickhouse-server9200.service
systemctl status clickhouse-server9200.service

[ root@clickhou001:~ ]# systemctl status clickhouse-server9000.service
● clickhouse-server9000.service - ClickHouse Server (analytic DBMS for big data)
   Loaded: loaded (/etc/systemd/system/clickhouse-server9000.service; enabled; vendor preset: disabled)
   Active: active (running) since Tue 2021-11-09 18:23:36 CST; 10 months 28 days ago
 Main PID: 14116 (clckhouse-watch)
   CGroup: /system.slice/clickhouse-server9000.service
           ├─14116 clickhouse-watchdog --config=/etc/clickhouse-server/config_9000.xml --pid-file=/data/clickhouse/clickhouse_9000/clickhouse-server9000.pid
           └─14117 /usr/bin/clickhouse-server --config=/etc/clickhouse-server/config_9000.xml --pid-file=/data/clickhouse/clickhouse_9000/clickhouse-server9000.pid

Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

[ root@clickhou001:~ ]# systemctl status clickhouse-server9200.service
● clickhouse-server9200.service - ClickHouse Server (analytic DBMS for big data)
   Loaded: loaded (/etc/systemd/system/clickhouse-server9200.service; enabled; vendor preset: disabled)
   Active: active (running) since Tue 2021-11-09 18:23:43 CST; 10 months 28 days ago
 Main PID: 14296 (clckhouse-watch)
   CGroup: /system.slice/clickhouse-server9200.service
           ├─14296 clickhouse-watchdog --config=/etc/clickhouse-server/config_9200.xml --pid-file=/data/clickhouse/clickhouse_9200/clickhouse-server9200.pid
           └─14297 /usr/bin/clickhouse-server --config=/etc/clickhouse-server/config_9200.xml --pid-file=/data/clickhouse/clickhouse_9200/clickhouse-server9200.pid

Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

4 Login verification

[ root@clickhou001:~ ]# clickhouse-client --port=9000 --multiline
ClickHouse client version 21.10.2.15 (official build).
Connecting to localhost:9000 as user default.
Connected to ClickHouse server version 21.10.2 revision 54449.

[clickhou001-ops-prod-bj4] {分片1-副本1} {9000} > select cluster,shard_num,replica_num,host_name,host_address,port,is_local,user from system.clusters;

SELECT
    cluster,
    shard_num,
    replica_num,
    host_name,
    host_address,
    port,
    is_local,
    user
FROM system.clusters

Query id: f6555600-bae2-4f90-b41d-474458ac4e66

┌─cluster─────────┬─shard_num─┬─replica_num─┬─host_name────┬─host_address─┬─port─┬─is_local─┬─user────┐
│ cluster_slowlog │         1 │           1 │ 192.168.6.8  │ 192.168.6.8  │ 9000 │        1 │ default │
│ cluster_slowlog │         1 │           2 │ 192.168.6.13 │ 192.168.6.13 │ 9200 │        0 │ default │
│ cluster_slowlog │         2 │           1 │ 192.168.6.6  │ 192.168.6.6  │ 9000 │        0 │ default │
│ cluster_slowlog │         2 │           2 │ 192.168.6.8  │ 192.168.6.8  │ 9200 │        0 │ default │
│ cluster_slowlog │         3 │           1 │ 192.168.6.13 │ 192.168.6.13 │ 9000 │        0 │ default │
│ cluster_slowlog │         3 │           2 │ 192.168.6.6  │ 192.168.6.6  │ 9200 │        0 │ default │
└─────────────────┴───────────┴─────────────┴──────────────┴──────────────┴──────┴──────────┴─────────┘

6 rows in set. Elapsed: 0.002 sec.
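The same check can be scripted rather than read off the table by eye. The sketch below counts replicas per shard from tab-separated rows, which is what clickhouse-client prints by default when run non-interactively with --query; check_replicas is a hypothetical helper name, and the expected count of 2 comes from this article's layout.

```shell
# Hypothetical helper: read TabSeparated rows "shard_num<TAB>replica_num<TAB>..." on stdin
# and exit non-zero if any shard does not have exactly $1 replicas.
check_replicas() {
    local expected="$1"
    awk -F'\t' -v want="$expected" '
        { n[$1]++ }                      # count rows per shard_num
        END {
            bad = 0
            for (s in n) {
                if (n[s] != want) {
                    printf "shard %s has %d replicas\n", s, n[s]
                    bad = 1
                }
            }
            exit bad
        }'
}

# Usage on a real host:
#   clickhouse-client --port=9000 --query="SELECT shard_num, replica_num, host_address, port FROM system.clusters WHERE cluster = 'cluster_slowlog'" | check_replicas 2
```

Note that empty input passes the check, so a query that returns no rows for the cluster name should be treated as a failure separately.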

5 Monitoring deployment

The clickhouse_exporter binary used here was recompiled.

[ root@clickhou001:/data/app ]# nohup ./clickhouse_exporter -scrape_uri=http://192.168.6.8:8213/ -log.level=info >> /dev/null 2>&1 &

Last modified: 2022-10-17 11:14:05


Copyright notice: this article is original content by a Modb (墨天轮) user. Reprints must credit the source (Modb), the article link, and the author.