大家好, 这次大表哥分享的是 mysql mgr 高可用故障自动识别方案 proxy sql.

首先,我们要清楚 原生的 mysql mgr 是 不提供故障自动识别方案的, 市面上taf的m6米乐安卓版下载的解决方案大致分为如下:

1.官方的mysql router 的组件: 可以参考大表哥之前的文章 https://www.modb.pro/db/399249

2.开源的路由组件, 像是 proxy sql

3.开发自研的自动探测的脚本,可以集成在应用连接池的代码里面,实现vip的漂移或者是dns的域名解析切换。

今天我们要分享的是 用开源的组件 proxy sql 实现 mgr 的自动故障转移。

个人认为 proxy sql 是一款十分轻量级的,可以实现快速部署的轻量级的路由组件。 我们先看一下 proxy sql 的官方文档和下载地址:

https://proxysql.com/

proxy sql 下载

根据自己的os的版本选择相应的rpm包下载:

https://github.com/sysown/proxysql/releases

安装:

infra [mysql@wqdcsrv3353 ~]# sudo rpm -ivh proxysql2-2.0.14-1.1.el7.x86_64.rpm

warning:proxysql2-2.0.14-1.1.el7.x86_64.rpm: header v4 dsa/sha1 signature, key id cd2efd2a: nokey

preparing... ################################# [100%]

updating / installing...

1:proxysql2-2.0.14-1.1.el7.x86_64 ################################# [100%]

uat mysql@wqdcsrv3352[09:53:17]:/data/software $ which proxysql

/bin/proxysql

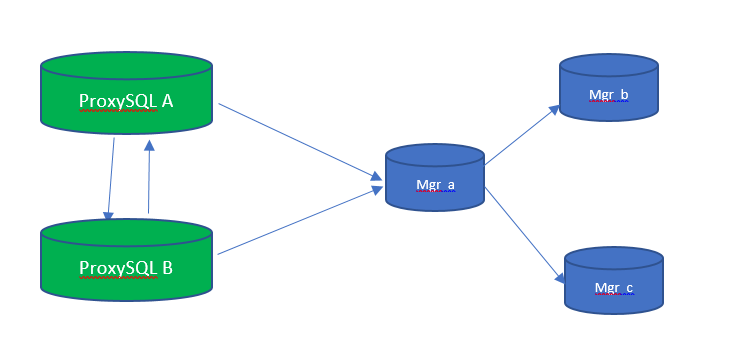

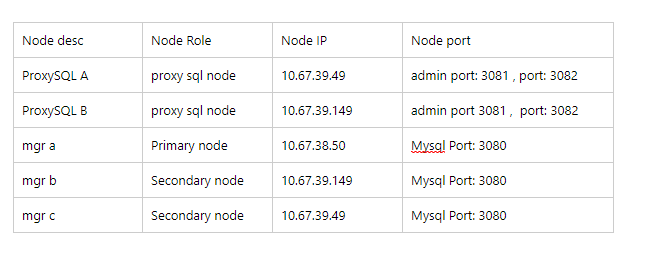

我们接下来要搭建一个 proxy 的集群, 这个集群是为 mysql mgr 提供了路由的服务。 示例图如下:

对应的测试机器如下:

这里注意的是 proxy 的集群是去中心化的设计,没有主从角色状态的概念。

我们先预先准备好一个mgr的mysql 集群:

mysql> select * from replication_group_members;

--------------------------- -------------------------------------- -------------- ------------- -------------- ------------- ---------------- ----------------------------

| channel_name | member_id | member_host | member_port | member_state | member_role | member_version | member_communication_stack |

--------------------------- -------------------------------------- -------------- ------------- -------------- ------------- ---------------- ----------------------------

| group_replication_applier | 16d296b7-83d1-11ec-8d13-005056aefbb6 | 10.67.39.149 | 3080 | online | secondary | 8.0.27 | xcom |

| group_replication_applier | 30af63d9-7db1-11ec-9d58-f403439dfd00 | 10.67.38.50 | 3080 | online | primary | 8.0.27 | xcom |

| group_replication_applier | 89866a9b-7db1-11ec-9d58-f403439dfd00 | 10.67.39.49 | 3080 | online | secondary | 8.0.27 | xcom |

--------------------------- -------------------------------------- -------------- ------------- -------------- ------------- ---------------- ----------------------------

3 rows in set (0.01 sec)

我们需要在mgr中建立 proxy 的心跳探测账户:

create user proxy_monitor@'10.%' identified by '***********' ;

grant replication client on *.* to `proxy_monitor`@`10.%`;

grant select on `sys`.* to `proxy_monitor`@`10.%`

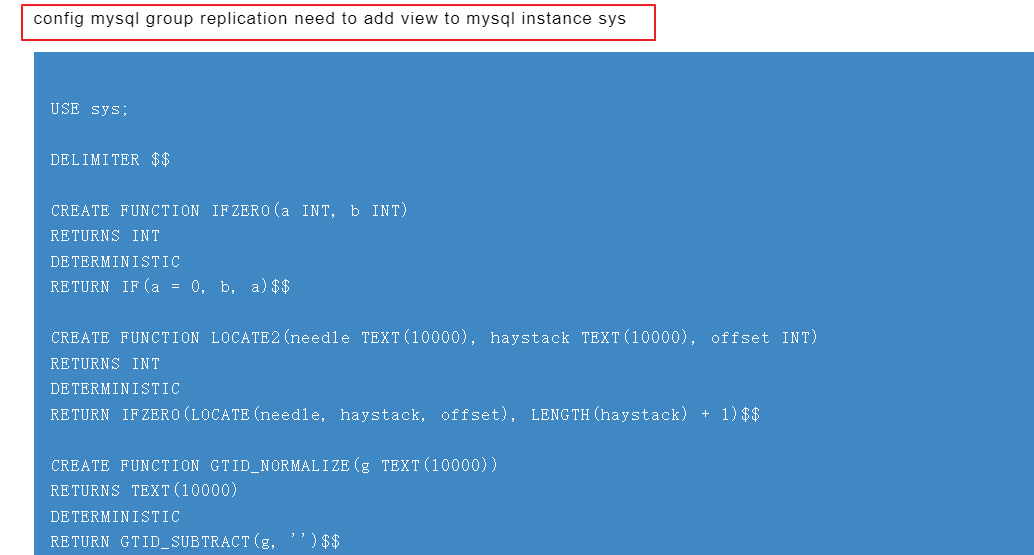

另外proxy sql 对 mysql mgr 的支持需要创建一些试图和函数: 这个脚本可以从官方网上拿到:

https://proxysql.com/documentation/main-runtime/#mysql_group_replication_hostgroups

use sys;

delimiter $$

create function ifzero(a int, b int)

returns int

deterministic

return if(a = 0, b, a)$$

create function locate2(needle text(10000), haystack text(10000), offset int)

returns int

deterministic

return ifzero(locate(needle, haystack, offset), length(haystack) 1)$$

create function gtid_normalize(g text(10000))

returns text(10000)

deterministic

return gtid_subtract(g, '')$$

create function gtid_count(gtid_set text(10000))

returns int

deterministic

begin

declare result bigint default 0;

declare colon_pos int;

declare next_dash_pos int;

declare next_colon_pos int;

declare next_comma_pos int;

set gtid_set = gtid_normalize(gtid_set);

set colon_pos = locate2(':', gtid_set, 1);

while colon_pos != length(gtid_set) 1 do

set next_dash_pos = locate2('-', gtid_set, colon_pos 1);

set next_colon_pos = locate2(':', gtid_set, colon_pos 1);

set next_comma_pos = locate2(',', gtid_set, colon_pos 1);

if next_dash_pos < next_colon_pos and next_dash_pos < next_comma_pos then

set result = result

substr(gtid_set, next_dash_pos 1,

least(next_colon_pos, next_comma_pos) - (next_dash_pos 1)) -

substr(gtid_set, colon_pos 1, next_dash_pos - (colon_pos 1)) 1;

else

set result = result 1;

end if;

set colon_pos = next_colon_pos;

end while;

return result;

end$$

create function gr_applier_queue_length()

returns int

deterministic

begin

return (select sys.gtid_count( gtid_subtract( (select

received_transaction_set from performance_schema.replication_connection_status

where channel_name = 'group_replication_applier' ), (select

@@global.gtid_executed) )));

end$$

create function gr_member_in_primary_partition()

returns varchar(3)

deterministic

begin

return (select if( member_state='online' and ((select count(*) from

performance_schema.replication_group_members where member_state != 'online') >=

((select count(*) from performance_schema.replication_group_members)/2) = 0),

'yes', 'no' ) from performance_schema.replication_group_members join

performance_schema.replication_group_member_stats using(member_id));

end$$

create view gr_member_routing_candidate_status as select

sys.gr_member_in_primary_partition() as viable_candidate,

if( (select (select group_concat(variable_value) from

performance_schema.global_variables where variable_name in ('read_only',

'super_read_only')) != 'off,off'), 'yes', 'no') as read_only,

sys.gr_applier_queue_length() as transactions_behind, count_transactions_in_queue as 'transactions_to_cert' from performance_schema.replication_group_member_stats;$$

delimiter ;

执行完脚本之后,我们可以验证一下创建的对象:

mysql> use sys;

reading table information for completion of table and column names

you can turn off this feature to get a quicker startup with -a

database changed

mysql> select gr_member_in_primary_partition();

----------------------------------

| gr_member_in_primary_partition() |

----------------------------------

| yes |

----------------------------------

1 row in set (0.00 sec)

mysql> select * from gr_member_routing_candidate_status;

------------------ ----------- --------------------- ----------------------

| viable_candidate | read_only | transactions_behind | transactions_to_cert |

------------------ ----------- --------------------- ----------------------

| yes | no | 0 | 0 |

------------------ ----------- --------------------- ----------------------

1 row in set (0.01 sec)

下面我们搭建 proxy sql 的 集群:

1)配置文件参数

完整的参数文件配置: 每个 proxy sql 的节点的配置文件是一致的, 对于proxy sql 的集群来说,没有主从的角色之分

datadir="/data/mysql3080/proxysql" admin_variables= { admin_credentials="admin:admin;proxy_cluster:*****" mysql_ifaces="0.0.0.0:3081" cluster_username="proxy_cluster" cluster_password="******" cluster_check_interval_ms=200 cluster_check_status_frequency=100 cluster_mysql_query_rules_save_to_disk=true cluster_mysql_servers_save_to_disk=true cluster_mysql_users_save_to_disk=true cluster_proxysql_servers_save_to_disk=true cluster_mysql_query_rules_diffs_before_sync=10 cluster_mysql_servers_diffs_before_sync=10 cluster_mysql_users_diffs_before_sync=10 cluster_proxysql_servers_diffs_before_sync=10 } proxysql_servers = ({hostname="10.67.38.50",port=3081,weight=0},{hostname="10.67.39.49",port=3081,weight=0}) mysql_variables= { threads=8 max_connections=5000 default_query_delay=0 have_compress=true poll_timeout=2000 interfaces="0.0.0.0:3082" default_schema="information_schema" stacksize=1048576 server_version="8.0.20" default_query_timeout=7200000 connect_timeout_server=3000 monitor_username="proxy_monitor" monitor_password="admin123" monitor_history=600000 monitor_connect_interval=2000 monitor_ping_interval=2000 monitor_read_only_interval=2000 monitor_read_only_timeout=500 ping_interval_server_msec=120000 ping_timeout_server=500 commands_stats=true sessions_sort=true connect_retries_on_failure=10 default_charset="utf8mb4" threshold_query_length=524288 threshold_resultset_size=524288 max_allowed_packet=67108864 max_transaction_time=14400000 monitor_replication_lag_interval=10000 eventslog_filename="/data/mysql3080/log/proxysql.log" eventslog_format=2 auditlog_filename="/data/mysql3080/log/audit.log" } mysql_servers = ({address="10.67.38.50",port=3080,hostgroup=20, max_connections=5000,max_replication_lag=2000},{address="10.67.39.49",port=3080,hostgroup=40, max_connections=5000,max_replication_lag=2000},{address="10.67.39.149",port=3080,hostgroup=40, max_connections=5000,max_replication_lag=2000}) mysql_users: ( {username = "db_monitor",password = "admin123",default_hostgroup = 20,max_connections=50,default_schema="information_schema",active = 1}) mysql_query_rules: ({rule_id=1,active=1,match_digest=".",apply=0,log=1} ) scheduler= ( ) mysql_replication_hostgroups= ( ) mysql_group_replication_hostgroups: ( {writer_hostgroup=20, backup_writer_hostgroup=30, reader_hostgroup=40, offline_hostgroup=10,active=1,max_writers=1,writer_is_also_reader=0,max_transactions_behind=4000} )

配置文件中重要的参数含义如下:

a)proxy sql 集群的配置

admin_credentials="admin:admin;proxy_cluster:*****" ### 1)amdin/admin 是proxy sql 后台管理员的账户和密码, 2)proxy sql 集群是通过 proxy_cluster这个账户来实现配置文件的定期同步的

mysql_ifaces="0.0.0.0:3081" --proxy sql 管理员后台登录的端口

cluster_username="proxy_cluster" --proxy sql 集群的账户

cluster_password="*****" --proxy sql 集群的密码

--下面是proxy sql 集群同步检查相关的一些参数

cluster_check_interval_ms=200

cluster_check_status_frequency=100

cluster_mysql_query_rules_save_to_disk=true

cluster_mysql_servers_save_to_disk=true

cluster_mysql_users_save_to_disk=true

cluster_proxysql_servers_save_to_disk=true

cluster_mysql_query_rules_diffs_before_sync=10

cluster_mysql_servers_diffs_before_sync=10

cluster_mysql_users_diffs_before_sync=10

cluster_proxysql_servers_diffs_before_sync=10

--proxy 集群成员的配置

proxysql_servers =

({hostname="10.67.38.50",port=3081,weight=0},{hostname="10.67.39.49",port=3081,weight=0})

b)mysql server 的配置

mysql_variables=

{

max_connections=5000 --最大连接数

interfaces="0.0.0.0:3082" -- 对应用系统暴露的端口

monitor_username="proxy_monitor" -- 连接mysql mgr 集群的探测心跳账号

monitor_password="********"

...

...

}

-- mgr 集群的信息,其中 hostgroup = 20 是主库, hostgroup = 40 表示从库

mysql_servers =

({address="10.67.38.50",port=3080,hostgroup=20, max_connections=5000,max_replication_lag=2000},

{address="10.67.39.49",port=3080,hostgroup=40, max_connections=5000,max_replication_lag=2000},

{address="10.67.39.149",port=3080,hostgroup=40, max_connections=5000,max_replication_lag=2000}

)

-- 定义 mgr 的 hostgroup: 20:写组, 30:备份写组, 40:读组,10:离线组

mysql_group_replication_hostgroups:

(

{writer_hostgroup=20,

backup_writer_hostgroup=30,

reader_hostgroup=40,

offline_hostgroup=10,active=1,max_writers=1,writer_is_also_reader=0,max_transactions_behind=4000}

)

c)其他的一些基本的配置

datadir="/data/mysql3080/proxysql" -- proxy sql 的数据目录,首次启动的时候,会在这个目录下生成相应的数据文件,日志文件

uat mysql@wqdcsrv3352[13:52:26]:/data/mysql3080/proxysql $ ls -lhtr

total 392k

-rw------- 1 mysql mysql 1.7k jun 6 13:39 proxysql-key.pem

-rw------- 1 mysql mysql 1.1k jun 6 13:39 proxysql-ca.pem

-rw------- 1 mysql mysql 1.1k jun 6 13:39 proxysql-cert.pem

-rw-r--r-- 1 mysql mysql 7 jun 6 13:39 proxysql.pid

-rw------- 1 mysql mysql 192k jun 6 13:39 proxysql.db

-rw------- 1 mysql mysql 19k jun 6 13:49 proxysql.log

-rwxr-xr-x 1 mysql mysql 2.6k jun 6 13:52 proxy_mysql3080.cnf

-rw------- 1 mysql mysql 160k jun 6 14:21 proxysql_stats.db

2)创建proxy sql 的路径并启动 (2个ha的节点都需要执行同样的命令)

mkdir -p /data/mysql3080/proxysql

启动的时候需要制定一下: proxy sql的data 目录以及配置文件

/usr/bin/proxysql -c /data/mysql3080/proxysql/proxy_mysql3080.cnf -d /data/mysql3080/proxysql

3)登录并验证proxy sql 的集群 默认的管理员账户是 admin/admin, 管理员的端口是 3081

uat mysql@wqdcsrv3353[14:40:47]:/data/mysql3080/proxysql $ /opt/mysql/product/percona8.0/bin/mysql -h 127.0.0.1 -p 3081 -u admin -padmin

查看2个 proxy sql 集群的信息:

mysql> select * from proxysql_servers;

------------- ------ -------- ---------

| hostname | port | weight | comment |

------------- ------ -------- ---------

| 10.67.38.50 | 3081 | 0 | |

| 10.67.39.49 | 3081 | 0 | |

------------- ------ -------- ---------

2 rows in set (0.00 sec)

查看 mysql mgr 的server 信息 :

mysql> select * from mysql_servers;

-------------- -------------- ------ ----------- -------- -------- ------------- ----------------- --------------------- --------- ---------------- ---------

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

-------------- -------------- ------ ----------- -------- -------- ------------- ----------------- --------------------- --------- ---------------- ---------

| 20 | 10.67.38.50 | 3080 | 0 | online | 1 | 0 | 5000 | 2000 | 0 | 0 | |

| 40 | 10.67.39.49 | 3080 | 0 | online | 1 | 0 | 5000 | 2000 | 0 | 0 | |

| 40 | 10.67.39.149 | 3080 | 0 | online | 1 | 0 | 5000 | 2000 | 0 | 0 | |

-------------- -------------- ------ ----------- -------- -------- ------------- ----------------- --------------------- --------- ---------------- ---------

3 rows in set (0.00 sec)

如果proxy sql 启动失败, 请查看集群的日志信息:

uat mysql@wqdcsrv3352[14:47:42]:/data/mysql3080/proxysql $ view proxysql.log

如果有proxy sql 节点挂掉或者网络问题的话,会报错:

2022-06-06 14:50:58 proxysql_cluster.cpp:217:proxysql_cluster_monitor_thread(): [warning] cluster: unable to connect to peer 10.67.39.49:3081 . error: can't connect to mysql server on '10.67.39.49' (115)

2022-06-06 14:50:58 proxysql_cluster.cpp:217:proxysql_cluster_monitor_thread(): [warning] cluster: unable to connect to peer 10.67.39.49:3081 . error: can't connect to mysql server on '10.67.39.49' (115)

接下来,我们用 java (spring boot) 写一个简单的小代码片段,模拟一直在读写数据库:

在数据库的读写过程中,我们模拟2个的故障自动转移的场景:

1)mgr 主节点down掉

2)proxy sql 集群挂掉一个节点

我们来准备一下,我们的小程序片段:

首先我们需要同时在 proxy sql 和 mysql 中创建我们的账户:

登录 mysql mgr 的主节点 :

创建数据库 :testdb

用户名/密码: app_test/app_test

mysql> create database testdb;

query ok, 1 row affected (0.01 sec)

mysql> create user app_test@'10.%' identified with mysql_native_password by 'app_test';

query ok, 0 rows affected (0.01 sec)

mysql> grant all on testdb.* to app_test@'10.%';

query ok, 0 rows affected (0.01 sec)

-- 这个加密的密码字符串是给下面 proxy sql 手动插入记录时 准备的值

mysql> select authentication_string from mysql.user where user = 'app_test';

-------------------------------------------

| authentication_string |

-------------------------------------------

| *8832ab64049091e6c9a1b921e55cc2a8faf2e31c |

-------------------------------------------

1 row in set (0.00 sec)

登录proxy sql 客户端, 创建对应的 proxy sql的 user :

mysql> insert into mysql_users (username,password,default_schema,default_hostgroup,max_connections) values ('app_test','*8832ab64049091e6c9a1b921e55cc2a8faf2e31c','testdb',20,200)

-> ;

query ok, 1 row affected (0.00 sec)

mysql> load mysql users to runtime;

query ok, 0 rows affected (0.00 sec)

mysql> save mysql users to disk;

query ok, 0 rows affected (0.01 sec)

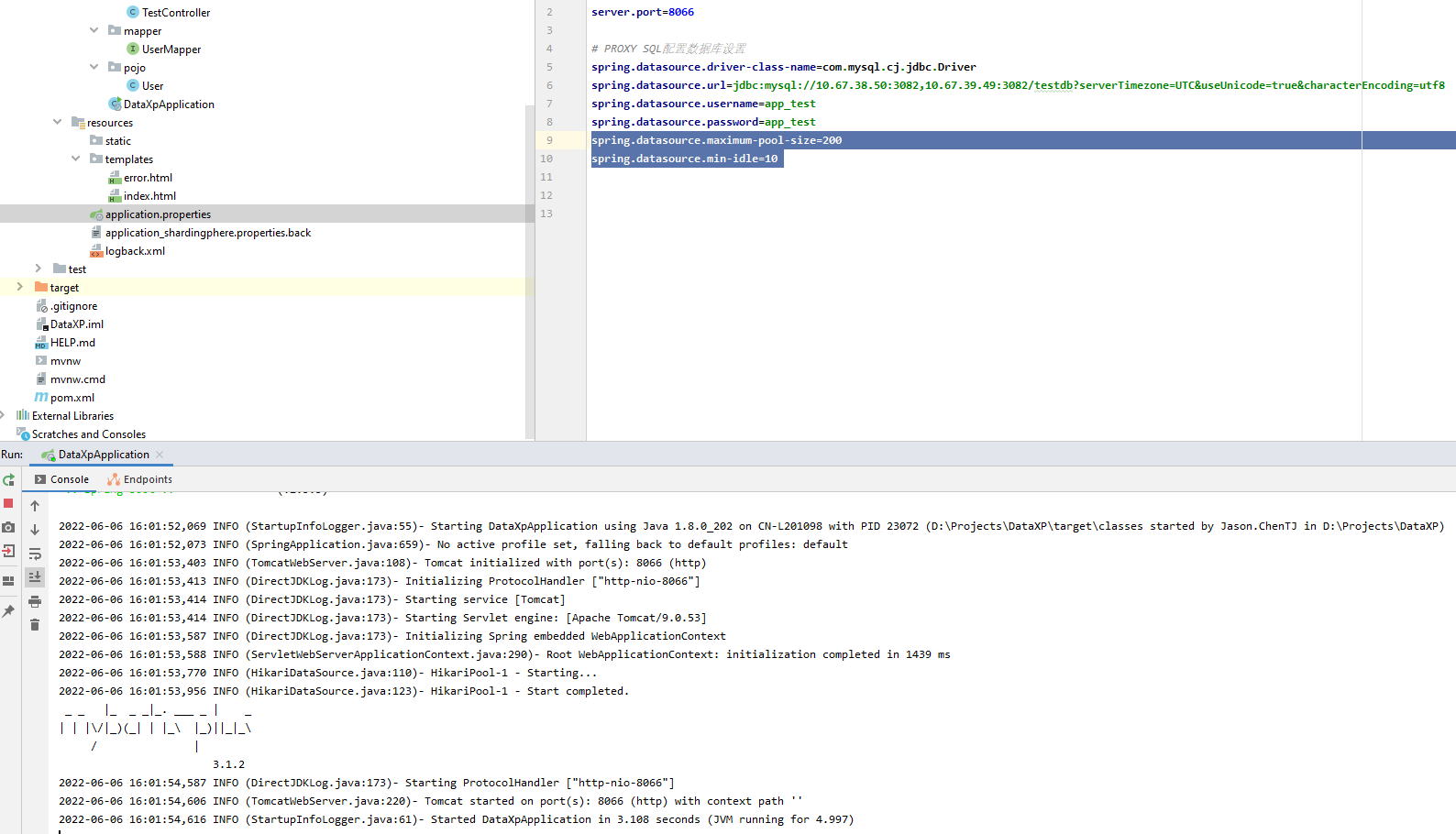

接下来,我们需要在 application.properties 配置数据库的连接信息:

注意这里我们要写的是 proxy sql 的连接地址:

spring.datasource.driver-class-name=com.mysql.cj.jdbc.driver

spring.datasource.url=jdbc:mysql://10.67.38.50:3082,10.67.39.49:3082/testdb?servertimezone=utc&useunicode=true&characterencoding=utf8

spring.datasource.username=app_test

spring.datasource.password=app_test

spring.datasource.maximum-pool-size=200 -- 设置最大连接200

spring.datasource.min-idle=10 -- 最小空闲连接

我们启动 springboot 的程序之后:

由于我们设置了数据库连接池的最小连接数是 10, 我们可以观察到 mysql mgr 的主节点 和 proxy sql 中间件中均建立了10个连接:

proxy sql:

mysql> show processlist;

----------- ---------- -------- ----------- --------- --------- ------

| sessionid | user | db | hostgroup | command | time_ms | info |

----------- ---------- -------- ----------- --------- --------- ------

| 5 | app_test | testdb | 20 | sleep | 1228988 | null |

| 6 | app_test | testdb | 20 | sleep | 1229159 | null |

| 7 | app_test | testdb | 20 | sleep | 1229090 | null |

| 8 | app_test | testdb | 20 | sleep | 1229068 | null |

| 9 | app_test | testdb | 20 | sleep | 1229098 | null |

| 10 | app_test | testdb | 20 | sleep | 1229073 | null |

| 11 | app_test | testdb | 20 | sleep | 1229004 | null |

| 12 | app_test | testdb | 20 | sleep | 1228984 | null |

| 13 | app_test | testdb | 20 | sleep | 1229013 | null |

| 14 | app_test | testdb | 20 | sleep | 1228997 | null |

----------- ---------- -------- ----------- --------- --------- ------

10 rows in set (0.01 sec)

mysql mgr的主节点:

mysql> select * from processlist where user = 'app_test';

------ ---------- ------------------- -------- --------- ------ ------- ------ --------- ----------- ---------------

| id | user | host | db | command | time | state | info | time_ms | rows_sent | rows_examined |

------ ---------- ------------------- -------- --------- ------ ------- ------ --------- ----------- ---------------

| 7832 | app_test | 10.67.38.50:43890 | testdb | sleep | 1617 | | null | 1616551 | 1 | 1 |

| 7833 | app_test | 10.67.38.50:43892 | testdb | sleep | 1617 | | null | 1616530 | 1 | 1 |

| 7825 | app_test | 10.67.38.50:43874 | testdb | sleep | 1617 | | null | 1616804 | 1 | 1 |

| 7834 | app_test | 10.67.38.50:43894 | testdb | sleep | 1617 | | null | 1616511 | 1 | 1 |

| 7826 | app_test | 10.67.38.50:43876 | testdb | sleep | 1617 | | null | 1616676 | 1 | 1 |

| 7827 | app_test | 10.67.38.50:43878 | testdb | sleep | 1617 | | null | 1616656 | 1 | 1 |

| 7828 | app_test | 10.67.38.50:43880 | testdb | sleep | 1617 | | null | 1616635 | 1 | 1 |

| 7829 | app_test | 10.67.38.50:43882 | testdb | sleep | 1617 | | null | 1616615 | 1 | 1 |

| 7830 | app_test | 10.67.38.50:43886 | testdb | sleep | 1617 | | null | 1616591 | 1 | 1 |

| 7831 | app_test | 10.67.38.50:43888 | testdb | sleep | 1617 | | null | 1616570 | 1 | 1 |

------ ---------- ------------------- -------- --------- ------ ------- ------ --------- ----------- ---------------

10 rows in set (0.00 sec)

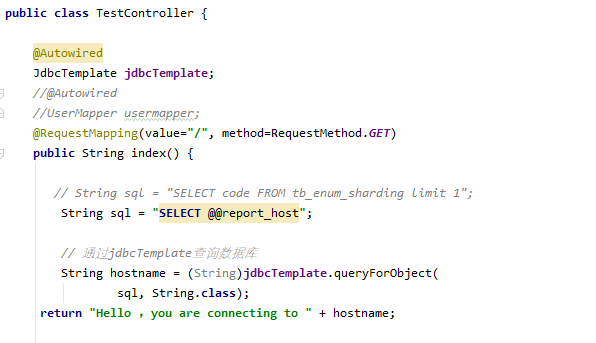

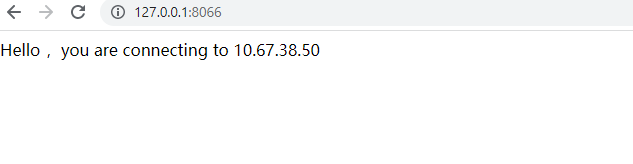

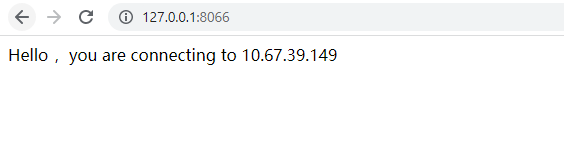

我们写几行简单的代码,输出一下 mysql 的 report_host 变量,看一下 当前连接的mysql 的实例的 ip 地址:

浏览器的地址是输入测试 : http://127.0.0.1:8066/

我们模拟一下主库 10.67.38.50 down 机, 我们去shutdown 一下 mysql :

mysql> shutdown; query ok, 0 rows affected (0.00 sec)

我们再次刷新浏览器的地址: http://127.0.0.1:8066/

这个时候,我们观察应用的日志,会有连接失效的警告:正好是连接池里面10个失效的连接,符合我们的预期

2022-06-07 09:41:37,691 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@3a9211b1 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,713 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@72ab37ad (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,731 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@e952d6f (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,750 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@1ca54772 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,768 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@f5b9fe6 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,788 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@4c444391 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,808 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@436dde80 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,827 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@37064435 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,846 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@5c50386a (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:41:37,864 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@6ff2e9f (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

我们登陆数据库查看: 果然 10.67.39.149 是现在的主库。

mysql> select * from replication_group_members;

--------------------------- -------------------------------------- -------------- ------------- -------------- ------------- ---------------- ----------------------------

| channel_name | member_id | member_host | member_port | member_state | member_role | member_version | member_communication_stack |

--------------------------- -------------------------------------- -------------- ------------- -------------- ------------- ---------------- ----------------------------

| group_replication_applier | 16d296b7-83d1-11ec-8d13-005056aefbb6 | 10.67.39.149 | 3080 | online | primary | 8.0.27 | xcom |

| group_replication_applier | 89866a9b-7db1-11ec-9d58-f403439dfd00 | 10.67.39.49 | 3080 | online | secondary | 8.0.27 | xcom |

--------------------------- -------------------------------------- -------------- ------------- -------------- ------------- ---------------- ----------------------------

2 rows in set (0.00 sec)

接下来我们在测试一下 proxy sql 的ha, 我们需要手动关闭 proxy sql 的节点 :

spring.datasource.url=jdbc:mysql://10.67.38.50:3082,10.67.39.49:3082/testdb?servertimezone=utc&useunicode=true&characterencoding=utf8

我们来关闭一下连接串中 第一个 proxysql 的节点: 10.67.38.50

uat mysql@wqdcsrv3352[09:51:19]:~ $ ps -ef|grep proxysql | grep 3080

mysql 113953 1 0 jun06 ? 00:00:00 /usr/bin/proxysql -c /data/mysql3080/proxysql/proxy_mysql3080.cnf -d /data/mysql3080/proxysql

mysql 113954 113953 0 jun06 ? 00:09:42 /usr/bin/proxysql -c /data/mysql3080/proxysql/proxy_mysql3080.cnf -d /data/mysql3080/proxysql

uat mysql@wqdcsrv3352[09:51:27]:~ $ kill 113954 113953

uat mysql@wqdcsrv3352[09:51:35]:~ $ ps -ef|grep proxysql | grep 3080

我们尝试刷新一下浏览器: http://127.0.0.1:8066/ 依然可以访问数据库。

这个时候,我们再次观察应用的日志,又会有连接失效的警告:正好是连接池里面10个失效的连接,再次符合我们的预期

2022-06-07 09:54:04,845 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@39d142aa (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,867 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@7aa9eace (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,886 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@18c3b7de (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,904 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@1576383b (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,921 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@1c8dcba4 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,940 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@3822d597 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,960 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@167b150d (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,978 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@6cb3d640 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:04,995 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@2904b822 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

2022-06-07 09:54:05,012 warn (poolbase.java:184)- hikaripool-1 - failed to validate connection com.mysql.cj.jdbc.ha.multihostmysqlconnection@d07555 (no operations allowed after connection closed.). possibly consider using a shorter maxlifetime value.

我们查询一下现在存活的proxy sql 的节点上的连接数:符合我们的预期,连接已经漂移到了存活的 proxy sql 的节点

mysql> show processlist;

----------- ---------- -------- ----------- --------- --------- ------

| sessionid | user | db | hostgroup | command | time_ms | info |

----------- ---------- -------- ----------- --------- --------- ------

| 27 | app_test | testdb | 20 | sleep | 182321 | null |

| 28 | app_test | testdb | 20 | sleep | 180263 | null |

| 29 | app_test | testdb | 20 | sleep | 178193 | null |

| 30 | app_test | testdb | 20 | sleep | 176140 | null |

| 31 | app_test | testdb | 20 | sleep | 174718 | null |

| 32 | app_test | testdb | 20 | sleep | 172338 | null |

| 33 | app_test | testdb | 20 | sleep | 170321 | null |

| 34 | app_test | testdb | 20 | sleep | 168121 | null |

| 35 | app_test | testdb | 20 | sleep | 166129 | null |

| 36 | app_test | testdb | 20 | sleep | 164108 | null |

----------- ---------- -------- ----------- --------- --------- ------

10 rows in set (0.00 sec)

我们总结一下,我们完成了

1) proxy sql 集群的搭建

2) ha 故障自动转移之 mgr 主节点 down 机

3) ha 故障自动转移之 proxy sql 节点 down 机

最后我想说的是,一定要结合自己公司的实际应用系统来测试 mysql ha的自动故障转移。 是 java 还是 python, 还是go?

java 的话, 用的是什么持久层的协议框架?用的什么连接池(c3po,hikari)?

作为dba必须要了解你的系统的持久层框架和连接池的工作机制。