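The company is currently migrating away from Oracle. To keep production operations safe, we built a multi-node RAC cluster that mirrors the production environment. This is the second article in the "learn it in a minute, forget it the next" series. Removing a node from a 10g RAC differs slightly from the 11g procedure, so the third article in the series will cover node deletion on a 10g cluster.

The test environment for this walkthrough is a three-node Oracle 11g RAC cluster, and the plan is to simulate taking one of its nodes out of service.

1.1 Current cluster node information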

| Hostname | Public IP | Virtual IP | SCAN IP | Private IP | OS version | Database version |
|---|---|---|---|---|---|---|
| host210 | 192.168.17.136 | 192.168.17.103 | 192.168.17.108 | 10.90.1.36 | CentOS release 6.10 (Final) | 11.2.0.4 |
| host211 | 192.168.17.137 | 192.168.17.104 | 192.168.17.108 | 10.90.1.37 | CentOS release 6.10 (Final) | 11.2.0.4 |
| host212 | 192.168.17.139 | 192.168.17.105 | 192.168.17.108 | 10.90.1.38 | CentOS release 6.10 (Final) | 11.2.0.4 |

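The plan is to take host212 out of the cluster, leaving a two-node RAC cluster.

2.1 Check the CSS service

Check that the CSS service on the current cluster nodes is in a normal state.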

--- As the grid user, on any node
[root@host210 ~]# su - grid
[grid@host210 ~]$ olsnodes -s -t
host210 Active Unpinned
host211 Active Unpinned
host212 Active Unpinned
## Unpinned is the state expected here.
## A node that shows Pinned has to be unpinned before it can be deleted. Run as root:
## [root@hostxxx ~]# crsctl unpin css -n hostname   (hostname is the pinned node)
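The pinned check can also be scripted. The sketch below is an illustration, not part of the original procedure: `pinned_nodes` is a hypothetical helper that reads `olsnodes -s -t` output on standard input and prints every node whose third column is `Pinned`, assuming the three-column layout shown above.

```shell
#!/bin/sh
# pinned_nodes: hypothetical helper, not an Oracle tool.
# stdin : output of `olsnodes -s -t` (columns: node, status, pin state)
# stdout: names of nodes reported as pinned, one per line
pinned_nodes() {
  awk 'tolower($3) == "pinned" { print $1 }'
}

# Intended use on a cluster node (not executed here):
#   olsnodes -s -t | pinned_nodes
```

Empty output means no node needs `crsctl unpin css` before the deletion starts.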

2.2 Check cluster and database status

The cluster status is as follows:

[grid@host210 ~]$ crs_stat -t
Name           Type           Target    State     Host
------------------------------------------------------------
ora.CRS.dg     ora....up.type ONLINE    ONLINE    host210
ora.DATA.dg    ora....up.type ONLINE    ONLINE    host210
ora....ER.lsnr ora....er.type ONLINE    ONLINE    host210
ora....N1.lsnr ora....er.type ONLINE    ONLINE    host210
ora.asm        ora.asm.type   ONLINE    ONLINE    host210
ora.cvu        ora.cvu.type   ONLINE    ONLINE    host210
ora.gsd        ora.gsd.type   OFFLINE   OFFLINE
ora....SM1.asm application    ONLINE    ONLINE    host210
ora....10.lsnr application    ONLINE    ONLINE    host210
ora....210.gsd application    OFFLINE   OFFLINE
ora....210.ons application    ONLINE    ONLINE    host210
ora....210.vip ora....t1.type ONLINE    ONLINE    host210
ora....SM2.asm application    ONLINE    ONLINE    host211
ora....11.lsnr application    ONLINE    ONLINE    host211
ora....211.gsd application    OFFLINE   OFFLINE
ora....211.ons application    ONLINE    ONLINE    host211
ora....211.vip ora....t1.type ONLINE    ONLINE    host211
ora....SM3.asm application    ONLINE    ONLINE    host212
ora....12.lsnr application    ONLINE    ONLINE    host212
ora....212.gsd application    OFFLINE   OFFLINE
ora....212.ons application    ONLINE    ONLINE    host212
ora....212.vip ora....t1.type ONLINE    ONLINE    host212
ora....network ora....rk.type ONLINE    ONLINE    host210
ora.oc4j       ora.oc4j.type  ONLINE    ONLINE    host210
ora.ons        ora.ons.type   ONLINE    ONLINE    host210
ora.oradb.db   ora....se.type ONLINE    ONLINE    host210
ora.scan1.vip  ora....ip.type ONLINE    ONLINE    host210

The database status is as follows:

[root@host210 ~]# su - oracle
[oracle@host210 ~]$ sqlplus / as sysdba
SQL*Plus: Release 11.2.0.4.0 Production on Thu Sep 21 11:05:38 2023
Copyright (c) 1982, 2013, Oracle.  All rights reserved.
Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,
Data Mining and Real Application Testing options
SQL> set long 99999 head off pages 0 lines 1000
SQL> select inst_id,instance_number,instance_name,host_name,status,thread# from gv$instance;
1 1 oradb1 host210 OPEN 1
3 3 oradb3 host212 OPEN 3
2 2 oradb2 host211 OPEN 2

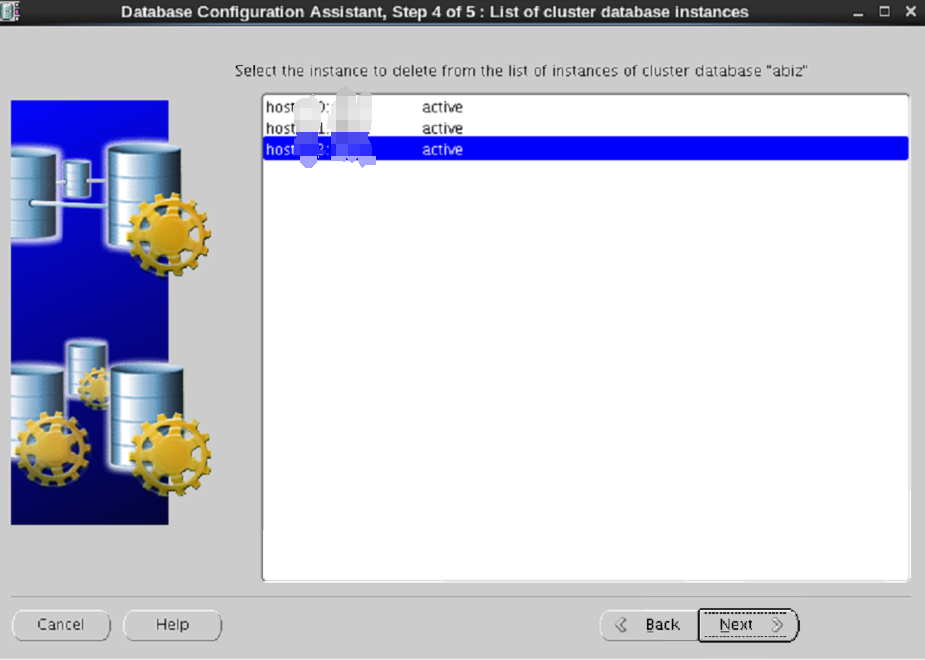

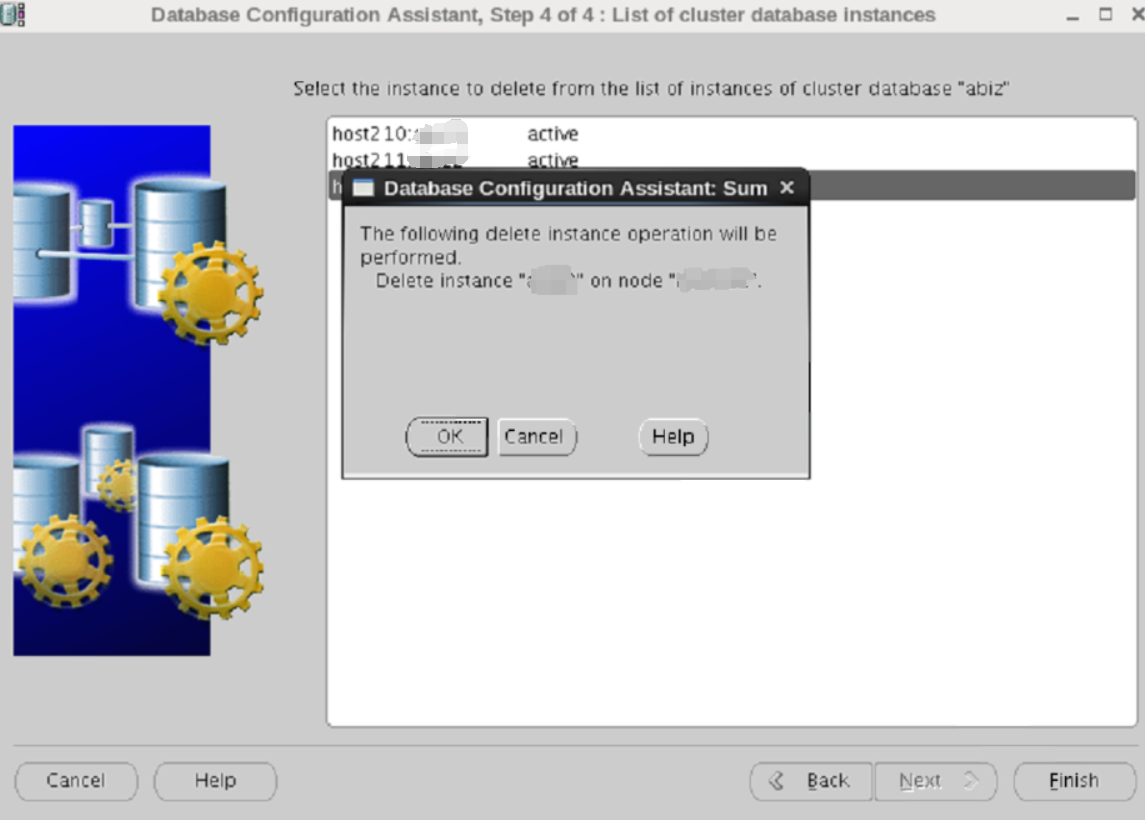

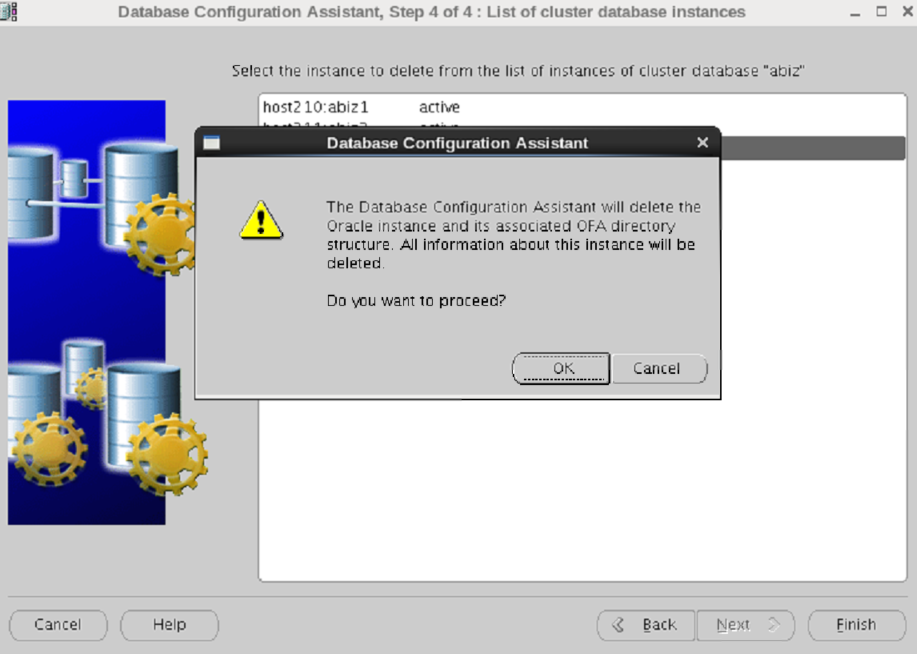

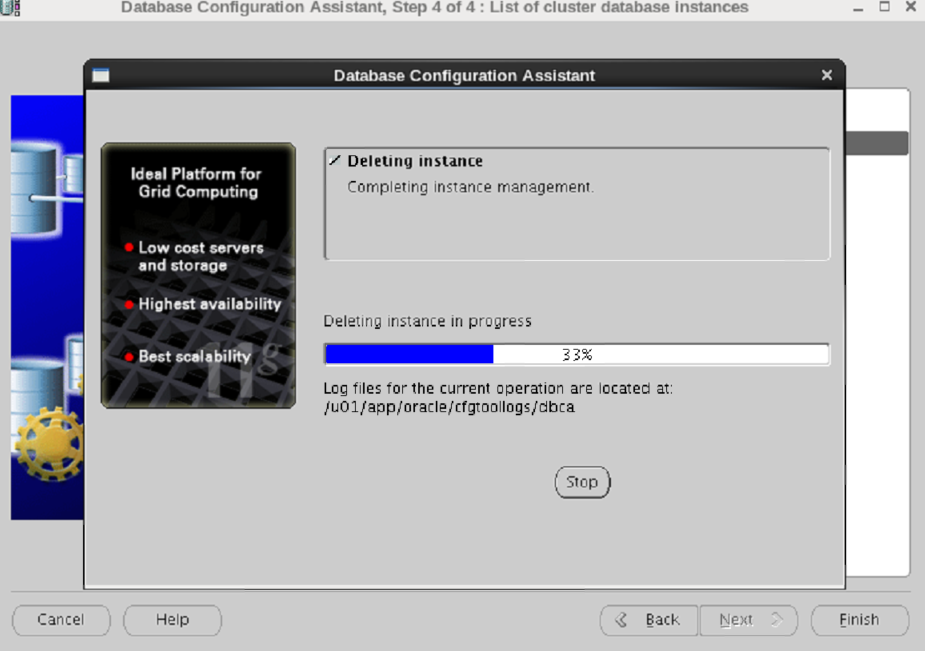

2.3 Delete the database instance

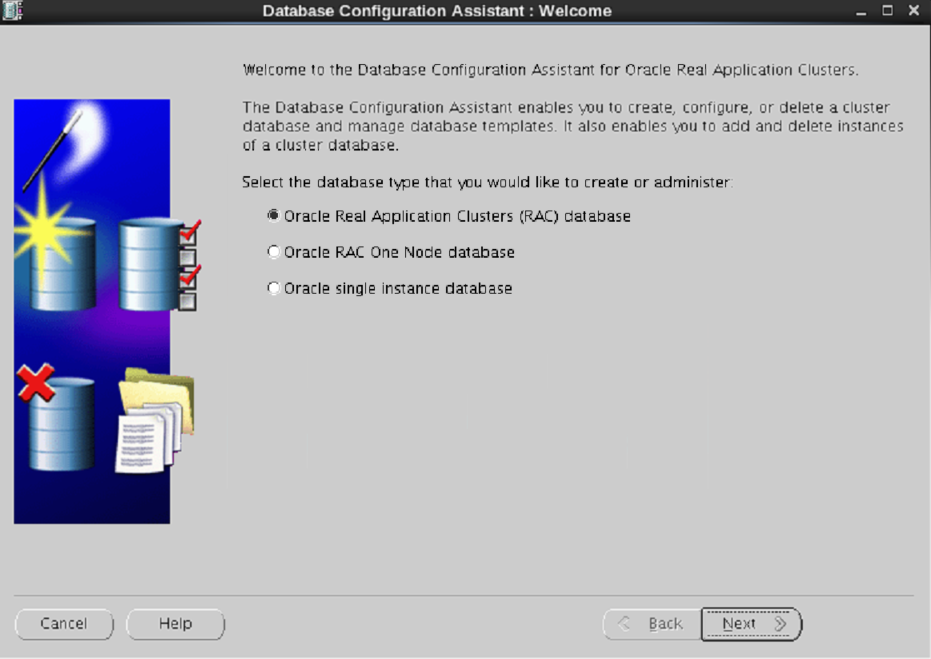

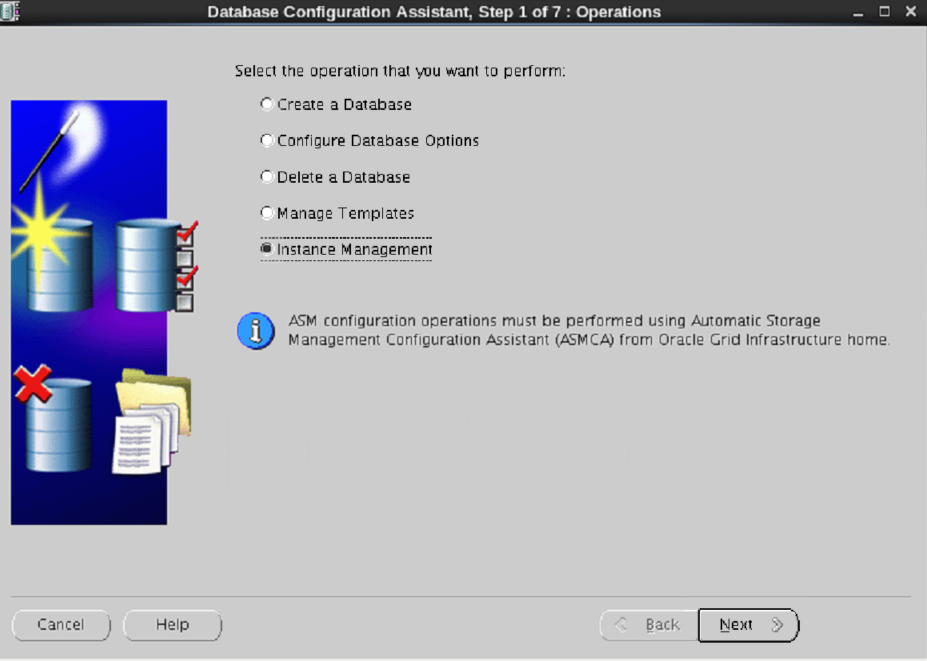

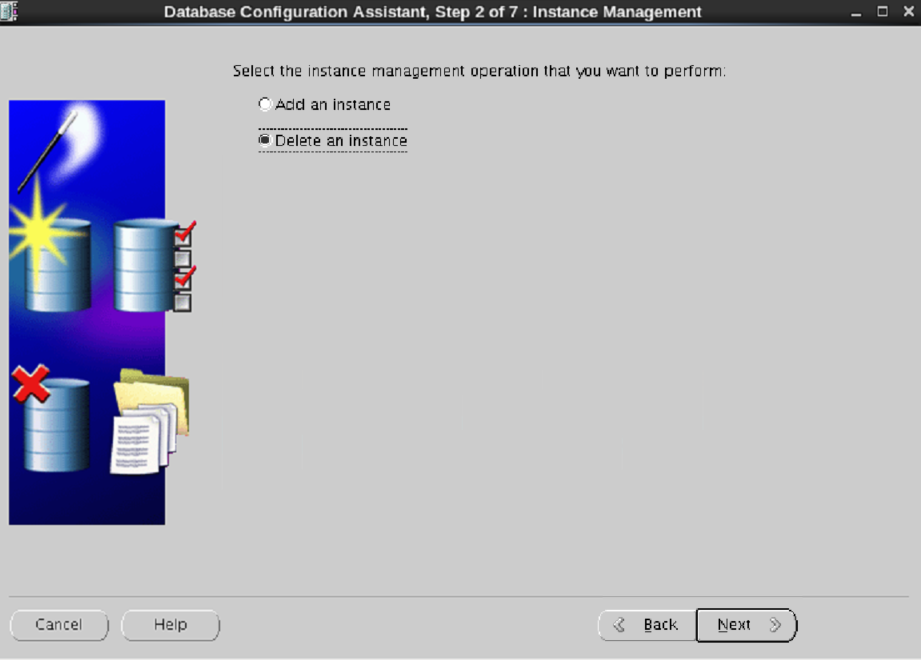

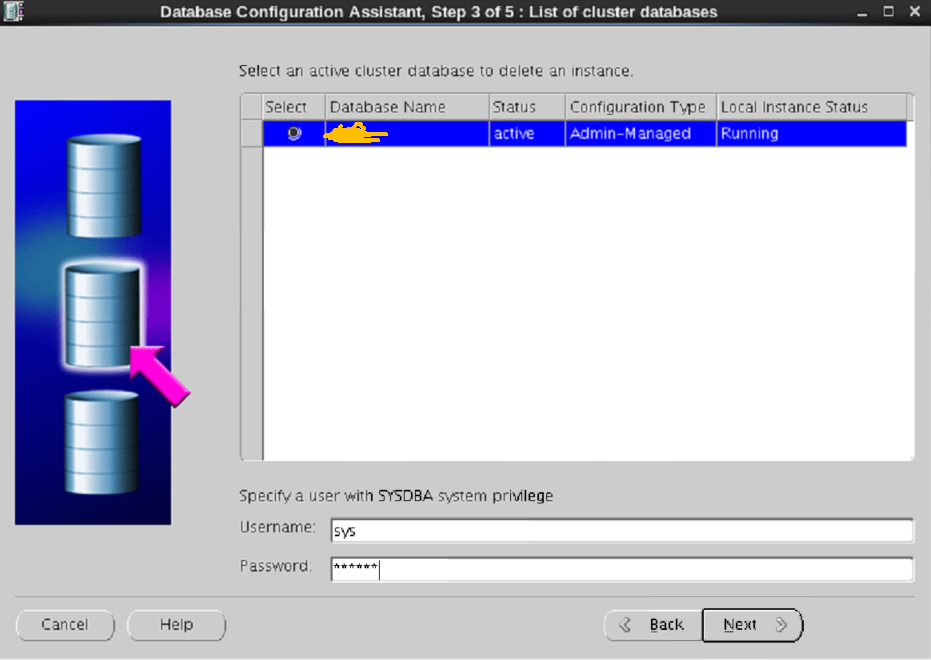

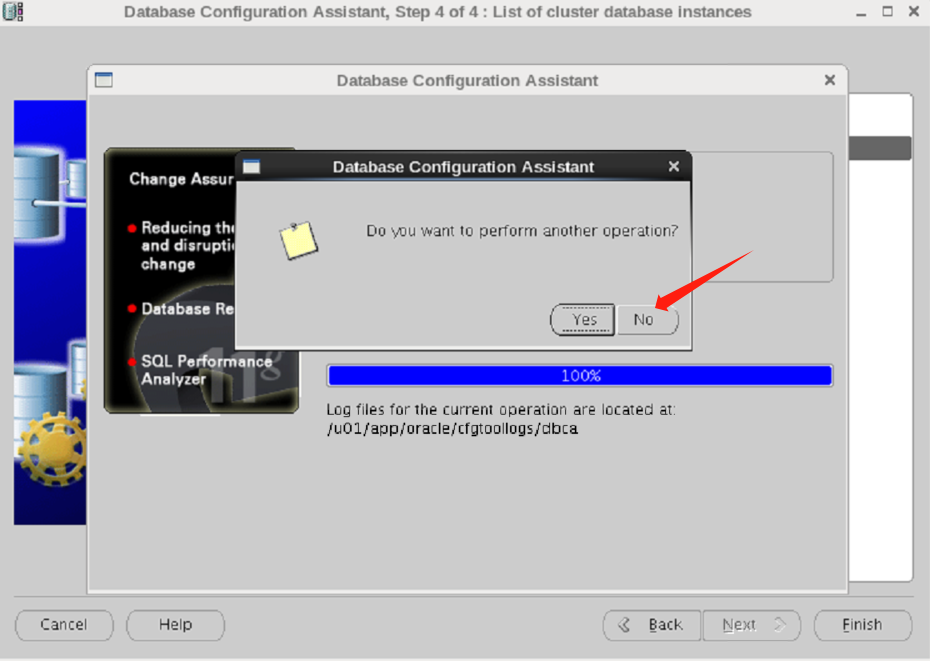

A database instance can be deleted in two ways: through the graphical interface, or from the command line.

2.3.1 Graphical method

2.3.2 Command-line method

--- As the oracle user, on any node that is not being removed
[root@host210 ~]# su - oracle
[oracle@host210 ~]$ dbca -silent -deleteInstance -nodeList host212 -gdbName oradb -instanceName oradb3 -sysDBAUserName sys -sysDBAPassword oracle
Deleting instance
1% complete
2% complete
6% complete
13% complete
20% complete
26% complete
33% complete
40% complete
46% complete
53% complete
60% complete
66% complete
Completing instance management.
100% complete
Look at the log file "/u01/app/oracle/cfgtoollogs/dbca/oradb.log" for further details.
-- The query below shows that instance 3 has been removed
[oracle@host210 ~]$ sqlplus / as sysdba
SQL*Plus: Release 11.2.0.4.0 Production on Thu Sep 21 11:05:38 2023
Copyright (c) 1982, 2013, Oracle.  All rights reserved.
Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,
Data Mining and Real Application Testing options
SQL> set long 99999 head off pages 0 lines 1000
SQL> select inst_id,instance_number,instance_name,host_name,status,thread# from gv$instance;
1 1 oradb1 host210 OPEN 1
2 2 oradb2 host211 OPEN 2
## Contents of /u01/app/oracle/cfgtoollogs/dbca/oradb.log:
[root@host210 ~]# more /u01/app/oracle/cfgtoollogs/dbca/oradb.log
The Database Configuration Assistant will delete the Oracle instance and its associated OFA directory structure. All information about this instance will be deleted.
Do you want to proceed?
Deleting instance
DBCA_PROGRESS : 1%
DBCA_PROGRESS : 2%
DBCA_PROGRESS : 6%
DBCA_PROGRESS : 13%
DBCA_PROGRESS : 20%
DBCA_PROGRESS : 26%
DBCA_PROGRESS : 33%
DBCA_PROGRESS : 40%
DBCA_PROGRESS : 46%
DBCA_PROGRESS : 53%
DBCA_PROGRESS : 60%
DBCA_PROGRESS : 66%
Completing instance management.
DBCA_PROGRESS : 100%
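The before-and-after comparison of `gv$instance` can be reduced to a single check. This is a minimal sketch under assumptions: `instances_on_host` is a hypothetical helper, and it expects the six headerless columns produced by the query above (`inst_id`, `instance_number`, `instance_name`, `host_name`, `status`, `thread#`).

```shell
#!/bin/sh
# instances_on_host: hypothetical helper, not an Oracle tool.
# $1    : host name to look for
# stdin : gv$instance rows as printed above (host name is column 4)
# stdout: instance names running on that host, one per line
instances_on_host() {
  awk -v host="$1" '$4 == host { print $3 }'
}
```

Before the deletion, feeding it the rows from section 2.2 with `host212` as the argument prints `oradb3`, which is the value passed to `-instanceName`. After the deletion it prints nothing.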

2.4 Stop the listener on the node being removed

-- As the grid user, on any node
[grid@host210 ~]$ srvctl config listener -a
Name: LISTENER
Network: 1, Owner: grid
Home: /u01/app/11.2.0/grid on node(s) host210,host211,host212
End points: TCP:1521
[grid@host210 ~]$ srvctl disable listener -l listener -n host212
[grid@host210 ~]$ srvctl stop listener -l listener -n host212
-- Check the cluster status: the listener on host212, the node being removed, is now stopped
[grid@host210 ~]$ crs_stat -t
Name           Type           Target    State     Host
------------------------------------------------------------
ora.CRS.dg     ora....up.type ONLINE    ONLINE    host210
ora.DATA.dg    ora....up.type ONLINE    ONLINE    host210
ora....ER.lsnr ora....er.type ONLINE    ONLINE    host210
ora....N1.lsnr ora....er.type ONLINE    ONLINE    host210
ora.asm        ora.asm.type   ONLINE    ONLINE    host210
ora.cvu        ora.cvu.type   ONLINE    ONLINE    host210
ora.gsd        ora.gsd.type   OFFLINE   OFFLINE
ora....SM1.asm application    ONLINE    ONLINE    host210
ora....10.lsnr application    ONLINE    ONLINE    host210
ora....210.gsd application    OFFLINE   OFFLINE
ora....210.ons application    ONLINE    ONLINE    host210
ora....210.vip ora....t1.type ONLINE    ONLINE    host210
ora....SM2.asm application    ONLINE    ONLINE    host211
ora....11.lsnr application    ONLINE    ONLINE    host211
ora....211.gsd application    OFFLINE   OFFLINE
ora....211.ons application    ONLINE    ONLINE    host211
ora....211.vip ora....t1.type ONLINE    ONLINE    host211
ora....SM3.asm application    ONLINE    ONLINE    host212
ora....12.lsnr application    OFFLINE   OFFLINE
ora....212.gsd application    OFFLINE   OFFLINE
ora....212.ons application    ONLINE    ONLINE    host212
ora....212.vip ora....t1.type ONLINE    ONLINE    host212
ora....network ora....rk.type ONLINE    ONLINE    host210
ora.oc4j       ora.oc4j.type  ONLINE    ONLINE    host210
ora.ons        ora.ons.type   ONLINE    ONLINE    host210
ora.oradb.db   ora....se.type ONLINE    ONLINE    host210
ora.scan1.vip  ora....ip.type ONLINE    ONLINE    host210
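Before disabling the listener it can help to confirm that it is actually configured on the node in question. The sketch below is illustrative only: `listener_nodes` is a hypothetical helper that relies on the `on node(s) a,b,c` fragment visible in the `srvctl config listener -a` output above, and it would need adjusting if that layout differs.

```shell
#!/bin/sh
# listener_nodes: hypothetical helper, not an Oracle tool.
# stdin : output of `srvctl config listener -a`
# stdout: nodes the listener is configured on, one per line
listener_nodes() {
  sed -n 's/.*on node(s) \(.*\)$/\1/p' | tr ',' '\n'
}

# Intended use on a cluster node (not executed here):
#   srvctl config listener -a | listener_nodes | grep -x host212
```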

2.5 First update of the cluster node list

-- On host212, the node being removed, update the node list as the oracle user
[root@host212 ~]# su - oracle
[oracle@host212 ~]$ $ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={host212}" -local
Starting Oracle Universal Installer...
Checking swap space: must be greater than 500 MB.   Actual 65535 MB    Passed
The inventory pointer is located at /etc/oraInst.loc
The inventory is located at /u01/app/oraInventory
'UpdateNodeList' was successful.

2.6 Remove the Oracle database software

Note: run this on the node being removed.

-- On host212, the node being removed, as the oracle user
[oracle@host212 ~]$ $ORACLE_HOME/deinstall/deinstall -local
-- Output of the command above
Checking for required files and bootstrapping ...
Please wait ...
Location of logs /u01/app/oraInventory/logs/
############ ORACLE DEINSTALL & DECONFIG TOOL START ############
######################### CHECK OPERATION START #########################
## [START] Install check configuration ##
Checking for existence of the Oracle home location /u01/app/oracle/product/11.2.0/db_1
Oracle Home type selected for deinstall is: Oracle Real Application Cluster Database
Oracle Base selected for deinstall is: /u01/app/oracle
Checking for existence of central inventory location /u01/app/oraInventory
Checking for existence of the Oracle Grid Infrastructure home /u01/app/11.2.0/grid
The following nodes are part of this cluster: host212
Checking for sufficient temp space availability on node(s) : 'host212'
## [END] Install check configuration ##
Network Configuration check config START
Network de-configuration trace file location: /u01/app/oraInventory/logs/netdc_check2023-09-21_11-26-01-AM.log
Network Configuration check config END
Database Check Configuration START
Database de-configuration trace file location: /u01/app/oraInventory/logs/databasedc_check2023-09-21_11-26-03-AM.log
Database Check Configuration END
Enterprise Manager Configuration Assistant START
EMCA de-configuration trace file location: /u01/app/oraInventory/logs/emcadc_check2023-09-21_11-26-05-AM.log
Enterprise Manager Configuration Assistant END
Oracle Configuration Manager check START
OCM check log file location : /u01/app/oraInventory/logs//ocm_check8572.log
Oracle Configuration Manager check END
######################### CHECK OPERATION END #########################
####################### CHECK OPERATION SUMMARY #######################
Oracle Grid Infrastructure Home is: /u01/app/11.2.0/grid
The cluster node(s) on which the Oracle home deinstallation will be performed are:host212
Since -local option has been specified, the Oracle home will be deinstalled only on the local node, 'host212', and the global configuration will be removed.
Oracle Home selected for deinstall is: /u01/app/oracle/product/11.2.0/db_1
Inventory Location where the Oracle home registered is: /u01/app/oraInventory
The option -local will not modify any database configuration for this Oracle home.
No Enterprise Manager configuration to be updated for any database(s)
No Enterprise Manager ASM targets to update
No Enterprise Manager listener targets to migrate
Checking the config status for CCR
Oracle Home exists with CCR directory, but CCR is not configured
CCR check is finished
Do you want to continue (y - yes, n - no)? [n]: y   --- type y
A log of this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2023-09-21_11-26-00-AM.out'
Any error messages from this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2023-09-21_11-26-00-AM.err'
######################## CLEAN OPERATION START ########################
Enterprise Manager Configuration Assistant START
EMCA de-configuration trace file location: /u01/app/oraInventory/logs/emcadc_clean2023-09-21_11-26-05-AM.log
Updating Enterprise Manager ASM targets (if any)
Updating Enterprise Manager listener targets (if any)
Enterprise Manager Configuration Assistant END
Database de-configuration trace file location: /u01/app/oraInventory/logs/databasedc_clean2023-09-21_11-26-23-AM.log
Network Configuration clean config START
Network de-configuration trace file location: /u01/app/oraInventory/logs/netdc_clean2023-09-21_11-26-23-AM.log
De-configuring Local Net Service Names configuration file...
Local Net Service Names configuration file de-configured successfully.
De-configuring backup files...
Backup files de-configured successfully.
The network configuration has been cleaned up successfully.
Network Configuration clean config END
Oracle Configuration Manager clean START
OCM clean log file location : /u01/app/oraInventory/logs//ocm_clean8572.log
Oracle Configuration Manager clean END
Setting the force flag to false
Setting the force flag to cleanup the Oracle Base
Oracle Universal Installer clean START
Detach Oracle home '/u01/app/oracle/product/11.2.0/db_1' from the central inventory on the local node : Done
Delete directory '/u01/app/oracle/product/11.2.0/db_1' on the local node : Done
Failed to delete the directory '/u01/app/oracle'. The directory is in use.
Delete directory '/u01/app/oracle' on the local node : Failed <<<<
Oracle Universal Installer cleanup completed with errors.
Oracle Universal Installer clean END
## [START] Oracle install clean ##
Clean install operation removing temporary directory '/tmp/deinstall2023-09-21_11-25-55AM' on node 'host212'
## [END] Oracle install clean ##
######################### CLEAN OPERATION END #########################
####################### CLEAN OPERATION SUMMARY #######################
Cleaning the config for CCR
As CCR is not configured, so skipping the cleaning of CCR configuration
CCR clean is finished
Successfully detached Oracle home '/u01/app/oracle/product/11.2.0/db_1' from the central inventory on the local node.
Successfully deleted directory '/u01/app/oracle/product/11.2.0/db_1' on the local node.
Failed to delete directory '/u01/app/oracle' on the local node.
Oracle Universal Installer cleanup completed with errors.
Oracle deinstall tool successfully cleaned up temporary directories.
#######################################################################
############# ORACLE DEINSTALL & DECONFIG TOOL END #############
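In the run above the Oracle home itself was removed, but the tool reported that it could not delete `/u01/app/oracle` because the directory was in use. A small filter can pull such leftovers out of the deinstall output so they are not forgotten during the final cleanup. This is a sketch only: `failed_deletes` is a hypothetical helper, and it matches the summary wording `Failed to delete directory '...'` seen in this log, which may differ in other versions.

```shell
#!/bin/sh
# failed_deletes: hypothetical helper, not part of the deinstall tool.
# stdin : deinstall console output or its *.out log
# stdout: directories the summary reports as not deleted, one per line
failed_deletes() {
  grep -i "failed to delete directory" \
    | sed "s/.*'\(.*\)'.*/\1/" \
    | sort -u
}
```

Applied to the summary above, the only path it prints is `/u01/app/oracle`.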

2.7 Stop nodeapps on the node

Run on any node that is not being removed.

[root@host210 ~]# su - grid
-- Check the cluster status
[grid@host210 ~]$ crs_stat -t
Name           Type           Target    State     Host
------------------------------------------------------------
ora.CRS.dg     ora....up.type ONLINE    ONLINE    host210
ora.DATA.dg    ora....up.type ONLINE    ONLINE    host210
ora....ER.lsnr ora....er.type ONLINE    ONLINE    host210
ora....N1.lsnr ora....er.type ONLINE    ONLINE    host210
ora.asm        ora.asm.type   ONLINE    ONLINE    host210
ora.cvu        ora.cvu.type   ONLINE    ONLINE    host210
ora.gsd        ora.gsd.type   OFFLINE   OFFLINE
ora....SM1.asm application    ONLINE    ONLINE    host210
ora....10.lsnr application    ONLINE    ONLINE    host210
ora....210.gsd application    OFFLINE   OFFLINE
ora....210.ons application    ONLINE    ONLINE    host210
ora....210.vip ora....t1.type ONLINE    ONLINE    host210
ora....SM2.asm application    ONLINE    ONLINE    host211
ora....11.lsnr application    ONLINE    ONLINE    host211
ora....211.gsd application    OFFLINE   OFFLINE
ora....211.ons application    ONLINE    ONLINE    host211
ora....211.vip ora....t1.type ONLINE    ONLINE    host211
ora....SM3.asm application    ONLINE    ONLINE    host212
ora....12.lsnr application    OFFLINE   OFFLINE
ora....212.gsd application    OFFLINE   OFFLINE
ora....212.ons application    ONLINE    ONLINE    host212
ora....212.vip ora....t1.type ONLINE    ONLINE    host212
ora....network ora....rk.type ONLINE    ONLINE    host210
ora.oc4j       ora.oc4j.type  ONLINE    ONLINE    host210
ora.ons        ora.ons.type   ONLINE    ONLINE    host210
ora.oradb.db   ora....se.type ONLINE    ONLINE    host210
ora.scan1.vip  ora....ip.type ONLINE    ONLINE    host210
[grid@host210 ~]$ exit
logout
-- As the oracle user
[root@host210 ~]# su - oracle
[oracle@host210 ~]$ srvctl stop nodeapps -n host212
[oracle@host210 ~]$ exit
logout
-- Check the cluster status again
[root@host210 ~]# su - grid
[grid@host210 ~]$ crs_stat -t
Name           Type           Target    State     Host
------------------------------------------------------------
ora.CRS.dg     ora....up.type ONLINE    ONLINE    host210
ora.DATA.dg    ora....up.type ONLINE    ONLINE    host210
ora....ER.lsnr ora....er.type ONLINE    ONLINE    host210
ora....N1.lsnr ora....er.type ONLINE    ONLINE    host210
ora.asm        ora.asm.type   ONLINE    ONLINE    host210
ora.cvu        ora.cvu.type   ONLINE    ONLINE    host210
ora.gsd        ora.gsd.type   OFFLINE   OFFLINE
ora....SM1.asm application    ONLINE    ONLINE    host210
ora....10.lsnr application    ONLINE    ONLINE    host210
ora....210.gsd application    OFFLINE   OFFLINE
ora....210.ons application    ONLINE    ONLINE    host210
ora....210.vip ora....t1.type ONLINE    ONLINE    host210
ora....SM2.asm application    ONLINE    ONLINE    host211
ora....11.lsnr application    ONLINE    ONLINE    host211
ora....211.gsd application    OFFLINE   OFFLINE
ora....211.ons application    ONLINE    ONLINE    host211
ora....211.vip ora....t1.type ONLINE    ONLINE    host211
ora....SM3.asm application    ONLINE    ONLINE    host212
ora....12.lsnr application    OFFLINE   OFFLINE
ora....212.gsd application    OFFLINE   OFFLINE
ora....212.ons application    OFFLINE   OFFLINE
ora....212.vip ora....t1.type OFFLINE   OFFLINE
ora....network ora....rk.type ONLINE    ONLINE    host210
ora.oc4j       ora.oc4j.type  ONLINE    ONLINE    host210
ora.ons        ora.ons.type   ONLINE    ONLINE    host210
ora.oradb.db   ora....se.type ONLINE    ONLINE    host210
ora.scan1.vip  ora....ip.type ONLINE    ONLINE    host210
## The listener, ONS and VIP of the node being removed now show OFFLINE.
## Its ASM instance is still ONLINE on host212; it is stopped when Clusterware is deconfigured in 2.9.
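Reading two long `crs_stat -t` listings side by side is error-prone, so the remaining resources on one host can be filtered out instead. The following is a sketch under assumptions: `online_on_host` is a hypothetical helper that expects the five-column layout shown above (name, type, target, state, host). `crs_stat` is deprecated in 11.2 in favour of `crsctl status resource -t`, whose layout is different, so this filter applies only to the older command.

```shell
#!/bin/sh
# online_on_host: hypothetical helper, not an Oracle tool.
# $1    : host name
# stdin : output of `crs_stat -t`
# stdout: names of resources whose state is ONLINE on that host
online_on_host() {
  awk -v host="$1" 'tolower($4) == "online" && $5 == host { print $1 }'
}

# Intended use on a cluster node (not executed here):
#   crs_stat -t | online_on_host host212
```

Against the second listing above it prints only `ora....SM3.asm`, the ASM instance that is still running on host212 at this point.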

2.8 Second update of the cluster node list

On any node that is not being removed, update the node list as the oracle user.

[root@host210 ~]# su - oracle
[oracle@host210 ~]$ $ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={host210,host211}"
Starting Oracle Universal Installer...
Checking swap space: must be greater than 500 MB.   Actual 65535 MB    Passed
The inventory pointer is located at /etc/oraInst.loc
The inventory is located at /u01/app/oraInventory
'UpdateNodeList' was successful.

2.9 Deconfigure Clusterware on the node being removed

Note: run this as root on the node being removed.

[root@host212 ~]# /u01/app/11.2.0/grid/crs/install/rootcrs.pl -deconfig -force
-- Output of the command above
Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params
Network exists: 1/192.168.17.0/255.255.255.0/em1, type static
VIP exists: /host210-vip/192.168.17.103/192.168.17.0/255.255.255.0/em1, hosting node host210
VIP exists: /host211-vip/192.168.17.104/192.168.17.0/255.255.255.0/em1, hosting node host211
VIP exists: /host212-vip/192.168.17.105/192.168.17.0/255.255.255.0/em1, hosting node host212
GSD exists
ONS exists: Local port 6100, remote port 6200, EM port 2016
PRKO-2426 : ONS is already stopped on node(s): host212
PRKO-2425 : VIP is already stopped on node(s): host212
PRKO-2440 : Network resource is already stopped.
CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'host212'
CRS-2673: Attempting to stop 'ora.crsd' on 'host212'
CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'host212'
CRS-2673: Attempting to stop 'ora.CRS.dg' on 'host212'
CRS-2673: Attempting to stop 'ora.DATA.dg' on 'host212'
CRS-2677: Stop of 'ora.DATA.dg' on 'host212' succeeded
CRS-2677: Stop of 'ora.CRS.dg' on 'host212' succeeded
CRS-2673: Attempting to stop 'ora.asm' on 'host212'
CRS-2677: Stop of 'ora.asm' on 'host212' succeeded
CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'host212' has completed
CRS-2677: Stop of 'ora.crsd' on 'host212' succeeded
CRS-2673: Attempting to stop 'ora.crf' on 'host212'
CRS-2673: Attempting to stop 'ora.ctssd' on 'host212'
CRS-2673: Attempting to stop 'ora.evmd' on 'host212'
CRS-2673: Attempting to stop 'ora.asm' on 'host212'
CRS-2673: Attempting to stop 'ora.mdnsd' on 'host212'
CRS-2677: Stop of 'ora.crf' on 'host212' succeeded
CRS-2677: Stop of 'ora.mdnsd' on 'host212' succeeded
CRS-2677: Stop of 'ora.evmd' on 'host212' succeeded
CRS-2677: Stop of 'ora.asm' on 'host212' succeeded
CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'host212'
CRS-2677: Stop of 'ora.ctssd' on 'host212' succeeded
CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'host212' succeeded
CRS-2673: Attempting to stop 'ora.cssd' on 'host212'
CRS-2677: Stop of 'ora.cssd' on 'host212' succeeded
CRS-2673: Attempting to stop 'ora.gipcd' on 'host212'
CRS-2677: Stop of 'ora.gipcd' on 'host212' succeeded
CRS-2673: Attempting to stop 'ora.gpnpd' on 'host212'
CRS-2677: Stop of 'ora.gpnpd' on 'host212' succeeded
CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'host212' has completed
CRS-4133: Oracle High Availability Services has been stopped.
Removing Trace File Analyzer
Successfully deconfigured Oracle clusterware stack on this node

2.10 Delete the node from the cluster

Run the delete as root on any node that is not being removed.

[root@host210 ~]# su - grid
[grid@host210 ~]$ olsnodes -t -s
host210 Active Unpinned
host211 Active Unpinned
host212 Inactive Unpinned
[grid@host210 ~]$ exit
logout
[root@host210 ~]# /u01/app/11.2.0/grid/bin/crsctl delete node -n host212
CRS-4661: Node host212 successfully deleted.
[root@host210 ~]# su - grid
[grid@host210 ~]$ olsnodes -t -s
host210 Active Unpinned
host211 Active Unpinned
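In the transcript above, host212 shows `Inactive Unpinned` before `crsctl delete node` is run. A guard that checks exactly this condition might look like the sketch below. `ready_to_delete` is a hypothetical helper, and the rule it encodes (inactive and unpinned) is taken from the state observed in this walkthrough.

```shell
#!/bin/sh
# ready_to_delete: hypothetical helper, not an Oracle tool.
# $1    : node name
# stdin : output of `olsnodes -s -t` (columns: node, status, pin state)
# exit  : 0 if the node is listed as Inactive and Unpinned, 1 otherwise
ready_to_delete() {
  awk -v node="$1" '
    $1 == node {
      found = 1
      ok = (tolower($2) == "inactive" && tolower($3) == "unpinned")
    }
    END { if (found && ok) exit 0; exit 1 }
  '
}

# Intended use as root on a remaining node (not executed here):
#   olsnodes -s -t | ready_to_delete host212 && crsctl delete node -n host212
```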

2.11 Third update of the cluster node list

Update the node list on the node being removed.

-- As the grid user, on the node being removed
[root@host212 ~]# su - grid
[grid@host212 ~]$ $ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={host212}" CRS=TRUE -local
Starting Oracle Universal Installer...
Checking swap space: must be greater than 500 MB.   Actual 65535 MB    Passed
The inventory pointer is located at /etc/oraInst.loc
The inventory is located at /u01/app/oraInventory
'UpdateNodeList' was successful.

2.12 Remove the Grid Infrastructure software

Note: run this on the node being removed.

-- As the grid user
[root@host212 ~]# su - grid
[grid@host212 ~]$ $ORACLE_HOME/deinstall/deinstall -local
-- Output of the command above
Checking for required files and bootstrapping ...
Please wait ...
Location of logs /tmp/deinstall2023-09-21_11-46-20AM/logs/
############ ORACLE DEINSTALL & DECONFIG TOOL START ############
######################### CHECK OPERATION START #########################
## [START] Install check configuration ##
Checking for existence of the Oracle home location /u01/app/11.2.0/grid
Oracle Home type selected for deinstall is: Oracle Grid Infrastructure for a Cluster
Oracle Base selected for deinstall is: /u01/app/grid
Checking for existence of central inventory location /u01/app/oraInventory
Checking for existence of the Oracle Grid Infrastructure home
The following nodes are part of this cluster: host212
Checking for sufficient temp space availability on node(s) : 'host212'
## [END] Install check configuration ##
Traces log file: /tmp/deinstall2023-09-21_11-46-20AM/logs//crsdc.log
Enter an address or the name of the virtual IP used on node "host212"[host212-vip]
 >   --- press Enter
The following information can be collected by running "/sbin/ifconfig -a" on node "host212"
Enter the IP netmask of Virtual IP "192.168.17.105" on node "host212"[255.255.255.0]
 >   --- press Enter
Enter the network interface name on which the virtual IP address "192.168.17.105" is active
 >   --- press Enter
Enter an address or the name of the virtual IP[]
 >   --- press Enter
Network Configuration check config START
Network de-configuration trace file location: /tmp/deinstall2023-09-21_11-46-20AM/logs/netdc_check2023-09-21_11-46-48-AM.log
Specify all RAC listeners (do not include SCAN listener) that are to be de-configured [LISTENER]:
Network Configuration check config END
Asm Check Configuration START
ASM de-configuration trace file location: /tmp/deinstall2023-09-21_11-46-20AM/logs/asmcadc_check2023-09-21_11-46-52-AM.log
######################### CHECK OPERATION END #########################
####################### CHECK OPERATION SUMMARY #######################
Oracle Grid Infrastructure Home is:
The cluster node(s) on which the Oracle home deinstallation will be performed are:host212
Since -local option has been specified, the Oracle home will be deinstalled only on the local node, 'host212', and the global configuration will be removed.
Oracle Home selected for deinstall is: /u01/app/11.2.0/grid
Inventory Location where the Oracle home registered is: /u01/app/oraInventory
Following RAC listener(s) will be de-configured: LISTENER
Option -local will not modify any ASM configuration.
Do you want to continue (y - yes, n - no)? [n]: y   --- type y and press Enter
A log of this session will be written to: '/tmp/deinstall2023-09-21_11-46-20AM/logs/deinstall_deconfig2023-09-21_11-46-24-AM.out'
Any error messages from this session will be written to: '/tmp/deinstall2023-09-21_11-46-20AM/logs/deinstall_deconfig2023-09-21_11-46-24-AM.err'
######################## CLEAN OPERATION START ########################
ASM de-configuration trace file location: /tmp/deinstall2023-09-21_11-46-20AM/logs/asmcadc_clean2023-09-21_11-46-58-AM.log
ASM Clean Configuration END
Network Configuration clean config START
Network de-configuration trace file location: /tmp/deinstall2023-09-21_11-46-20AM/logs/netdc_clean2023-09-21_11-46-58-AM.log
De-configuring RAC listener(s): LISTENER
De-configuring listener: LISTENER
Stopping listener on node "host212": LISTENER
Warning: Failed to stop listener. Listener may not be running.
Listener de-configured successfully.
De-configuring Naming Methods configuration file...
Naming Methods configuration file de-configured successfully.
De-configuring backup files...
Backup files de-configured successfully.
The network configuration has been cleaned up successfully.
Network Configuration clean config END
---------------------------------------->
The deconfig command below can be executed in parallel on all the remote nodes. Execute the command on the local node after the execution completes on all the remote nodes.
Run the following command as the root user or the administrator on node "host212".
/tmp/deinstall2023-09-21_11-46-20AM/perl/bin/perl -I/tmp/deinstall2023-09-21_11-46-20AM/perl/lib -I/tmp/deinstall2023-09-21_11-46-20AM/crs/install /tmp/deinstall2023-09-21_11-46-20AM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2023-09-21_11-46-20AM/response/deinstall_Ora11g_gridinfrahome1.rsp"
Press Enter after you finish running the above commands
================================================================
-- Open a new session on the node being removed as root and run the command shown above
[root@host212 ~]# /tmp/deinstall2023-09-21_11-46-20AM/perl/bin/perl -I/tmp/deinstall2023-09-21_11-46-20AM/perl/lib -I/tmp/deinstall2023-09-21_11-46-20AM/crs/install /tmp/deinstall2023-09-21_11-46-20AM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2023-09-21_11-46-20AM/response/deinstall_Ora11g_gridinfrahome1.rsp"
-- Output of the command above
Using configuration parameter file: /tmp/deinstall2023-09-21_11-46-20AM/response/deinstall_Ora11g_gridinfrahome1.rsp
****Unable to retrieve Oracle Clusterware home.
Start Oracle Clusterware stack and try again.
CRS-4047: No Oracle Clusterware components configured.
CRS-4000: Command Stop failed, or completed with errors.
################################################################
# You must kill processes or reboot the system to properly #
# cleanup the processes started by Oracle clusterware #
################################################################
Either /etc/oracle/olr.loc does not exist or is not readable
Make sure the file exists and it has read and execute access
Either /etc/oracle/olr.loc does not exist or is not readable
Make sure the file exists and it has read and execute access
Failure in execution (rc=-1, 256, No such file or directory) for command /etc/init.d/ohasd deinstall
error: package cvuqdisk is not installed
Successfully deconfigured Oracle clusterware stack on this node
-- Once the command has finished, return to the original session
================================================================
<----------------------------------------
--- press Enter
Remove the directory: /tmp/deinstall2023-09-21_11-46-20AM on node:
Setting the force flag to false
Setting the force flag to cleanup the Oracle Base
Oracle Universal Installer clean START
Detach Oracle home '/u01/app/11.2.0/grid' from the central inventory on the local node : Done
Delete directory '/u01/app/11.2.0/grid' on the local node : Done
Delete directory '/u01/app/oraInventory' on the local node : Done
Delete directory '/u01/app/grid' on the local node : Done
Oracle Universal Installer cleanup was successful.
Oracle Universal Installer clean END
## [START] Oracle install clean ##
Clean install operation removing temporary directory '/tmp/deinstall2023-09-21_11-46-20AM' on node 'host212'
## [END] Oracle install clean ##
######################### CLEAN OPERATION END #########################
####################### CLEAN OPERATION SUMMARY #######################
Following RAC listener(s) were de-configured successfully: LISTENER
Oracle Clusterware is stopped and successfully de-configured on node "host212"
Oracle Clusterware is stopped and de-configured successfully.
Successfully detached Oracle home '/u01/app/11.2.0/grid' from the central inventory on the local node.
Successfully deleted directory '/u01/app/11.2.0/grid' on the local node.
Successfully deleted directory '/u01/app/oraInventory' on the local node.
Successfully deleted directory '/u01/app/grid' on the local node.
Oracle Universal Installer cleanup was successful.
Run 'rm -rf /etc/oraInst.loc' as root on node(s) 'host212' at the end of the session.
Run 'rm -rf /opt/ORCLfmap' as root on node(s) 'host212' at the end of the session.
Run 'rm -rf /etc/oratab' as root on node(s) 'host212' at the end of the session.
Oracle deinstall tool successfully cleaned up temporary directories.
#######################################################################
############# ORACLE DEINSTALL & DECONFIG TOOL END #############

2.13 Fourth update of the cluster node list

Run on any node that is not being removed.

-- As the grid user
[root@host210 ~]# su - grid
[grid@host210 ~]$ $ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={host210,host211}" CRS=TRUE
Starting Oracle Universal Installer...
Checking swap space: must be greater than 500 MB.   Actual 65535 MB    Passed
The inventory pointer is located at /etc/oraInst.loc
The inventory is located at /u01/app/oraInventory
'UpdateNodeList' was successful.
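The four node-list updates differ only in which home they target, which nodes they list, and whether `CRS=TRUE` and `-local` are present. The sketch below prints the four command lines side by side so the pattern is easier to see. `update_nodelist_cmd` is a hypothetical helper that only prints and never runs the installer; the two home paths are the ones that appear in the deinstall logs of this walkthrough.

```shell
#!/bin/sh
# update_nodelist_cmd: hypothetical helper; prints a command, runs nothing.
# $1 home path   $2 comma-separated node list
# $3 yes|no (add CRS=TRUE)   $4 yes|no (add -local)
update_nodelist_cmd() {
  local home="$1" nodes="$2" crs="$3" local_only="$4"
  local cmd="$home/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$home \"CLUSTER_NODES={$nodes}\""
  if [ "$crs" = yes ]; then cmd="$cmd CRS=TRUE"; fi
  if [ "$local_only" = yes ]; then cmd="$cmd -local"; fi
  printf '%s\n' "$cmd"
}

# Sections 2.5, 2.8, 2.11 and 2.13, in order:
update_nodelist_cmd /u01/app/oracle/product/11.2.0/db_1 host212 no yes          # oracle user on host212
update_nodelist_cmd /u01/app/oracle/product/11.2.0/db_1 host210,host211 no no   # oracle user on host210
update_nodelist_cmd /u01/app/11.2.0/grid host212 yes yes                        # grid user on host212
update_nodelist_cmd /u01/app/11.2.0/grid host210,host211 yes no                 # grid user on host210
```

The pattern is that the node being removed always updates its own inventory with `-local` and lists only itself, while a surviving node lists only the survivors. The grid home adds `CRS=TRUE`.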

2.14 Clean up the removed node

To clear the remaining database directories and files on the removed node, run the following as root on that node.

[root@host212 ~]# rm -rf /tmp/*
# .[!.]* matches hidden entries without also matching . and ..
[root@host212 ~]# rm -rf /tmp/.[!.]*
[root@host212 ~]# rm -f /usr/local/bin/dbhome
[root@host212 ~]# rm -f /usr/local/bin/oraenv
[root@host212 ~]# rm -f /usr/local/bin/coraenv
[root@host212 ~]# rm -rf /opt/ORCLfmap
# Remove the database software installation directory
[root@host212 ~]# rm -rf /u01/app/*
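Wildcard deletes as root are easy to get wrong, so a more conservative variant is to remove only an explicit list of paths and to preview them first. The sketch below is one way to do that under stated assumptions: `cleanup_oracle_leftovers` is a hypothetical helper, its path list combines the files named in this section with the three `rm -rf` hints printed at the end of the Grid deinstall log, and the root-prefix argument exists only so the function can be tried against a scratch directory. It deliberately leaves `/tmp` and `/u01/app` to the operator.

```shell
#!/bin/sh
# cleanup_oracle_leftovers: hypothetical helper, not an Oracle tool.
# $1 root prefix ("" on the real host, a scratch directory when testing)
# $2 mode: "dry-run" only lists what exists, "delete" also removes it
cleanup_oracle_leftovers() {
  local root="$1" mode="$2" p target
  for p in /usr/local/bin/dbhome /usr/local/bin/oraenv /usr/local/bin/coraenv \
           /opt/ORCLfmap /etc/oraInst.loc /etc/oratab; do
    target="$root$p"
    [ -e "$target" ] || continue      # skip paths that are already gone
    printf '%s\n' "$target"           # report every path that is acted on
    if [ "$mode" = delete ]; then
      rm -rf -- "$target"
    fi
  done
}

# Intended use as root on the removed node (not executed here):
#   cleanup_oracle_leftovers "" dry-run
#   cleanup_oracle_leftovers "" delete
```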

Finally, verify the result on any node that is not being removed.

[root@host210 ~]# su - grid
[grid@host210 ~]$ cluvfy stage -post nodedel -n host212
Performing post-checks for node removal
Checking CRS integrity...
Clusterware version consistency passed
CRS integrity check passed
Node removal check passed
Post-check for node removal was successful.
[grid@host210 ~]$ crs_stat -t
Name           Type           Target    State     Host
------------------------------------------------------------
ora.CRS.dg     ora....up.type ONLINE    ONLINE    host210
ora.DATA.dg    ora....up.type ONLINE    ONLINE    host210
ora....ER.lsnr ora....er.type ONLINE    ONLINE    host210
ora....N1.lsnr ora....er.type ONLINE    ONLINE    host210
ora.asm        ora.asm.type   ONLINE    ONLINE    host210
ora.cvu        ora.cvu.type   ONLINE    ONLINE    host210
ora.gsd        ora.gsd.type   OFFLINE   OFFLINE
ora....SM1.asm application    ONLINE    ONLINE    host210
ora....10.lsnr application    ONLINE    ONLINE    host210
ora....210.gsd application    OFFLINE   OFFLINE
ora....210.ons application    ONLINE    ONLINE    host210
ora....210.vip ora....t1.type ONLINE    ONLINE    host210
ora....SM2.asm application    ONLINE    ONLINE    host211
ora....11.lsnr application    ONLINE    ONLINE    host211
ora....211.gsd application    OFFLINE   OFFLINE
ora....211.ons application    ONLINE    ONLINE    host211
ora....211.vip ora....t1.type ONLINE    ONLINE    host211
ora....network ora....rk.type ONLINE    ONLINE    host210
ora.oc4j       ora.oc4j.type  ONLINE    ONLINE    host210
ora.ons        ora.ons.type   ONLINE    ONLINE    host210
ora.oradb.db   ora....se.type ONLINE    ONLINE    host210
ora.scan1.vip  ora....ip.type ONLINE    ONLINE    host210
[root@host210 ~]# su - oracle
[oracle@host210 ~]$ sqlplus / as sysdba
SQL*Plus: Release 11.2.0.4.0 Production on Thu Sep 21 11:56:39 2023
Copyright (c) 1982, 2013, Oracle.  All rights reserved.
Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,
Data Mining and Real Application Testing options
SQL> set long 99999 head off pages 0 lines 1000
SQL> select inst_id,instance_number,instance_name,host_name,status,thread# from gv$instance;
1 1 oradb1 host210 OPEN 1
2 2 oradb2 host211 OPEN 2
SQL> select inst_id,thread#,status,groups,instance from gv$thread;
2 1 OPEN 2 oradb1
2 2 OPEN 2 oradb2
1 1 OPEN 2 oradb1
1 2 OPEN 2 oradb2
## The cluster no longer contains any information about the removed node.
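The `gv$thread` output above lists every thread once per surviving instance, which makes it harder to see at a glance that only threads 1 and 2 remain. The sketch below collapses those rows to the distinct thread numbers. `thread_numbers` is a hypothetical helper and assumes the headerless five-column layout of the query above, with `thread#` in column 2.

```shell
#!/bin/sh
# thread_numbers: hypothetical helper, not an Oracle tool.
# stdin : gv$thread rows as printed above (thread# is column 2)
# stdout: distinct thread numbers in ascending order, one per line
thread_numbers() {
  awk 'NF >= 2 { print $2 }' | sort -un
}
```

For the four rows shown above it prints `1` and `2`, so no thread 3 remains for the removed instance oradb3.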
